Designing, deploying, and scaling a new data center is a time- and capital-intensive process that can take 12 to 18 months. However, new network designs, processes, and technologies can help teams create repeatable models that scale. As a result, it is becoming easier, faster, and less expensive for owners and operators to roll out new data center capacity and handle more workloads at existing facilities. A LinkedIn best practice guide defines scalability as “the ability of a data center to handle increasing or decreasing workloads without compromising on quality or service level agreements.” By planning for scalability now, data center owners and operators can capture more business growth, flexibly responding to internal and external customers’ needs for more workload processing.

This article provides best practices for planning for scalability in data center design. By reading it, data center owners and operators can future-proof designs and operations, ensuring they can scale to accommodate more workloads and changing business requirements.

The article covers:

- Understanding data center scalability requirements: Data centers must support business growth with scalable capacity and operations. They can do this by determining current and future workload requirements and leveraging emerging technologies to automate and improve processes.

- Designing infrastructure for scalability: Teams can harness modular data centers, scalable rack configurations, and scalable servers to speed the deployment of new capacity and use equipment for different workload requirements.

- Deploying redundant systems to ensure high availability of applications: Mission-critical and digital businesses need continuous power. Data center teams create redundancy at multiple levels, including power, network, and data storage, to keep operations up and functioning in all conditions.

- Doing effective network planning: Bandwidth-intensive applications gobble more traffic and resources. Data center teams can supply it by using high-speed interconnects to link data centers, designing network topologies for scalability, and implementing load-balancing strategies.

- Deploying storage solutions for scalability: Distributed storage systems and scalable file systems provide data center teams with options for storing data at different tiers, balancing availability, performance, and cost goals.

- Simplifying operations with virtualization and hyperconverged infrastructures (HCIs): Virtualization enables teams to consolidate footprints and improve resource utilization. HCIs bundle compute, virtualization, storage, and networking in a single cluster, making infrastructure easier to manage and scale.

- Leveraging microservices and containerization: Teams use microservices, containers, and orchestration processes to simplify management, speed DevOps processes, and improve operational stability.

- Considering data center energy efficiency and environmental objectives: Increasing energy efficiency is a key goal for data center teams, as it reduces costs and improves the sustainability of processes. Teams use green data center designs, sustainability insights, renewable energy, innovative cooling systems, and power usage effectiveness metric improvements to future-proof business models and scale.

- Implementing performance monitoring: Monitoring and management tools help teams do better capacity planning and continually optimize operations.

- Harnessing automation for scaling: Small teams can use Infrastructure as Code (IaC), automation, and automated scaling to manage growing IT environments while increasing process consistency.

- Building security into scalable data center designs: Data center teams must meet industry, regional, and customer requirements to keep data secure. They can do so by using access controls, encryption and various software products to improve security and meet compliance requirements.

Understanding Data Center Scalability Requirements

Recent market trends have increased enterprise demand for data center capacity. Over the past few years, enterprises have accelerated the pace of digitization. During the pandemic, companies digitized products and services and enabled remote workforces, leveraging more cloud infrastructure and expanding core-to-edge networks. Now, leaders and customers view digitization as a required competency and essential to keep pace with peers and competitors. More recently, the launch of ChatGPT, Bard, Claude, and other large-language model platforms has increased enterprise demand for generative and discriminative AI training and inference workloads. About 20% of current data center capacity is used for AI workloads, states IDC.

According to a report from JLL, enterprises are competing with colocation facilities and hyperscalers for new capacity in most markets due to the AI craze. Data center development companies have sold out primary market capacity, are expanding secondary markets, and are opening up capacity in tertiary markets. That means that enterprise teams must plan far ahead if they want to lease new capacity. Alternatively, they can scale existing and new capacity by using these strategies.

Determining workload requirements: Teams will want to assess current versus future workloads to predict growth and resource needs. For example, AI requires more computing power and increases heat loads. As a result, many enterprises will convert rows and rooms to AI workloads in the coming years, requiring new power and cooling strategies. Many of these conversions will happen at edge data centers, enabling teams to ensure data sovereignty and reduce processing latency. Hyperscalers will deploy AI-only data centers with fit-for-purpose technology to support densifying racks and power loads of 50 MW or higher.

Leveraging emerging technologies: Current and emerging processes and technologies that can help teams scale include modular data centers and power skids, redundant systems, high-speed bandwidth, virtualization and containerization, distributed and scalable storage systems, innovative data center designs, remote monitoring, automation, and advanced security.

Read:

Evolving Data Center Management Practices to Keep Pace with Change

Understanding the Impact of Edge Computing on Data Center Management

Designing Infrastructure for Scalability

Data center owners have new options for deploying capacity. Instead of building their facilities, they can leverage standardized, prefabricated systems to speed up the time to deploy capacity.

Using prefabricated modular data centers (PFMs): PFMs offer building blocks of capacity, with integrated power distribution, backup power supply, cooling, and fire suppression. In addition, manufacturers offer pre-built, pre-integrated power and cooling modules and smart cabinets, rows, and aisles that can be deployed in existing white space. These systems are built onsite at manufacturers and transported to data center owners’ desired locations.

Whereas traditional data centers take up to 18 months to deploy, PFMs can be deployed up to 50% faster. As standardized solutions, they also provide a global remedy to the challenge of deploying new capacity at core and edge sites, reducing operational complexity.

Modular data centers have become so popular that 99% of operators say they will be part of their strategy. Almost half said PFMs would account for a quarter of their IT loads in 2023.

Using scalable rack configurations: Data center operators can extend scalability to racks and servers. Scalable racks have an open infrastructure with a standardized rack and power distribution unit (PDU) design that enables teams to source and use hardware components from different vendors. Scalable racks give teams greater flexibility to buy the best equipment and potentially maximize cost savings by purchasing volume.

Deploying scalable servers: Scalable servers, such as Intel Xeon Scalable CPU-based servers, come in different socket configurations (one, two, and four) to improve core performance and throughput. As a result, they can be used for a wide array of applications, including high-performance computing, virtualization, AI, and more.

Do a deep dive:

A Free Guide to Data Center Power

A Free Guide to Data Center Racks

Deploying Redundant Systems to Ensure High Availability of Applications

As any data center team knows, creating system redundancy is key to ensuring application availability and performance. Teams design data centers to have redundancy at multiple levels, including power, network, and storage. Data center tiers govern the level of redundancy required onsite. For example, a Tier 1 data center provides basic infrastructure and relies on a single path for power and cooling. As a result, there is minimal redundancy. Tier 4 data centers, on the other hand, offer the highest level of redundancy and fault tolerance, providing multiple pathways for power and cooling and fully redundant components.

Creating power redundancy: Power redundancy involves duplicating critical infrastructure components and systems to ensure an uninterrupted supply of power in the event of a device failure or grid outage.

Data centers use power distribution technology, including transformers, power distribution units, power cables and connectors, and power breakers, to receive power from utilities, step it up and down, and distribute it to devices and equipment.

They create redundancy by using a backup power supply, including uninterruptible power supplies (UPSs), backup generators, and battery energy storage systems (BESSs), to provide anywhere from a few minutes to several days of power in the event of a grid failure. Automatic transfer switches (ATSs) instantly switch the load between the grid and a backup power source to protect equipment and workload performance in the event of a disruption.

Ensuring network redundancy: Data center teams can create network redundancy using the N model. A data center with an N redundancy level has adequate power and cooling to keep all equipment operational. To create redundancy, operators need to add extra systems. N+1 redundancy means the facility has one extra system beyond core requirements. 2N redundancy means that there is a mirrored system that can take over in the event of failure. Teams can progress beyond 2N to 2N+1 or even higher, depending on their requirements.

Creating data storage redundancy: Ensuring data storage redundancy is key to achieving business continuity and disaster recovery goals. Data is typically replicated in the primary data center region and then to a second region to ensure it is recoverable in the event of a significant disaster in the first location. While data used to be physically backed up and transported offsite, most companies now use cloud services for their scalability and ease of recovery. For example, Microsoft Azure offers multiple data redundancy options for its customers, including its premium geo-zone-redundant storage, where data is copied across three Azure availability zones in the primary region and then to a secondary region.

Leading CMDBs auto-discover companies’ entire storage environment, providing deep visibility into data and backup holdings and helping teams pinpoint and predict bottlenecks that can cause operational issues. By continually improving performance, configuration, and capacity, teams can make sure that data center storage performs as expected. In addition, mapping storage elements enables teams to review replication strategies, so that they can make the best decisions to improve capacity and ensure plans meet business continuity and disaster recovery goals.

Doing Effective Network Planning

Data centers require bandwidth to support a growing array of network devices requiring connectivity and enable ultra-fast data transmission speeds internally and externally. Internally, bandwidth supports LAN and SAN traffic; externally, it supports WAN processes and Internet traffic.

Network Computing recommends that data center teams quantify the total traffic flow through LAN devices, such as firewalls, load balancers, IPSs, and web application firewalls. WAN connectivity is driven by the total number of remote sites and end-users and application requirements. To estimate WAN bandwidth, teams should consider voice and video demands, local or centralized Internet access, and MPLS or SD-WAN service requirements.

Leveraging high-speed interconnects: High-speed optical interconnects link data centers to share data and content, balance workloads, or enable business continuity and disaster recovery plans. These high-speed interconnects can link multiple facilities on a single campus, connect data centers in a metro area, or link data centers across countries with undersea fiber optic cables.

Designing network topologies for scalability: Data centers, which have provided 10 GbE and 40 GbE bandwidth, are moving to 100, 400, and even 800 GbE to handle enterprises’ growing business needs. This fast growth requires a new approach to network topologies.

Network topologies define the physical and logical network structure and how core components are connected. Companies have traded three-tier hierarchical network models for N-tier layered architectures, which distribute application components across different tiers to increase redundancy, and microservices architectures, which separate application components into logically isolated containers that run in clusters.

Large web and cloud providers use massively scalable data center fabrics and topologies such as three-tiered spine-leaf designs or a hyperscale fabric plane Clos design to support the vast number of servers, enabling large distributed applications, according to Cisco. The leaf-spine network technology enables large data centers to optimize connections for east-west traffic, improve application reliability, and scale easily. The Clos design provides nonblocking, any-to-any connectivity that reduces the number of crosspoints enterprises need to enable call switching.

Implementing Load Balancing Strategies

Load balancing takes incoming requests and distributes them across a server farm. Load balancing uses a physical or virtual appliance to determine which server handles the incoming request best. This process avoids overloading resources. It can also be used for failover, redirecting workloads to backup servers if the primary targets fail. Together, these strategies improve network performance and reliability.

Data center teams can use hardware load balancers with built-in software and virtualization capabilities to handle application traffic at scale. Software load balancers run on virtual machines or white box servers, typically as application delivery controllers. Cloud-based load balancing uses its infrastructure to balance network loads, incoming traffic from an HTTP address, or internal traffic. These solutions use algorithms to determine where traffic gets routed.

Deploying Storage Solutions for Scalability

Data center and IT teams have different options for storing data. They typically tier data based on how it is used to reduce storage costs. Options include:

Distributed storage systems: These software-defined systems allocate data storage across different servers and data centers. Used by hyperscalers to create massively scalable cloud storage systems, distributed storage systems can store files, data in block storage, and data in object storage. In addition to providing businesses with greater flexibility, distributed storage systems improve performance, scalability, and reliability. Distributed storage systems can be used across public, private, and hybrid clouds.

Scalable file systems: These systems distribute data across servers, providing data storage that’s easily accessible, available, scalable, and secure. Scalable file systems also provide transparency, a security mechanism that protects file system data from other systems and users. This means shielding the direct file system structure and which files are replicated, enforcing naming conventions, and only enabling user access after secure logins, according to TechTarget.

Tiering data: Data is typically classified as mission-critical, hot, warm, and cold and is then assigned tier levels ranging from Tier 0 to Tier 3 or even greater.

According to TechTarget, mission-critical data needs to be instantly available and thus is stored in high-performance, high-cost storage media such as solid-state drives or storage-class memory. Hot data, used daily, also needs high-performing storage, such as solid-state drives, hard disk drives, and hybrid storage systems. Warm data that is accessed less frequently can be stored in hard disk drives, backup appliances, or as tape or cloud storage. Finally, cold data is rarely used and thus can be stored in slow-spinning hard-disk drives, optical discs, tape storage, or archival cloud storage, which reduce storage costs.

How Virtualization and Hyper-Converged Infrastructures Simplify Operations and Support Growth

Most data center teams use virtualization because it enables them to consolidate their footprint while gaining other business benefits. With virtualization, software is used to abstract a resource away from its software and hardware. Teams can virtualize networks, servers, storage, data, desktops, and applications using hypervisors. This enables teams to:

- Use virtualization to optimize resources: Instead of deploying myriad new devices to support business growth, teams can consolidate resources. Hardware elements can be divided into multiple virtual machines, each with an operating system. This improves server utilization, a long-time challenge for data center teams. With fewer devices, teams can also reduce power and cooling costs. However, running virtual machines still requires significant RAM and CPU resources.

- Increase business flexibility by using virtualization: Virtualization improves flexibility because it can be used on-premises and in the cloud, virtual machines can be flexibly allocated and moved across physical resources, and teams aren’t dependent on specific hardware vendors. In addition, virtualization makes it easier for teams to scale workloads up and down as needed. However, teams still face software development and management challenges because they manage more virtual machines and operating systems.

- Create hyper-converged infrastructures (HCIs): HCIs create building blocks of compute, virtualization, storage, and networking. HCIs abstract network and computing resources, provide a single management platform for all resources, and enable the automation of key tasks. As a result, teams can easily deploy any workload, scale infrastructure, and manage hybrid cloud environments.

Leveraging Containerization and Microservices

Containerization takes virtualization a step further. A form of virtualization, containerization uses software to isolate key application components from underlying devices. It bundles application code with all the files and libraries needed to run on any infrastructure. However, containerization enables teams to run multiple operating systems (OSs) within a single instance of an OS. As a result, it is even more efficient and scalable than virtualization.

Using container orchestration to speed development processes: DevOps teams use lightweight, portable, open-source containers from companies like Docker, Linux, or Kubernetes to build applications. Teams can store multiple containers on the same physical or virtual machine if they run the same operating system.

Container orchestration automates containerized applications’ provisioning, deployment, scale, and management without considering underlying infrastructure. As a result, containers speed up and simplify DevOps processes while increasing operational consistency and infrastructure stability.

Improving security with containers: Containers offer multiple security benefits. They are ephemeral devices: quickly created and frequently replaced. They also isolate applications and have integrated security capabilities. However, given their increased adoption, containers are also a top target for hackers. As a result, teams should follow the National Institute of Standards and Technology’s recommendations for improving container security. These recommendations include using container-specific host OSs; grouping containers with the same purpose, sensitivity, and threat posture together on a single host OS kernel; adopting container-specific vulnerability management tools and processes; adopting hardware-based countermeasures; and using container-aware runtime defense tools.

Harnessing microservices: Using microservices is a software engineering approach that breaks down applications into small, independent services that communicate via an interface and lightweight APIs. Microservices are loosely coupled but can be independently deployed. DevOps teams use containers and microservices to speed software development, testing, and deployment cycles.

Data Center Energy Efficiency and Environmental Considerations

Data centers consume 1% of the world’s electricity. However, as more companies adopt AI, the electricity required to power global data centers could increase by 50% by 2027 for this advanced technology alone.

Data center owners and operators can use multiple strategies to improve energy efficiency, reducing consumption and costs. These strategies include:

- Implementing green data center designs: Green data center design is a broad term encompassing environmentally friendly processes and materials. Teams can “green” data center deployment using PFMs to gain essential capacity, eliminating the need to overbuild facilities and power and cool little-used equipment. They can choose which material they use to construct data centers — from mass-timber-framed PFMs to low-carbon concrete for traditionally built facilities. They can also use specialty carpets and paints to reduce carbon emissions further.

When deploying or upgrading equipment, teams can use their CMDBs to identify little-used servers and other technology — and decommission them. They can also deploy energy-efficient servers; virtualize servers, storage, and other resources; and recycle electronic waste. - Leveraging sustainability insights: Leading CMDBs automatically discover all IT assets that consume power. Data center teams can use this information to benchmark power usage, identifying inefficiencies that can be addressed to reduce energy usage. In addition, these CMDBs display carbon emissions by business application, customer, vendor, and physical location, enabling teams to make strategic decisions about their business.

With Device42’s sustainability insights customers have been able to reduce power costs and their carbon footprint by 30% on average. They can funnel cost savings back into the business, while sharing sustainability gains with key stakeholders.

- Using more renewable energy: Data center operators can improve the environmental sustainability of operations by using more renewable energy, such as solar or wind power. Strategies include using battery energy storage systems to create an always-on source of backup power or entering into physical or virtual power purchase agreements (PPAs) to build renewable energy systems onsite or access them from a third-party provider.

- Harnessing innovative cooling systems to scale: Most data centers rely on air cooling — although that is changing with AI demand.

Data center teams can optimize air cooling by implementing computer room air conditioners, hot and cold aisles, energy-efficient heat pumps, and evaporative cooling.

Many companies will implement direct-to-chip liquid cooling systems to handle the higher heat loads that AI workloads produce. However, other approaches include immersion cooling systems in data centers or even data centers that are built completely underwater, like Microsoft’s Northern Isles data center.

- Improving power usage effectiveness (PUE) metrics: PUE is a commonly used industry metric that quantifies power efficiency by dividing the total power consumption of a data center by the energy its IT equipment consumes. The average PUE metric for data centers is 1.58, but leading hyperscalers can achieve values much closer to 1.0, an ideal score.

Learn more:

Data Center Carbon Footprint: Concepts and Metrics

How to Make Your Data Center More Energy-Efficient

Maximize Data Center Energy Efficiency By Calculating and Improving Power Usage Effectiveness (PUE)

How Data Centers Can Use Renewable Energy to Increase Sustainability and Reduce Costs

Increasing Data Center Energy Sustainability: Short- and Long-Term Strategies

Enabling Performance Monitoring and Management

Performance monitoring and management tools enable small teams to scale to support global networks. That’s especially important as more companies operate mission-critical businesses requiring high uptime and exceptional performance of applications. Being able to identify and address anomalies proactively enables teams to prevent unplanned downtime and improve service quality over time.

Doing effective capacity planning: These tools also enable users to review infrastructure and bandwidth performance so that they can plan future capacity proactively. Teams can use tools such as discovery and dependency mapping to depict all physical and logical components, their relationships, and interdependencies, along with demand metrics, to plan growth. This data can reveal a need for adding new devices to address performance bottlenecks, applications that are good candidates for cloud migration, and more.

Harnessing Automation for Scaling

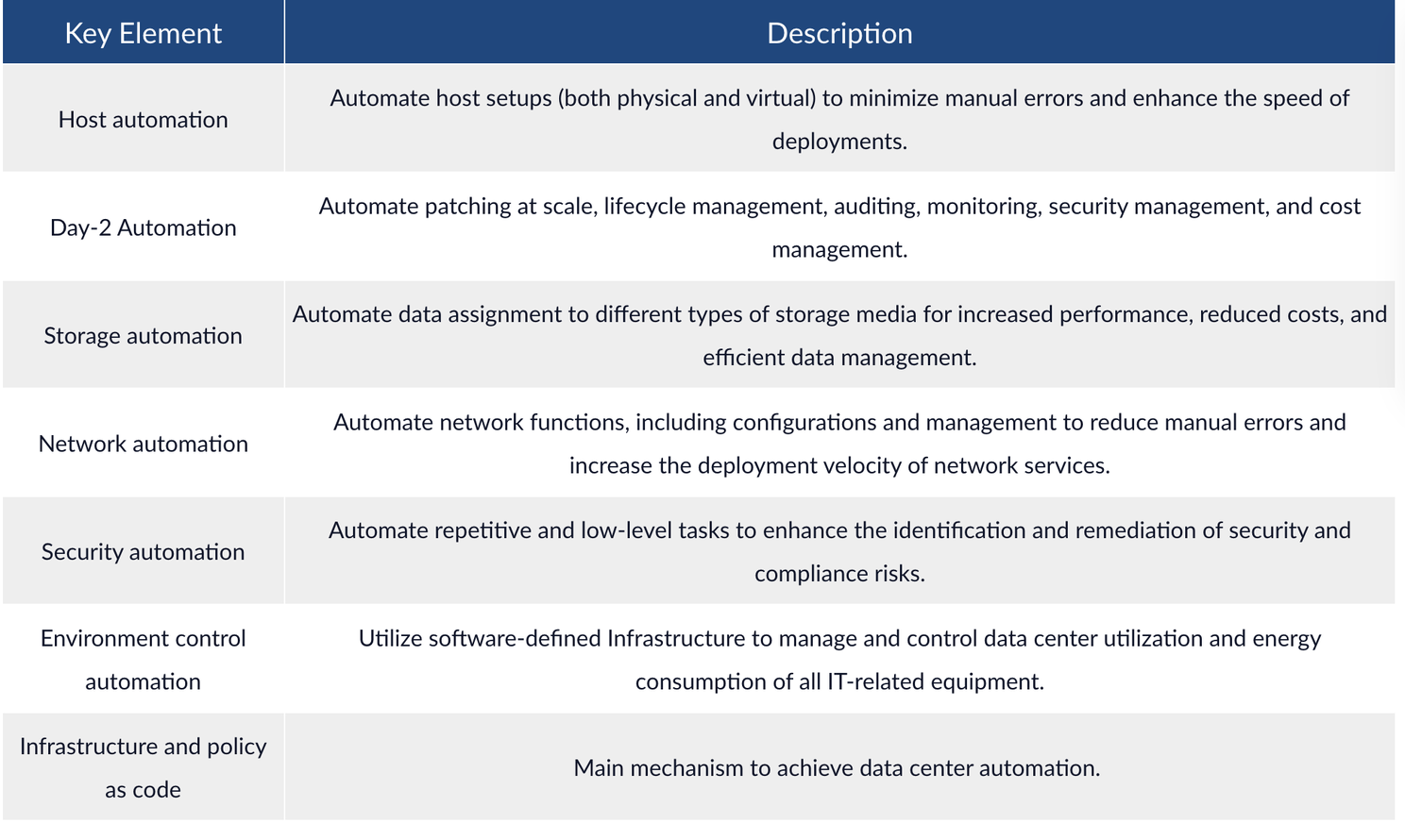

Automation is a boon to busy data center and IT teams, enabling them to streamline routine tasks while aligning processes to business policies and improving consistency and control. Practices include:

Using Infrastructure as Code (IaC) Practices: Teams that use IaC leverage code and automation to manage and provision infrastructure instead of doing these tasks manually. They follow standards and best practices to create configuration files with key specifications, ensuring processes are followed consistently across jobs. IaC breaks down infrastructure into modular components that are easier to manage with automation, which they can do with tools like the Red Hat® Ansible® Automation Platform. By using version controls, testing, and deploying code, teams improve infrastructure stability while streamlining key processes.

Leveraging automation for routine operational processes: As this image from CloudBolt indicates, data center teams can automate a wide range of processes, including host setups, patching, data assignment, network functions, security processes, and environment controls.

Source: CloudBolt

Using automated cloud scaling policies: Just like the public cloud, private cloud and on-premises workloads enable automated scaling, adding or subtracting computing, memory, and networking resources. This capability improves enterprise response to demand changes.

Autoscaling enables companies to easily support growth or respond to demand spikes with burstable capacity. Teams can control how much capacity is added by establishing baselines and setting maximum limits. Autoscaling improves application performance and service quality, and capacity can be added on a reactive, predictive, or scheduled basis. With predictive analytics, cloud services use AI and machine learning to determine when more capacity will be needed and then proactively provide it.

Read:

CMDB in the World of Infrastructure as Code

Building Security into Scalable Data Center Designs

Whether capacity is at the core, cloud, edge, or in between, data center teams want to protect systems and data from unauthorized access. Best practices for ensuring data center security include:

- Addressing compliance considerations: Enterprises must meet rising customer, industry, and regional requirements and regulations to ensure data privacy and security. They can use dependency mapping to depict data flows, such as how data is transmitted, stored, and used to ensure processes adhere to key regulations. CMDBs automate reporting and provide auditable processes that IT teams can use to report to internal compliance and audit teams and external regulators.

- Using access controls: Enterprises use access controls, such as least privilege granted and role-based controls that direct what users can do. For example, role-based controls can dictate which types of devices users can view, access, and change and whether that access is global, regional, or via a business unit.

- Encrypting all data: Data should be encrypted, whether it is at rest or in transit. ISACA recommends that applications and databases be compatible with the latest Secure Sockets Layer/Transport Layer (SSL/TLS) methods. In addition, enterprises should keep SSL certificates up-to-date and change encryption keys periodically.

Leading hyperscalers publish their advanced data encryption policies to demonstrate how they protect customer data. For example, Google encrypts data before it is stored and then encrypts it at the distributed file system, database, and file storage; hardware; and infrastructure layers. The company breaks data into subfile chunks for storage, encrypts each chunk at the storage level with an individual data encryption key, and updates keys when data chunks are updated.

Explore:

Implementing and Managing a CMDB: Security and Compliance Considerations

Use These Strategies to Plan for Scalable Data Center Designs and Operations

Enterprises today are driven by data, cloud services, and increasingly AI and machine learning models. As a result, they’re becoming even more reliant on data center capacity to enable digital business processes, fuel growth and ensure operational stability.

By using the strategies and best practices outlined in this article, teams can create innovative data center designs and automate and standardize key processes. With cost-efficient, effective processes that scale to meet new business demands, data center teams contribute to revenue growth while reducing operational costs over time.

The Device42 solution provides integrated CMDB, data center infrastructure management (DCIM), IT asset management (ITAM), IP asset management (IPAM), and SSL certificate and software licensing management capabilities. By deepening visibility into all hybrid cloud assets, their changes and configurations, their dependencies, power consumption, carbon footprint, and licensing costs, teams can make the best decisions on how to operate, grow, and transform enterprise infrastructures.