Threat and Vulnerability Management Best Practices

Threat and vulnerability management is no longer optional but a core part of any serious security program. Whether the issue is a misconfigured cloud service or an unpatched legacy system, risks evolve quickly, often faster than security teams can respond.

Many organizations still treat vulnerability management as a reactive exercise, moving from one scan to the next without a clear plan. This approach may have worked in more predictable environments, but it falls apart when faced with the complexity of today’s hybrid infrastructures, fast-paced development cycles, and an ever-expanding threat landscape.

This article is for cybersecurity professionals aiming to strengthen their vulnerability management approaches. It focuses on practical strategies such as automating asset discovery, maintaining an accurate configuration management database (CMDB), classifying assets by business criticality, prioritizing vulnerabilities by real impact, and building consistent workflows. These practices reflect real-world experience and align with frameworks including NIST SP 800-40, ISO/IEC 27001, and the Essential Eight. While advanced tactics like red teaming play an important role, this article focuses on visibility, context, and control as the foundation of an effective vulnerability management program.

Summary of threat and vulnerability management best practices

| Best practice | Description |
|---|---|
| Automate asset discovery | Automate discovery processes to ensure continuous visibility and eliminate blind spots that hinder remediation. |
| Maintain an effective CMDB | Maintain an accurate CMDB to map vulnerabilities to impacted assets and dataflows, improving prioritization and reducing containment time. |
| Classify assets by criticality | To align remediation with risk and compliance goals, classify systems based on business impact and sensitivity. |
| Prioritize vulnerabilities by context | Go beyond the Common Vulnerability Scoring System (CVSS) by including exploitability, exposure, and asset value in the prioritization process. |
| Establish a repeatable workflow | Build a consistent vulnerability lifecycle to manage risk and maintain accountability across teams. |
| Validate patches before rollout | Test patches before wide deployment to prevent outages and ensure that remediation is both safe and effective. |

Automate asset discovery

Assets are no longer confined to static data centers. They extend across cloud accounts, container platforms, remote endpoints, and even unmanaged IoT devices. In most organizations today, asset sprawl is the norm rather than the exception.

Without automated discovery, many of these assets remain invisible to the security team. Developers may spin up temporary workloads for testing, contractors might connect personal laptops to corporate networks, and infrastructure-as-code pipelines may deploy services that never get properly registered.

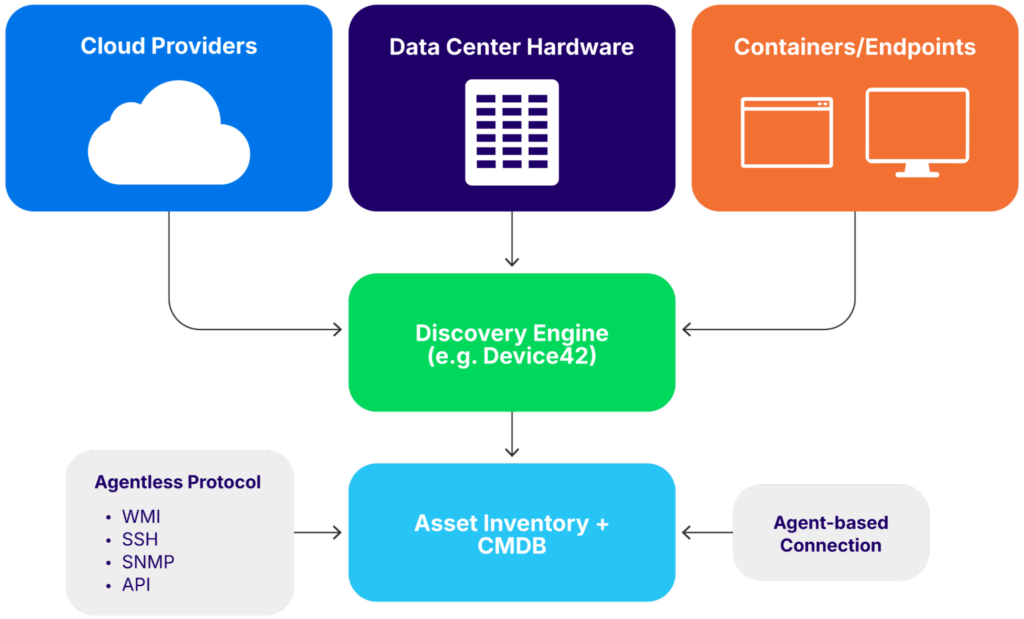

The solution begins with automated, continuous discovery, which ensures that any asset—whether physical, virtual, or cloud-native—is identified and tracked from the moment it becomes active. There are two main approaches to this.

Agentless discovery relies on protocols such as WMI, SSH, SNMP, and APIs to gather data remotely. It is easy to deploy and does not require an agent to be installed on endpoints, but it may miss assets in locked-down environments or behind firewalls.

Agent-based discovery involves installing lightweight software on each asset. This method provides deep insights into configuration, software versions, running processes, and network activity. However, it introduces more operational overhead, particularly in diverse or remote environments.

The most effective strategy often combines both. Use agentless methods for broad visibility and agents where precision is required, such as on critical servers or laptops that leave the office network.
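
As a rough illustration of the hybrid approach, the Python sketch below merges an agentless feed with an agent-based one into a single inventory. The `Asset` fields, the choice to key records by IP address (real tools usually correlate on more stable identifiers), and the rule that agent data overrides agentless data on conflict are all assumptions for this example, not the schema of any particular discovery product.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Asset:
    """A discovered asset. Field names are illustrative, not a product schema."""
    ip: str
    hostname: str | None = None
    os: str | None = None
    software: dict[str, str] = field(default_factory=dict)  # package -> version
    sources: set[str] = field(default_factory=set)           # which methods saw it


def merge_inventories(agentless: list[Asset], agent_based: list[Asset]) -> dict[str, Asset]:
    """Merge two discovery feeds into one inventory keyed by IP address.

    Agentless scans provide breadth; agent data, collected on the host itself,
    overrides agentless values where both exist (an assumption of this sketch).
    """
    merged: dict[str, Asset] = {}
    for a in agentless:
        merged[a.ip] = Asset(a.ip, a.hostname, a.os, dict(a.software), {"agentless"})
    for a in agent_based:
        # Hosts that only an agent reports (e.g. off-network laptops) are added too.
        entry = merged.setdefault(a.ip, Asset(a.ip))
        entry.hostname = a.hostname or entry.hostname
        entry.os = a.os or entry.os
        entry.software.update(a.software)
        entry.sources.add("agent")
    return merged
```

The `sources` set makes coverage gaps visible: an asset seen only by agentless scanning is a candidate for agent deployment if it turns out to be critical.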

Hybrid asset discovery in modern environments

Asset discovery solutions that support hybrid methods can integrate with cloud providers, support both agentless and agent-based approaches, and enable continuous asset tracking across dynamic environments. Solutions like Device42 that support automated, application-aware discovery are particularly effective when embedded into provisioning workflows and CI/CD pipelines to ensure that all assets are consistently tracked and accounted for from the moment they are deployed.

Maintain an effective CMDB of discovered assets

Asset discovery provides visibility, but a configuration management database (CMDB) transforms that visibility into actionable understanding. An effective CMDB not only tracks what assets exist but also maps how they are connected, who owns them, and what business functions they support.

Unfortunately, many CMDBs fail because they are treated as static documentation. Without integration with discovery tools and operational systems, they quickly become out of date. When that happens, teams end up making security decisions based on incomplete or inaccurate information.
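
Keeping the CMDB current largely comes down to reconciling it against discovery results on a schedule. The minimal sketch below assumes discovery can export a last-seen timestamp per asset ID and flags two kinds of drift: assets that discovery found but the CMDB does not know about, and CMDB records that discovery has not observed recently. The seven-day staleness threshold is an arbitrary placeholder.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone


def reconcile(
    last_seen: dict[str, datetime],
    cmdb_ids: set[str],
    now: datetime | None = None,
    stale_after: timedelta = timedelta(days=7),
) -> tuple[set[str], set[str]]:
    """Compare discovery results with CMDB records.

    last_seen: asset ID -> when discovery last observed the asset (timezone-aware)
    cmdb_ids:  asset IDs that currently have a CMDB record
    Returns (unregistered, stale):
      unregistered: seen by discovery but missing from the CMDB
      stale:        in the CMDB but not seen within stale_after (or never seen)
    """
    now = now or datetime.now(timezone.utc)
    unregistered = set(last_seen) - cmdb_ids
    stale = {
        asset_id
        for asset_id in cmdb_ids
        if asset_id not in last_seen or now - last_seen[asset_id] > stale_after
    }
    return unregistered, stale
```

Unregistered assets would typically open a registration task, while stale records prompt a check on whether the asset was decommissioned or simply fell out of discovery scope.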

A CMDB must be dynamic, accurate, and deeply integrated into both IT operations and security workflows. It should reflect changes in real time, not weeks or months later. This matters most in vulnerability management, where teams need to know which systems are affected, how those systems relate to others, and what business impact an issue might have.

For example, identifying a vulnerability on a Linux server is only part of the picture. It is also important to know if the server runs a customer-facing application, sits behind a load balancer, or stores regulated data. This context is what turns a vulnerability from a low-priority ticket into a risk that requires immediate attention.
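
The relationship data is what makes this context queryable. A minimal sketch, assuming the CMDB can export a hypothetical `depends_on` map (each configuration item listed with the items it depends on), walks the dependency graph upward from a vulnerable server to find every business service that relies on it, directly or transitively:

```python
from __future__ import annotations

from collections import deque


def impacted_services(
    vulnerable_ci: str,
    depends_on: dict[str, list[str]],
    business_services: set[str],
) -> set[str]:
    """Return business services that directly or transitively depend on vulnerable_ci."""
    # Invert the edges: for each CI, which CIs depend on it?
    dependents: dict[str, list[str]] = {}
    for ci, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(ci)

    # Breadth-first search from the vulnerable CI toward the services above it.
    seen = {vulnerable_ci}
    queue = deque([vulnerable_ci])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen & business_services
```

For instance, if a customer portal depends on a checkout application that runs on `web01`, a finding on `web01` surfaces the customer portal as impacted, while a finding on an isolated test VM returns an empty set.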

Look for CMDB platforms that combine application-aware discovery with automatic population of CMDB records, require minimal configuration, and integrate cleanly with existing operational workflows.

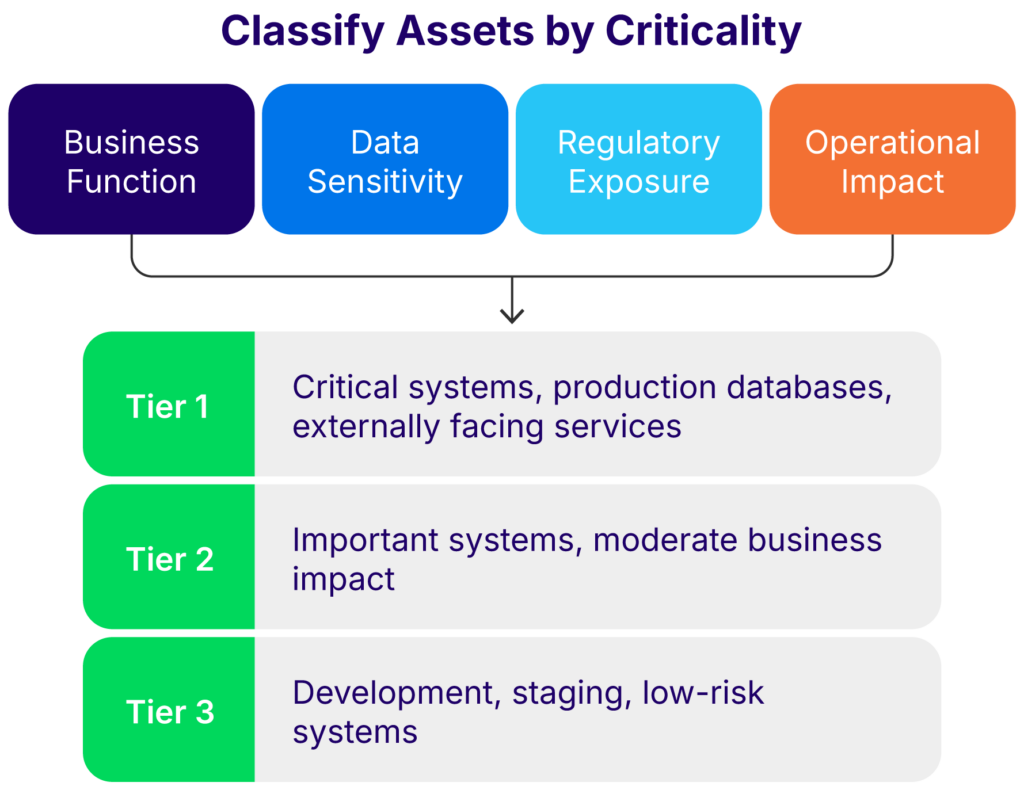

Classify assets by criticality

Not every asset requires the same level of attention. Some systems store sensitive data, others support critical business processes, and some are limited to non-production test environments. Knowing which assets matter most is essential for prioritizing effort and reducing risk effectively.

Asset classification means assigning each asset a risk profile based on criteria such as:

- Business function

- Data sensitivity

- Regulatory exposure

- Operational impact

A common approach is a three-tier model:

- Tier 1: Critical systems, including production databases, financial platforms, and externally facing services

- Tier 2: Important systems with moderate business impact or sensitive internal access

- Tier 3: Development, staging, or low-risk infrastructure
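
One way to make tier assignment repeatable is to encode the criteria as explicit rules. The sketch below is illustrative only: the `AssetProfile` fields and the specific rules are assumptions, and a real policy would come from your own risk and compliance requirements.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AssetProfile:
    # Hypothetical attributes mirroring the classification criteria above.
    environment: str                 # "production", "staging", or "development"
    internet_facing: bool
    data_sensitivity: str            # "public", "internal", "confidential", "regulated"
    in_compliance_scope: bool        # e.g. systems in scope for an audit regime
    supports_critical_process: bool  # e.g. payments or order processing


def classify(p: AssetProfile) -> int:
    """Return 1 (critical), 2 (important), or 3 (low risk). Rules are illustrative."""
    if p.environment != "production":
        # Assumes non-production systems hold no production data; adjust if they do.
        return 3
    if (p.internet_facing or p.in_compliance_scope
            or p.data_sensitivity == "regulated" or p.supports_critical_process):
        return 1
    if p.data_sensitivity == "confidential":
        return 2
    return 3
```

Keeping the rules in code (or in equivalent policy data) means every tier decision can be traced back to a specific criterion when an auditor asks why an asset was classified the way it was.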

Classifying assets helps align the vulnerability management process with compliance requirements and business risk tolerance. It guides decisions about patch urgency, exception handling, risk acceptance, and change approval workflows.

For example, a vulnerability in a Tier 1 asset might trigger a same-day remediation, complete with change control and validation. A similar vulnerability in a Tier 3 asset might be addressed during the next scheduled maintenance cycle.
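
Tier-based timelines like these are easy to express as policy data. In this sketch the remediation windows (24 hours, 14 days, and 30 days) are hypothetical placeholders, not values drawn from any framework:

```python
from __future__ import annotations

from datetime import datetime, timedelta

# Hypothetical policy: remediation window per asset tier.
REMEDIATION_WINDOW = {
    1: timedelta(hours=24),  # critical: roughly same-day, with change control
    2: timedelta(days=14),   # important
    3: timedelta(days=30),   # low risk: next scheduled maintenance cycle
}


def remediation_due(tier: int, detected_at: datetime) -> datetime:
    """Deadline for fixing a finding on an asset of the given tier."""
    return detected_at + REMEDIATION_WINDOW[tier]


def is_overdue(tier: int, detected_at: datetime, now: datetime) -> bool:
    """True once the tier's remediation window has elapsed."""
    return now > remediation_due(tier, detected_at)
```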

Aligning asset classification with business and compliance priorities helps you avoid spending effort on low-impact issues and reduces the risk of incidents that could trigger regulatory penalties or damage customer trust.

Prioritize vulnerabilities by context

CVSS scores are a helpful starting point, but they shouldn’t be the final word on risk. Too often, organizations focus on high-severity scores while overlooking lower-severity issues that pose greater real-world threats.

Contextual prioritization considers additional factors such as:

- Exploit availability and maturity

- Asset exposure (internal vs. internet-facing)

- Business criticality

- Data classification

- Compliance scope

For example, a known-exploited vulnerability with a CVSS base score of 6.5 on a public-facing authentication server could pose a greater risk than a 9.8-rated vulnerability on a retired internal file server.
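
A simple way to see how context changes the ranking is to scale the CVSS base score by contextual factors. The multipliers below are arbitrary assumptions chosen for illustration, not a published scoring model; the point is only that a 6.5 known-exploited finding on an internet-facing Tier 1 asset ends up ranked above a 9.8 finding on an internal asset treated here as Tier 3.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Finding:
    cve: str
    cvss: float            # CVSS base score, 0.0 to 10.0
    known_exploited: bool  # e.g. listed in the CISA KEV catalog
    internet_facing: bool
    asset_tier: int        # 1 = critical, 2 = important, 3 = low risk


# Arbitrary illustrative weights; calibrate against your own risk model.
KEV_MULTIPLIER = 2.0
EXPOSURE_MULTIPLIER = {True: 1.5, False: 0.8}
TIER_MULTIPLIER = {1: 1.5, 2: 1.0, 3: 0.5}


def contextual_score(f: Finding) -> float:
    """Scale the CVSS base score by exploitation status, exposure, and asset tier."""
    score = f.cvss
    if f.known_exploited:
        score *= KEV_MULTIPLIER
    score *= EXPOSURE_MULTIPLIER[f.internet_facing]
    score *= TIER_MULTIPLIER[f.asset_tier]
    return round(score, 2)
```

With these weights, the authentication-server finding scores 29.25 and the file-server finding 3.92, so `sorted(findings, key=contextual_score, reverse=True)` puts the former first. Multiplicative weights are easy to explain to stakeholders, though they can over-amplify when several factors stack.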

Several tools support this kind of contextual analysis. CISA’s Known Exploited Vulnerabilities (KEV) catalog is a reliable starting point. The Exploit Prediction Scoring System (EPSS) provides probabilistic estimates of exploitation likelihood. Threat intelligence platforms also add value by surfacing relevant indicators and risk scores.
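
Both the KEV catalog and EPSS are available in machine-readable form. The sketch below assumes the KEV JSON feed and the FIRST EPSS API keep the structure they had at the time of writing (a top-level `vulnerabilities` list with a `cveID` field for KEV; a `data` list with `cve` and string-valued `epss` fields for EPSS); verify both URLs and formats against the current documentation before relying on them. Parsing is kept separate from fetching so the enrichment logic can be exercised without network access.

```python
from __future__ import annotations

import json
import urllib.request

# Locations as publicly documented at the time of writing; verify before use.
KEV_URL = "https://www.cisa.gov/sites/default/files/feeds/known_exploited_vulnerabilities.json"
EPSS_URL = "https://api.first.org/data/v1/epss?cve={cves}"


def parse_kev(raw: str) -> set[str]:
    """CVE IDs from the KEV feed (assumed: "vulnerabilities" list with "cveID" keys)."""
    return {item["cveID"] for item in json.loads(raw)["vulnerabilities"]}


def parse_epss(raw: str) -> dict[str, float]:
    """CVE ID -> EPSS probability (assumed: scores returned as strings under "data")."""
    return {row["cve"]: float(row["epss"]) for row in json.loads(raw)["data"]}


def enrich(cves: list[str], kev_raw: str, epss_raw: str) -> list[dict]:
    """Attach KEV membership and EPSS score (None if EPSS has no entry) to each CVE."""
    kev = parse_kev(kev_raw)
    epss = parse_epss(epss_raw)
    return [{"cve": c, "in_kev": c in kev, "epss": epss.get(c)} for c in cves]


def fetch(url: str) -> str:
    with urllib.request.urlopen(url, timeout=30) as resp:
        return resp.read().decode("utf-8")


def main(cves: list[str]) -> None:
    rows = enrich(cves, fetch(KEV_URL), fetch(EPSS_URL.format(cves=",".join(cves))))
    # KEV-listed CVEs first, then by descending EPSS probability.
    rows.sort(key=lambda r: (r["in_kev"], r["epss"] or 0.0), reverse=True)
    for row in rows:
        print(row)
```

Calling `main()` with a list of CVE IDs from your scanner output fetches both sources and prints the findings in that order. A missing EPSS entry is reported as `None` rather than zero, because "no score" is not the same as "no likelihood."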

Contextual prioritization can be implemented manually through well-defined playbooks or automated using risk-based vulnerability management tools that incorporate exploitability, asset exposure, and business impact into scoring.

The goal is to direct limited resources toward the vulnerabilities that truly matter. This approach improves remediation efficiency, reduces alert fatigue, and supports clear justification of prioritization decisions for auditors and stakeholders.

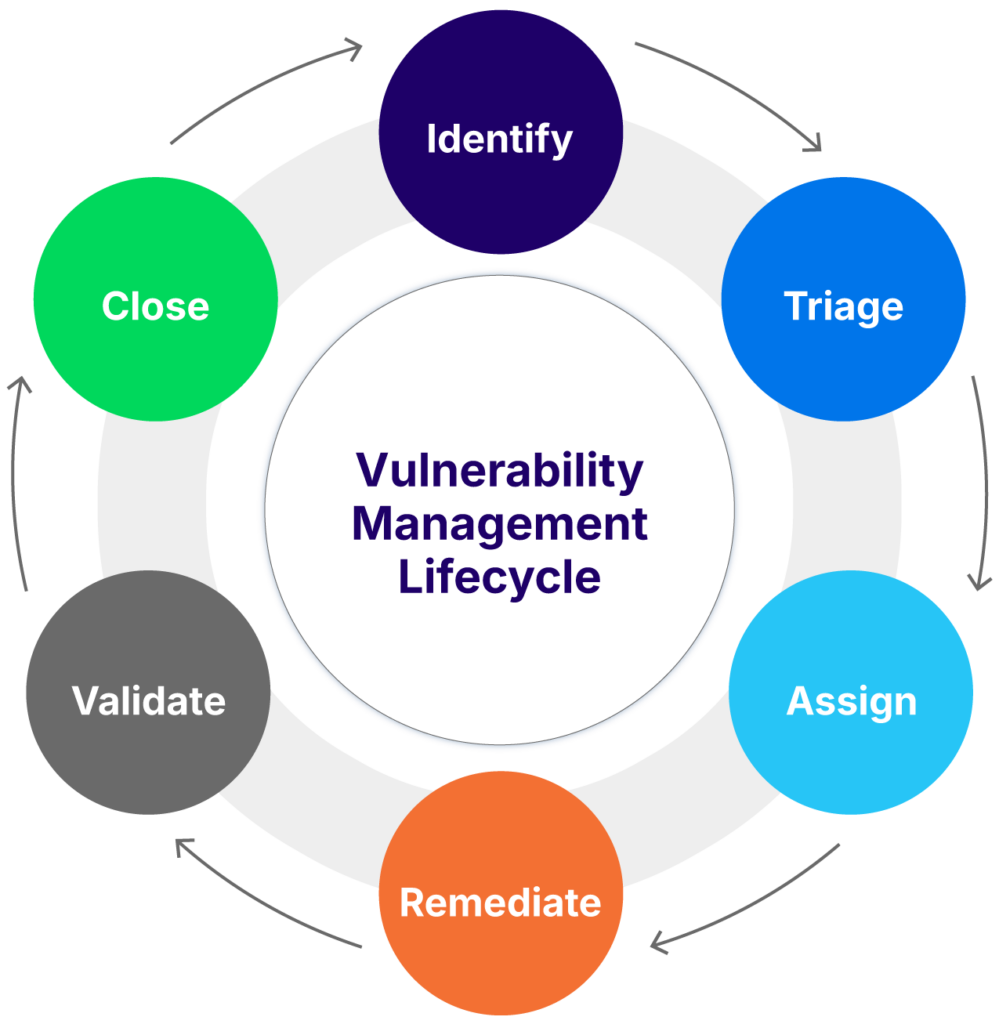

Establish a repeatable workflow

Without a consistent process, even the best tools and data lose effectiveness. Vulnerability management should follow a well-defined lifecycle that spans identification, analysis, remediation, validation, and closure.

A repeatable workflow might include these steps:

- Identify: Use scanners, threat intelligence, and external sources to detect vulnerabilities.

- Triage: Validate findings, eliminate false positives, and assess severity.

- Assign: Route issues to the appropriate owners based on the CMDB.

- Remediate: Apply patches, configuration changes, or other corrective actions.

- Validate: Confirm that the fix is effective and does not introduce new issues.

- Close: Document the outcome, update records, and track metrics.
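
The lifecycle above can be modeled as a small state machine so that findings cannot skip validation or reach assignment without an owner. In this sketch the stage names mirror the list, the allowed transitions (including sending a finding back to its owner when validation fails) are assumptions, and `cmdb_owners` stands in for an owner lookup against the CMDB. The fallback queue name is hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    IDENTIFIED = "identified"
    TRIAGED = "triaged"
    ASSIGNED = "assigned"
    REMEDIATED = "remediated"
    VALIDATED = "validated"
    CLOSED = "closed"
    FALSE_POSITIVE = "false_positive"


# Allowed transitions; sending a failed validation back to ASSIGNED is an assumption.
ALLOWED: dict[Stage, set[Stage]] = {
    Stage.IDENTIFIED: {Stage.TRIAGED},
    Stage.TRIAGED: {Stage.ASSIGNED, Stage.FALSE_POSITIVE},
    Stage.ASSIGNED: {Stage.REMEDIATED},
    Stage.REMEDIATED: {Stage.VALIDATED, Stage.ASSIGNED},
    Stage.VALIDATED: {Stage.CLOSED},
    Stage.FALSE_POSITIVE: {Stage.CLOSED},
    Stage.CLOSED: set(),
}


@dataclass
class Finding:
    finding_id: str
    asset_id: str
    stage: Stage = Stage.IDENTIFIED
    owner: str | None = None
    history: list[Stage] = field(default_factory=lambda: [Stage.IDENTIFIED])

    def advance(self, new_stage: Stage) -> None:
        """Move to new_stage, refusing transitions the workflow does not allow."""
        if new_stage not in ALLOWED[self.stage]:
            raise ValueError(f"cannot move from {self.stage.value} to {new_stage.value}")
        self.stage = new_stage
        self.history.append(new_stage)


def assign(finding: Finding, cmdb_owners: dict[str, str]) -> None:
    """Route to the owner recorded in the CMDB, or to a hypothetical fallback queue."""
    owner = cmdb_owners.get(finding.asset_id, "unowned-assets-queue")
    finding.advance(Stage.ASSIGNED)  # raises before any change if not allowed
    finding.owner = owner
```

The recorded `history` doubles as an audit trail, and findings routed to the fallback queue point directly at gaps in CMDB ownership data.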

This workflow should integrate with IT service management platforms to support accountability, enable progress tracking, and align remediation with change control and compliance requirements.

Maintaining a consistent workflow across teams strengthens coordination between security and IT operations, minimizes delays, and ensures that critical vulnerabilities are addressed promptly. It also simplifies audit readiness and enables continuous improvement by making performance easier to measure and refine.
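
Once findings flow through a consistent workflow, basic performance metrics fall out of the recorded timestamps. A minimal sketch, assuming each closed finding records its detection time, closure time, and the remediation window that applied to it, computes mean time to remediate (MTTR) and the share of findings closed within their window:

```python
from __future__ import annotations

from datetime import datetime, timedelta


def remediation_metrics(closed: list[tuple[datetime, datetime, timedelta]]) -> dict[str, float]:
    """Compute mean time to remediate (in days) and SLA compliance rate.

    closed: one (detected_at, closed_at, sla_window) tuple per closed finding.
    """
    if not closed:
        raise ValueError("no closed findings to measure")
    durations = [closed_at - detected_at for detected_at, closed_at, _ in closed]
    within_sla = sum(1 for d, (_, _, window) in zip(durations, closed) if d <= window)
    mttr_days = sum(d.total_seconds() for d in durations) / len(durations) / 86400
    return {"mttr_days": mttr_days, "sla_compliance": within_sla / len(closed)}
```

Tracking these per tier or per owning team is usually more informative than a single organization-wide number.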

Validate patches before rollout

Pushing patches into production without proper validation is a frequent cause of avoidable outages. Even well-tested vendor patches can introduce compatibility issues, break application functionality, or impact system performance.

Validation is especially critical for systems that are customer-facing or handle sensitive data. Before deployment, patches should be tested in a staging environment that closely replicates production. Monitor for regressions, unexpected behavior, or performance issues during testing.

Whenever possible, use a phased rollout strategy. Begin with a small group of low-risk systems, monitor the results, and expand deployment gradually. Always have a rollback plan in place in case something goes wrong.
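
A phased rollout with an automatic rollback gate might look like the sketch below. `apply_patch`, `rollback`, and `healthy` are hypothetical callbacks standing in for your patch tooling and monitoring, and rolling back every host patched so far (rather than only the failing ring) is a deliberately conservative assumption.

```python
from __future__ import annotations

from typing import Callable, Sequence


def phased_rollout(
    rings: Sequence[Sequence[str]],
    apply_patch: Callable[[str], None],
    rollback: Callable[[str], None],
    healthy: Callable[[str], bool],
) -> tuple[bool, list[str]]:
    """Patch hosts ring by ring, starting with the smallest, lowest-risk ring.

    After each ring, every host in it must pass the health check before the
    next ring starts. On any failure, every host patched so far is rolled back
    in reverse order and the rollout stops.
    Returns (succeeded, hosts left patched).
    """
    patched: list[str] = []
    for ring in rings:
        for host in ring:
            apply_patch(host)
            patched.append(host)
        if not all(healthy(host) for host in ring):
            for host in reversed(patched):
                rollback(host)
            return False, []
    return True, patched
```

In practice the health check would wrap a monitoring query over a soak period rather than an instant probe, and the first ring would mirror the staging environment as closely as possible.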

In February 2025, a DNS misconfiguration during an IPv6 infrastructure update in Microsoft Entra ID (formerly Azure Active Directory) resulted in the accidental removal of critical CNAME records used by seamless single sign-on (SSO). This change led to a global authentication outage lasting over 90 minutes and impacted multiple Microsoft services, including Azure SQL Database, Azure DevOps, and Azure OpenAI. Although not caused by a patch, the incident illustrates how insufficient validation of configuration changes in identity systems can introduce widespread operational risk.

Validation reduces the risk of operational disruption, improves trust between teams, and ensures that remediation actions address the intended risks without creating new ones.

Conclusion

Threat and vulnerability management is not limited to scanning and patching. It involves building a system that provides visibility into the environment, highlights what matters most, and enables actions that meaningfully reduce risk.

These practices help shift vulnerability management from reactive responses to strategic risk management. A consistent workflow, supported by proper validation, ensures that remediation efforts are effective and sustainable. The ultimate goal is operational clarity. Knowing what assets exist, why they matter, and how vulnerabilities affect them enables faster, smarter, and more defensible decisions.

Effective security is not just about having the right tools. It requires building strong processes, empowering capable teams, and fostering a shared commitment to protect what matters most.