Best Practices for Asset Tagging in Vulnerability Scanners

Asset tagging is a foundational practice that often determines whether a vulnerability management program will scale with precision or collapse under unmanaged complexity. In the context of vulnerability scanners, asset tagging refers to the consistent application of metadata to assets, enabling better context, filtering, triage, and automation. Without a robust tagging strategy, even the most powerful scanning tools produce fragmented, noisy results that frustrate engineers and delay remediation.

Tagging has evolved significantly over the past decade. What started as a Configuration Management Database (CMDB) hygiene activity has become a critical enabler for automation, policy enforcement, and risk-based vulnerability prioritization. In regulated environments such as government, banking, and healthcare, asset tagging is also essential for compliance audits and reporting. As cloud adoption accelerates, organizations must adapt tagging practices to keep pace with dynamic infrastructure and ephemeral workloads.

This guide focuses on practical asset tagging in vulnerability scanners and other enterprise use cases. It is particularly relevant for those using continuous scanning platforms, such as Tenable, Qualys, or AWS Inspector, and for teams integrating scanner output into ticketing systems or security dashboards. It includes field-tested practices for scaling asset tagging beyond spreadsheets and toward integration with automated detection and response pipelines. Tool comparisons and CMDB implementation fundamentals are intentionally excluded, as this article assumes a baseline familiarity with scanning workflows and IT asset management.

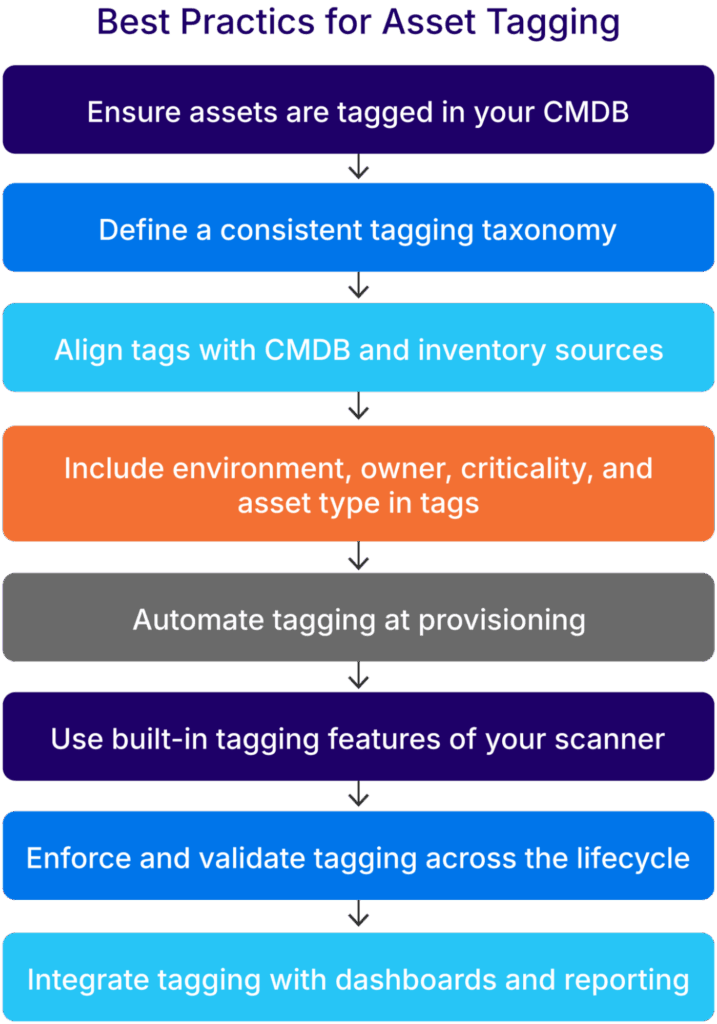

Best practices for asset tagging in vulnerability scanners

The table below summarizes the eight best practices for asset tagging in vulnerability scanners that this article will explore in detail.

| Best Practice | Summary |
|---|---|
| Ensure assets are tagged in your CMDB | Tagging in the CMDB ensures asset visibility, supports scanner correlation, and enables automated remediation workflows. |
| Define a consistent tagging taxonomy | A structured taxonomy avoids confusion and supports long-term manageability. |
| Align tags with CMDB and inventory sources | Prevent scanning mismatches and reduce false positives by syncing all sources of truth. |
| Include environment, owner, criticality, and asset type in tags | These attributes give scanners the context needed for smart triage. |
| Automate tagging during provisioning | Avoid gaps and reduce human error by embedding tags in deployment logic. |
| Use built-in tagging features of your scanner | Extend scanner capabilities by leveraging native tag-based filters and scopes. |
| Enforce and validate tagging across the lifecycle | Enforce tagging at the source through policy or automation. Once mandatory, shift audits from finding untagged assets to verifying tag accuracy and relevance. |
| Integrate asset tagging with dashboards and risk reports | Tags enable actionable reporting and better alignment between engineering and leadership. |

Ensure assets are tagged in your CMDB

A CMDB is the authoritative inventory in most enterprise environments. If assets are not properly tagged in the CMDB, vulnerability scanners cannot effectively correlate their results, leading to inconsistent reporting and remediation gaps. Accurate tagging enables:

- Asset-to-vulnerability mapping

- Ownership and accountability assignments

- Policy-driven remediation workflows

Organizations should use discovery tools (such as Device42) to enable CMDB automation, pulling in authoritative tag data and maintaining up-to-date asset metadata across environments. Tags should also flow from cloud-native metadata and IaC templates into the CMDB. One common failure point is tag fragmentation, where updates happen in cloud platforms but don’t sync downstream. A bidirectional sync process between scanners and the CMDB reduces duplication and improves visibility across teams.

For example, in many financial services environments, critical production systems are flagged during monthly patch audits but remain unremediated. An investigation reveals that the vulnerability scanner tags them as “test” based on outdated CMDB data. Once accurate tags are restored and automated sync is enabled, remediation timelines drop by 30% in the following quarter.

Define a consistent tagging taxonomy

A poorly defined tagging strategy produces inconsistent values, broken filters, and unreliable reports. Start by standardizing a baseline taxonomy across departments and cloud providers. Fields like env, owner, criticality, system_type, and data_class should be defined centrally and enforced consistently.

Avoid free-text inputs where possible. Free-form values create variation (“prod”, “production”, “Prod”) that breaks filtering logic and inflates dashboard noise. Instead, use controlled vocabularies, dropdowns, and validation checks during asset provisioning.
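As an illustration, a provisioning-time validation check can fold known variants into a single canonical value and reject anything unrecognized. This is a minimal Python sketch; the ENV_ALIASES map and normalize_env function are hypothetical names, and which variants count as aliases is a policy decision for each organization.

```python
# Hypothetical alias map: known free-text variants -> canonical env value
ENV_ALIASES = {
    "prod": "prod", "production": "prod",
    "staging": "staging", "stage": "staging",
    "test": "test",
    "dev": "dev", "development": "dev",
}


def normalize_env(raw: str) -> str:
    """Return the canonical env value; raise ValueError for unknown input."""
    key = raw.strip().lower()  # "Prod" and " prod " both become "prod"
    if key not in ENV_ALIASES:
        raise ValueError(f"Unrecognized env value: {raw!r}")
    return ENV_ALIASES[key]
```

With this in place, normalize_env("Production") returns "prod", while a value such as "prod1" or "Production!" raises an error at provisioning time instead of reaching the scanner.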

Example Taxonomy Table:

| Tag Field | Allowed Values | Purpose |
|---|---|---|
| env | dev, test, staging, prod | Separates environments |
| owner | team-abc, team-def | Assigns responsibility |
| criticality | low, medium, high, critical | Supports risk-based prioritization |
| system_type | vm, container, serverless, db | Asset classification |
| data_class | pii, financial, internal, public | Data sensitivity classification |

Example Hierarchical Tag Structure:

```text
org=enterprise-x
├── business_unit=finance
│   ├── environment=prod
│   └── owner=team-finops
├── business_unit=engineering
│   ├── environment=dev
│   └── owner=team-devops
└── business_unit=security
    ├── environment=staging
    └── owner=team-secops
```

This structure supports inheritance-based logic and easier segmentation in dashboards and reporting tools. Maintaining this taxonomy in version control or as a policy-as-code template ensures consistency across teams.
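Policy-as-code can be as simple as a versioned data file plus a validator. The Python sketch below mirrors the example taxonomy table above; the TAXONOMY dictionary and validate_tags function are hypothetical, and the sketch assumes every field is mandatory, which some teams may relax (for example, for data_class).

```python
# Hypothetical taxonomy-as-code, mirroring the example taxonomy table.
# Kept in version control so every change goes through review.
TAXONOMY = {
    "env": {"dev", "test", "staging", "prod"},
    "owner": {"team-abc", "team-def"},
    "criticality": {"low", "medium", "high", "critical"},
    "system_type": {"vm", "container", "serverless", "db"},
    "data_class": {"pii", "financial", "internal", "public"},
}


def validate_tags(tags: dict[str, str]) -> list[str]:
    """Return human-readable violations; an empty list means compliant."""
    errors = []
    for field, allowed in TAXONOMY.items():
        value = tags.get(field)
        if value is None:
            errors.append(f"missing required tag '{field}'")
        elif value not in allowed:  # exact match: "Prod" is rejected
            errors.append(f"'{field}={value}' is not an allowed value")
    return errors
```

The same file can feed provisioning checks, CI gates, and audits, so all of them enforce one definition of the taxonomy.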

Align tags with CMDB and inventory systems

With a consistent taxonomy in place, the next step is to ensure that those tags are applied consistently across systems. Misaligned tags between systems create downstream problems. It’s not uncommon to see a scanner identify an asset as “test” while the CMDB tags it as “prod”. This inconsistency leads to incorrect triage and accountability gaps.

Teams should implement routine checks to align asset metadata across platforms.

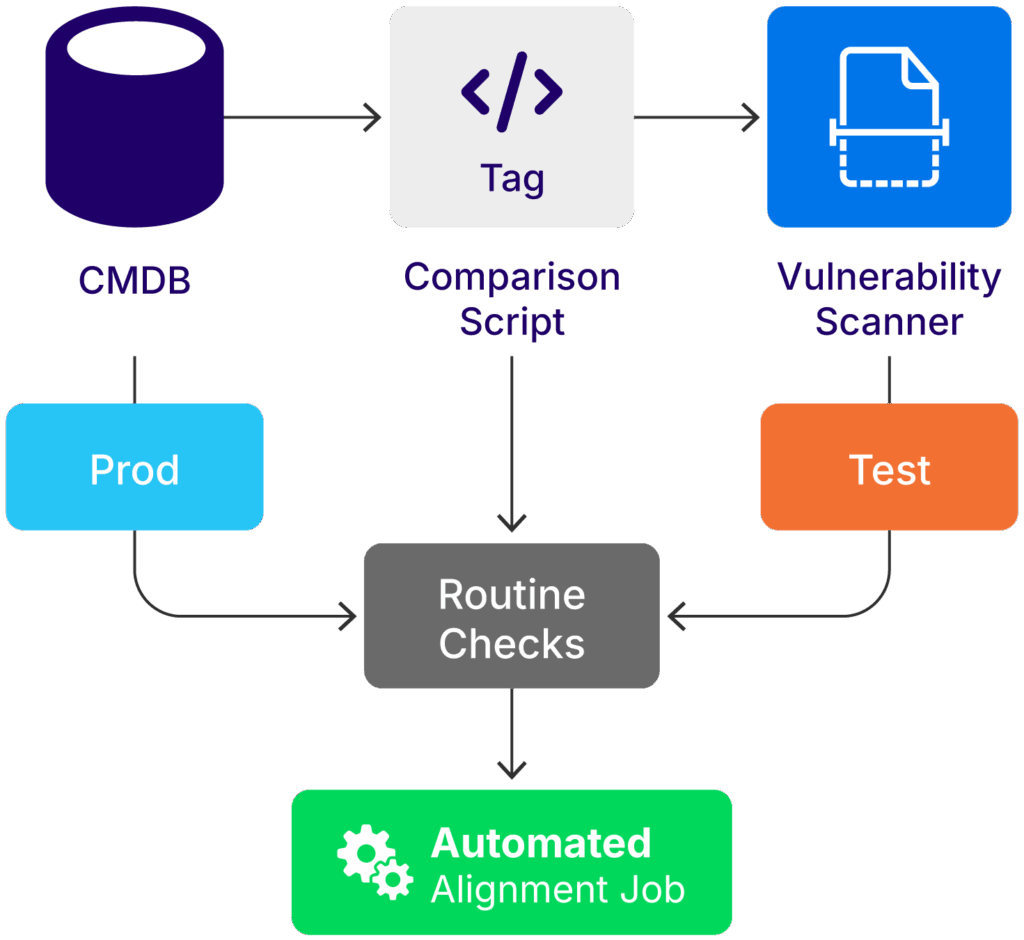

A logical overview of tag comparison between a CMDB and a vulnerability scanner (source)

Teams can schedule the execution of tag comparison scripts to detect mismatches between vulnerability scanners and inventory systems such as Device42. Generating a tag reconciliation report is especially useful during infrastructure changes or after large-scale migrations, as it helps ensure consistent classification and remediation workflows.
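A minimal sketch of such a comparison script in Python, assuming both systems can export assets as dictionaries keyed by a shared identifier such as hostname. The export format, the reconcile function, and the Mismatch record are hypothetical; real exports from Device42 or a scanner API would need a mapping step first.

```python
from typing import NamedTuple, Optional


class Mismatch(NamedTuple):
    asset: str
    field: str
    cmdb_value: Optional[str]
    scanner_value: Optional[str]


def reconcile(
    cmdb: dict[str, dict[str, str]],
    scanner: dict[str, dict[str, str]],
    fields: tuple[str, ...] = ("environment", "owner", "criticality"),
) -> list[Mismatch]:
    """Compare tag values for every asset known to either source."""
    mismatches = []
    for asset in sorted(cmdb.keys() | scanner.keys()):
        if asset not in cmdb or asset not in scanner:
            # Asset exists in only one system: report the gap itself
            mismatches.append(Mismatch(
                asset, "record",
                "present" if asset in cmdb else None,
                "present" if asset in scanner else None,
            ))
            continue
        for field in fields:
            cmdb_value = cmdb[asset].get(field)
            scanner_value = scanner[asset].get(field)
            if cmdb_value != scanner_value:  # exact match, so "Prod" != "prod"
                mismatches.append(Mismatch(asset, field, cmdb_value, scanner_value))
    return mismatches
```

Each Mismatch is one row of the reconciliation report; writing the list out with csv.writer or loading it into a dashboard is enough for a scheduled job.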

Real-world examples

During a cloud migration project at a healthcare provider, infrastructure teams discovered that over 18% of assets had conflicting environment tags between their scanner and CMDB. As a result, dozens of production servers were excluded from SLA monitoring. A weekly automated alignment job using AWS Config and the CMDB's APIs corrected discrepancies and restored operational oversight.

In another example, a government agency struggled with persistent false positives during monthly vulnerability scans. An investigation revealed that untagged assets in the scanner were still classified in the CMDB as retired systems. Once the team implemented an automated tagging sync across both tools, the volume of false positives dropped significantly, and remediation SLAs improved.

A platform compatibility matrix can also help track which fields are case-sensitive, character-limited, or support nested logic.

Once alignment is ensured, it’s equally important to select the right tag fields that deliver the most context during triage and reporting.

Include environment, owner, criticality, and asset type in tags

These four core tag fields deliver the highest operational value for vulnerability management.

- environment: Helps isolate production systems from dev/test during triage.

- owner: Enables clear assignment of remediation responsibility.

- criticality: Supports risk-based prioritization.

- asset_type: Improves filtering logic across application servers, VMs, endpoints, etc.

In a real-world scenario, a tag filter such as environment:prod AND criticality:high AND patch_age>30d can immediately surface the highest-risk assets. These tags should appear in dashboards, reports, and playbooks to streamline remediation cycles.
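Query syntax differs between scanners, so the same filter is shown here as plain Python over an exported asset list. This is a sketch under assumptions: the Asset class and the patch_age_days field (days since the last applied patch) are hypothetical stand-ins for whatever your scanner export provides.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    hostname: str
    tags: dict[str, str]
    patch_age_days: int  # hypothetical: days since the last applied patch


def highest_risk(assets: list[Asset], min_patch_age: int = 30) -> list[Asset]:
    """Equivalent of: environment:prod AND criticality:high AND patch_age>30d."""
    return [
        a for a in assets
        if a.tags.get("environment") == "prod"
        and a.tags.get("criticality") == "high"
        and a.patch_age_days > min_patch_age
    ]
```

Because the filter reads only tag values, it works unchanged for any asset type, and widening it (for example, to also include criticality=critical) is a one-line change.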

Consistent use of these four fields helps structure scanning policies by allowing vulnerability scanners to target specific slices of infrastructure more intelligently. This not only enhances visibility but also enables organizations to track patch SLAs, measure performance, and triage alerts based on business impact.

Tagging assets with the owner ensures accountability, a key requirement in regulated sectors such as finance and government. When implemented correctly, these core tags significantly reduce MTTR (Mean Time to Remediate) and improve collaboration between SecOps, infrastructure, and development teams.

After determining which tags provide the most context, the next step is to automate their application as early as possible in the asset lifecycle.

Automate tagging during provisioning

Manual tagging is unreliable and difficult to scale. Use automation tools like Terraform, AWS CloudFormation, or Ansible to enforce tagging standards at the time of deployment. For example, teams can use a Terraform module, such as the one shown below, to apply required tags by default:

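```hcl
module "ec2_instance" {
  source = "./modules/ec2"

  # Required tags passed into the module; assumes ./modules/ec2 declares a
  # "tags" input variable and applies it to the resources it creates.
  tags = {
    environment = var.environment
    owner       = var.owner
    criticality = var.criticality
    asset_type  = "server"
  }
}
```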

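Tag drift is common in autoscaling environments. Defining tags in instance launch templates and in the Auto Scaling group configuration (for example, Auto Scaling group tags set to propagate at launch) ensures that instances created by scaling events inherit the required tags.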

Organizations should also embed tagging logic into CI/CD pipelines. For instance, a Jenkins pipeline can include pre-deploy checks that validate tagging conformance. AWS Config and Azure Policy can provide continuous evaluation to ensure newly created resources remain compliant. By shifting tagging enforcement left into infrastructure provisioning pipelines, organizations avoid the technical debt of post-provisioning audits and reduce the operational risk tied to untracked resources.
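A pre-deploy check of this kind can run against Terraform's machine-readable plan, produced with terraform show -json. The Python sketch below assumes the required keys are environment, owner, and criticality, and that the script is saved under a hypothetical name such as check_tags.py; it exits non-zero so that a Jenkins (or any CI) stage fails when a created or updated resource is missing a required tag.

```python
import json
import sys

# Required tag keys; adjust to match your taxonomy.
REQUIRED_TAGS = {"environment", "owner", "criticality"}


def missing_tags(plan: dict) -> dict[str, set[str]]:
    """Map each created or updated resource address to its missing required tags."""
    problems: dict[str, set[str]] = {}
    for rc in plan.get("resource_changes", []):
        change = rc.get("change", {})
        if not {"create", "update"} & set(change.get("actions", [])):
            continue  # skip no-op, read, and delete-only changes
        after = change.get("after") or {}
        if "tags" not in after and "tags_all" not in after:
            continue  # no tags attribute, or its value is unknown until apply
        # tags_all (AWS provider) also includes provider-level default_tags
        tags = after.get("tags_all") or after.get("tags") or {}
        absent = REQUIRED_TAGS - set(tags)
        if absent:
            problems[rc["address"]] = absent
    return problems


# CLI entry point, e.g. from a Jenkins stage:
#   terraform show -json plan.out > plan.json && python check_tags.py plan.json
if __name__ == "__main__" and len(sys.argv) == 2:
    with open(sys.argv[1]) as fh:
        result = missing_tags(json.load(fh))
    for address, absent in sorted(result.items()):
        print(f"{address}: missing {sorted(absent)}")
    sys.exit(1 if result else 0)
```

Resources whose tags cannot be determined at plan time may be skipped, so this works best as a fast first gate that complements AWS Config or Azure Policy evaluation rather than replacing it.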

Use built-in tagging features of your scanner

Once automation is in place, it’s equally important to utilize the scanner’s native features for grouping and filtering based on tags. Many scanners offer native tagging capabilities. For example:

- Tenable.io uses Asset Groups with dynamic filters based on tags.

- Qualys supports Asset Labels, which can be used in dashboard filters.

- AWS Inspector leverages EC2 and Lambda resource tags directly.

However, these tools differ in syntax, tag logic, and depth. The comparison table below can help teams understand what’s possible with a specific scanner and where custom workarounds may be needed.

| Scanner | Tag limitations | Workaround |
|---|---|---|
| Tenable.io | No nested logic, limited filtering options | Create layered asset groups |
| Qualys | Limited boolean logic in filters | Use external inventory mapping |
| AWS Inspector | Depends on AWS tag consistency at source | Validate tags via Config rules |

Tags play a critical role in how vulnerability scanners define scope, group assets, and generate meaningful reports. For example, tagging assets by environment allows organizations to scan production systems more frequently than development or staging, balancing risk coverage with operational efficiency. Tags like criticality or system_type can feed into severity scoring models, ensuring that business-critical servers are prioritized over low-risk endpoints.
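To make the scoring idea concrete, here is a minimal Python sketch that scales a CVSS base score by weights derived from the criticality and env tags. The weights and the priority_score function are hypothetical; the result is a ranking value for the remediation queue, not a CVSS score.

```python
# Hypothetical multipliers; every organization tunes its own.
CRITICALITY_WEIGHT = {"low": 0.5, "medium": 1.0, "high": 1.5, "critical": 2.0}
ENV_WEIGHT = {"dev": 0.5, "test": 0.5, "staging": 0.75, "prod": 1.0}


def priority_score(cvss: float, tags: dict[str, str]) -> float:
    """Scale a CVSS base score (0-10) by tag-derived business context.

    Missing or unrecognized tags get the highest weight, so untagged
    assets rise to the top of the queue instead of quietly sinking.
    """
    crit = CRITICALITY_WEIGHT.get(tags.get("criticality", ""), max(CRITICALITY_WEIGHT.values()))
    env = ENV_WEIGHT.get(tags.get("env", ""), max(ENV_WEIGHT.values()))
    return round(cvss * crit * env, 2)
```

Giving untagged assets the maximum weight is a deliberate design choice: it pushes tagging gaps into view rather than letting them hide at the bottom of the list.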

Tenable supports dynamic asset groups that use tag logic to determine scan scope and scheduling. A group filtered by env=prod and criticality=high can be scanned daily, while lower-risk assets follow a weekly cadence. Reports can then be segmented by tag attributes such as owner or business_unit, providing custom views for infrastructure teams, application owners, or leadership.
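Conceptually, a dynamic asset group is a saved tag filter that assets enter and leave as their tags change. The Python sketch below models that idea to show how tag rules translate into scan cadence; it is not Tenable's API, and the GROUPS list, group names, and cadences are hypothetical.

```python
# Conceptual model of tag-driven dynamic groups (not Tenable's API).
# Each group lists required tag values; the first matching group wins.
GROUPS = [
    ("prod-high", {"env": "prod", "criticality": "high"}, "daily"),
    ("default", {}, "weekly"),  # an empty rule set matches every asset
]


def assign_group(tags: dict[str, str]) -> tuple[str, str]:
    """Return (group name, scan cadence) for an asset's tags."""
    for name, rules, cadence in GROUPS:
        if all(tags.get(key) == value for key, value in rules.items()):
            return name, cadence
    raise LookupError("no group matched")  # unreachable while "default" exists


def build_schedule(assets: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Group hostnames by scan cadence."""
    schedule: dict[str, list[str]] = {}
    for host, tags in sorted(assets.items()):
        _, cadence = assign_group(tags)
        schedule.setdefault(cadence, []).append(host)
    return schedule
```

Because membership is recomputed from tags on every run, changing an asset's criticality tag moves it between cadences without any manual edit to the scan configuration.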

In Qualys, asset labels mapped to tags like compliance_scope or system_owner can trigger remediation workflows in tools like Freshservice. These workflows can automatically generate tickets with the right priority and route them to the appropriate teams, helping reduce manual triage and ensuring high-risk findings are addressed promptly.
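The routing logic behind such a workflow can be sketched in a few lines. This Python example is hypothetical throughout: the priority levels, the rule that a compliance_scope tag raises priority by one level, the vuln-triage fallback queue, and the returned field names are illustrative placeholders rather than Freshservice's ticket schema.

```python
LEVELS = ["low", "medium", "high", "urgent"]
BASE_PRIORITY = {"low": 0, "medium": 1, "high": 2, "critical": 3}


def build_ticket(finding: dict[str, str], tags: dict[str, str]) -> dict[str, str]:
    """Derive ticket priority and assignee group from asset tags."""
    # Missing or unknown criticality is treated as "high" so it is not ignored
    level = BASE_PRIORITY.get(tags.get("criticality", ""), 2)
    if tags.get("compliance_scope"):
        level = min(level + 1, len(LEVELS) - 1)  # in-scope assets bumped once
    return {
        "subject": f"{finding['cve']} on {finding['hostname']}",
        "priority": LEVELS[level],
        # Assets without an owner tag fall back to a shared triage queue
        "group": tags.get("system_owner", "vuln-triage"),
    }
```

The resulting dictionary would then be mapped onto whatever fields the ticketing system's API actually expects.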

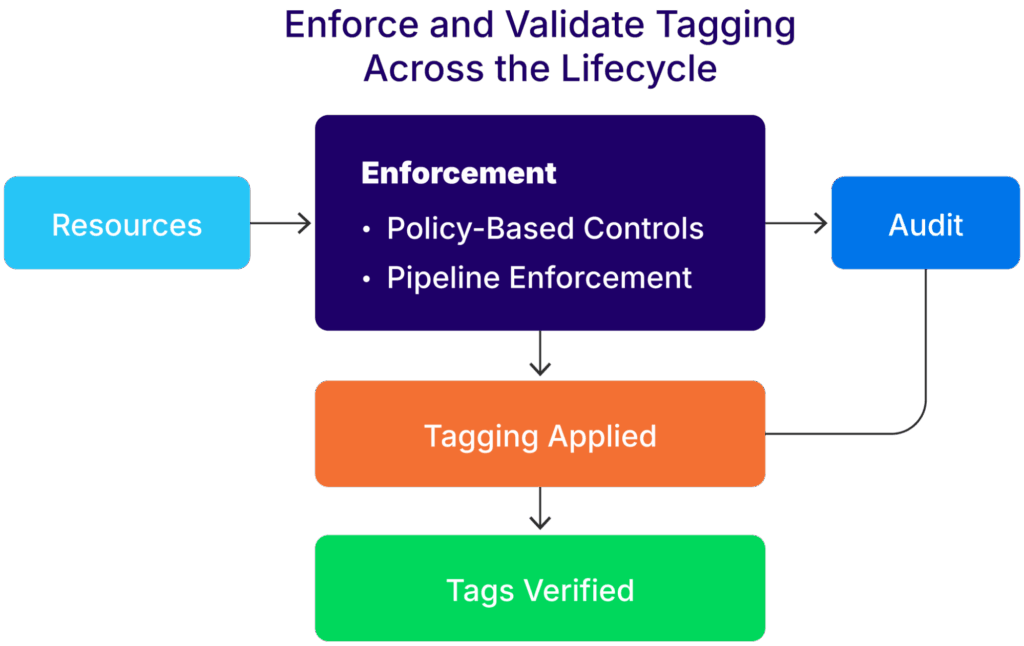

Enforce and validate tagging across the asset lifecycle

To maintain long-term value, these tagging practices need to be enforced and routinely validated across all stages of the asset lifecycle. Once tagging policies are enforced at the source, the focus of operational oversight must evolve. Rather than looking for untagged assets, audits should verify the accuracy, consistency, and relevance of the tags already applied.

Enforcement mechanisms

Common asset tagging enforcement mechanisms include:

- Policy-based controls: Use AWS Service Control Policies (SCPs), Azure Policy, or GCP Org Policies to block the creation of resources that do not meet predefined tagging standards.

- Pipeline enforcement: Incorporate tagging validation in CI/CD workflows using IaC linters or custom pre-deploy validation steps in Terraform, Jenkins, GitHub Actions, or similar tooling.

An asset tagging validation, audit, and enforcement workflow (source)

Sample AWS SCP policy for enforcing tagging policies

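The JSON below provides a practical example of an AWS SCP that engineers can use to enforce asset tagging policies. It denies ec2:RunInstances whenever the request omits the owner or the environment tag. Each tag gets its own statement because multiple keys inside one Null block are evaluated with AND logic; combining them would block only launches that omit both tags.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "DenyRunInstancesWithoutOwnerTag",
      "Effect": "Deny",
      "Action": "ec2:RunInstances",
      "Resource": "arn:aws:ec2:*:*:instance/*",
      "Condition": {
        "Null": { "aws:RequestTag/owner": "true" }
      }
    },
    {
      "Sid": "DenyRunInstancesWithoutEnvironmentTag",
      "Effect": "Deny",
      "Action": "ec2:RunInstances",
      "Resource": "arn:aws:ec2:*:*:instance/*",
      "Condition": {
        "Null": { "aws:RequestTag/environment": "true" }
      }
    }
  ]
}
```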

Audit focus areas

The four primary focus areas of an asset tagging audit are:

- Check for tag value validity (e.g., no free-text entries like “prod1” or “Production!” when “prod” is the standard).

- Verify controlled vocabulary conformance across business units.

- Identify stale or outdated tags, such as references to decommissioned teams or projects.

- Confirm that ownership and criticality tags reflect current organizational structure and asset importance.

Sample Python code to validate ‘owner’ tags against an approved list

The code below provides a simple example of how teams can programmatically validate tags. In this case, the 'owner' asset tag is being checked against an approved_owners list.

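```python
# Assumes asset_list is a list of dicts exported from the scanner or CMDB
# and approved_owners is a set of valid team identifiers.
for asset in asset_list:
    owner = asset.get("owner")  # None if the tag is missing entirely
    if owner not in approved_owners:
        print(f"Invalid owner tag: {owner} on {asset.get('hostname')}")
```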

Key takeaway: audit to ensure integrity

Mandatory tagging improves coverage, but effective risk reduction depends on the quality of those tags. Shift auditing practices to focus on completeness, correctness, and contextual relevance, ensuring that the tagging framework remains a high-integrity component of your vulnerability management lifecycle.

With enforcement and validation in place, tagging becomes a reliable foundation for downstream reporting and risk analysis.

Integrate asset tagging with dashboards and risk reports

Tags become powerful when connected to risk dashboards and reporting tools. In Splunk or Tenable.sc, tags like business_unit, env, and owner allow segmentation by team, product, or severity tier. This enables:

- Executive reporting on patch SLAs by team

- Prioritization dashboards by criticality and exposure

- Ownership-aware metrics for accountability

Visual dashboards showing vulnerabilities by owner and criticality can drive immediate action during triage calls and monthly reviews. Tag-driven filtering also improves signal-to-noise ratio for analysts.
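Behind a dashboard like this is usually a simple aggregation keyed on tag values. A minimal Python sketch, assuming findings have been exported with owner and criticality tags plus a days_open count; the SLA_DAYS thresholds and the sla_report function are hypothetical.

```python
from collections import defaultdict

# Hypothetical remediation SLAs in days, per criticality tag value
SLA_DAYS = {"critical": 7, "high": 14, "medium": 30, "low": 90}


def sla_report(findings: list[dict]) -> dict[str, dict[str, int]]:
    """Per owner: number of open findings and how many have exceeded their SLA."""
    report: dict[str, dict[str, int]] = defaultdict(lambda: {"open": 0, "breached": 0})
    for f in findings:
        if f["status"] != "open":
            continue
        owner = f.get("owner") or "unassigned"  # surfaces missing owner tags
        row = report[owner]
        row["open"] += 1
        # Unknown criticality falls back to the strictest SLA
        limit = SLA_DAYS.get(f.get("criticality"), min(SLA_DAYS.values()))
        if f["days_open"] > limit:
            row["breached"] += 1
    return dict(report)
```

Grouping untagged findings under "unassigned" keeps them visible in executive reports instead of silently dropping them.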

Organizations can also define remediation workflows that prioritize assets by tag attributes. For example, critical assets tagged with env=prod and criticality=high can be routed to an emergency remediation queue. Custom tags can be integrated with BI tools like Power BI or Tableau to generate reports for risk committees and board-level oversight. By standardizing tag-driven metrics, organizations gain transparency across their vulnerability lifecycle, from discovery to resolution.

Ultimately, asset tags only reach their full potential when they support measurable insights and actionable risk decisions across both security and business teams.

Common asset tagging pitfalls and remediation tips

Four common pitfalls can undermine the value of asset tagging in vulnerability scanning:

- Tag drift: Assets lose or change tags during lifecycle events such as rehydration, scaling, or manual changes.

- Inconsistent values: Different teams use different values for the same tag type (e.g., “Production”, “Prod”, “prod”).

- Lack of ownership: Tags like owner are left blank, making accountability tracking difficult.

- Limited tool integration: Tags exist only in the CMDB or cloud provider and are not reflected in scanners or dashboards.

Fortunately, there are proven techniques for addressing these challenges:

- Automate tag enforcement in CI/CD pipelines using tools like Terraform, CloudFormation, or GitHub Actions to ensure tags are applied consistently at deployment.

- Define and publish standardized tag taxonomies with approved values, owners, and usage guidelines. Store them as policy-as-code where possible.

- Perform regular tag hygiene audits across cloud, CMDB, and scanner platforms to detect drift, gaps, or violations of naming conventions.

- Implement bidirectional sync between CMDB, cloud, and scanning tools to prevent tag fragmentation and stale data.

Conclusion

Tagging is more than just good asset management hygiene. It is a control surface that unlocks automation, accountability, and scalable risk management. A well-executed tagging strategy supports everything from triage and reporting to regulatory compliance.

The best practices outlined above contribute to key business outcomes, for example:

- CMDB alignment ensures accurate asset mapping

- A consistent taxonomy prevents fragmentation and miscommunication

- Tool-specific enforcement improves automation reliability

- Integrated dashboards enable executive visibility

Without consistent tagging, organizations struggle to prioritize vulnerabilities, assign remediation, or measure progress. With it, vulnerability management shifts from reactive to proactive.

Unclassified assets remain unmanaged and unprioritized. Asset tagging is a strategic pillar of any mature vulnerability management program. Organizations that treat tags as policy-enforced metadata, rather than optional labels, will gain a measurable advantage in scaling vulnerability operations, reducing the mean time to patch, and demonstrating audit readiness across frameworks such as NIST CSF, ISO 27001, and the Essential Eight. This approach strengthens operational efficiency and establishes traceable accountability across the asset lifecycle.