A Detailed Guide to Enhancing Data Center Energy Efficiency

Achieving high efficiency in a data center is a difficult task for both designers and operations staff. There are many pitfalls, and mitigating them requires attention to detail in planning and operation. Data centers are complex technical facilities and can almost be seen as living organisms, so specialized attention to individual components, their interaction, and planning for growth are constant concerns.

Data centers are currently responsible for about 1-2% of global CO2 emissions. They have also grown dramatically in size: While a 1 MW data center was considered large a decade ago, today there are data centers consuming 500 MW and more, and sizes will only continue to increase. A concurrent trend is an increase in the number of smaller data center deployments, especially in proximity to industrial complexes to support the IoT paradigm.

Data center energy consumption keeps rising and energy availability fluctuates, in a global context marked by supply chain problems, energy shortages, climate change, and inflation, to name just a few. Energy use has become more critical than ever, and increasing the efficiency of existing and new facilities has never been more important.

Efficiency is somewhat less challenging to address when creating new data centers, because the designers are likely already aware of the issues at hand. It is a much more significant concern for existing facilities that rely on relatively old design paradigms and legacy equipment and infrastructure but must now support IT loads with more demanding power and efficiency requirements.

Summary of key data center energy efficiency concepts

Unless careful attention is given to the optimal design and operation of the data center, the overall energy efficiency of the entire facility can be significantly compromised; this also means that the peak efficiency figures claimed by OEMs may not be met. The table below summarizes some of the essential considerations for ensuring data center energy efficiency that are discussed in this article.

| Concept | Description |
|---|---|
| IT load management | The processes running on individual components, such as servers or switches, need to be optimized to achieve high efficiency across the whole facility. |
| IT rack management | The placement of servers and switches on racks needs to be optimized to reduce imbalances at the rack level and maintain uniform power distribution across all racks. |
| Overall load staging and balancing | Proper power loading of components requires monitoring and adjusting all processes and data throughout the active IT infrastructure, from the perspective of both current and future capacity. |
| Voltage level considerations | Appropriate voltage levels must be supplied from the point of connection (PoC) to the loads. |
| Choosing UPS systems | Uninterruptible power supplies (UPSes) can use a variety of technologies and operating modes, and it’s important to choose the right ones to ensure high efficiency. |
| Efficient lighting | Deciding on the right type of lighting, optimizing the placement of lights, and using smart sensors can all help minimize energy losses. |
| Choosing cooling technology | Free cooling and other technologies must be carefully considered for optimum efficiency. |
| Hot/cold aisles and containment | Dividing the data center into hot and cold zones and using containment can improve data center energy efficiency. |
| Use of waste heat | Data center heat has to be removed from the facility, so it is worth considering how to make use of it instead of simply releasing it into the atmosphere. |
| Active monitoring | Quick decision-making and continuous optimization of the facility require constant monitoring and data gathering on all equipment, especially IT components. |
| Regular maintenance | Preventive maintenance enhances the efficiency and uptime of individual components in the facility. Regular site audits and internal or external support increase overall efficiency. |
| DCIM | Data center infrastructure management (DCIM) is a platform for gathering data and scheduling operational tasks to increase efficiency levels. |

Data center efficiency categories and best practices

The aspects of data center infrastructure that directly impact overall data center efficiency can be roughly categorized into four groups that are described in the major sections that follow. These are active IT load, electrical powertrain, cooling systems, and automation and monitoring.

Active IT load

Everything starts with the IT load, since that’s why the data center exists. Miscommunication between IT and mechanical and electrical (M&E) professionals will often lead to poor performance and problematic facilities.

It is essential to plan precisely how IT racks are populated with active IT equipment to avoid power and thermal imbalances. Active processes and applications running on this equipment must be consolidated and properly managed.

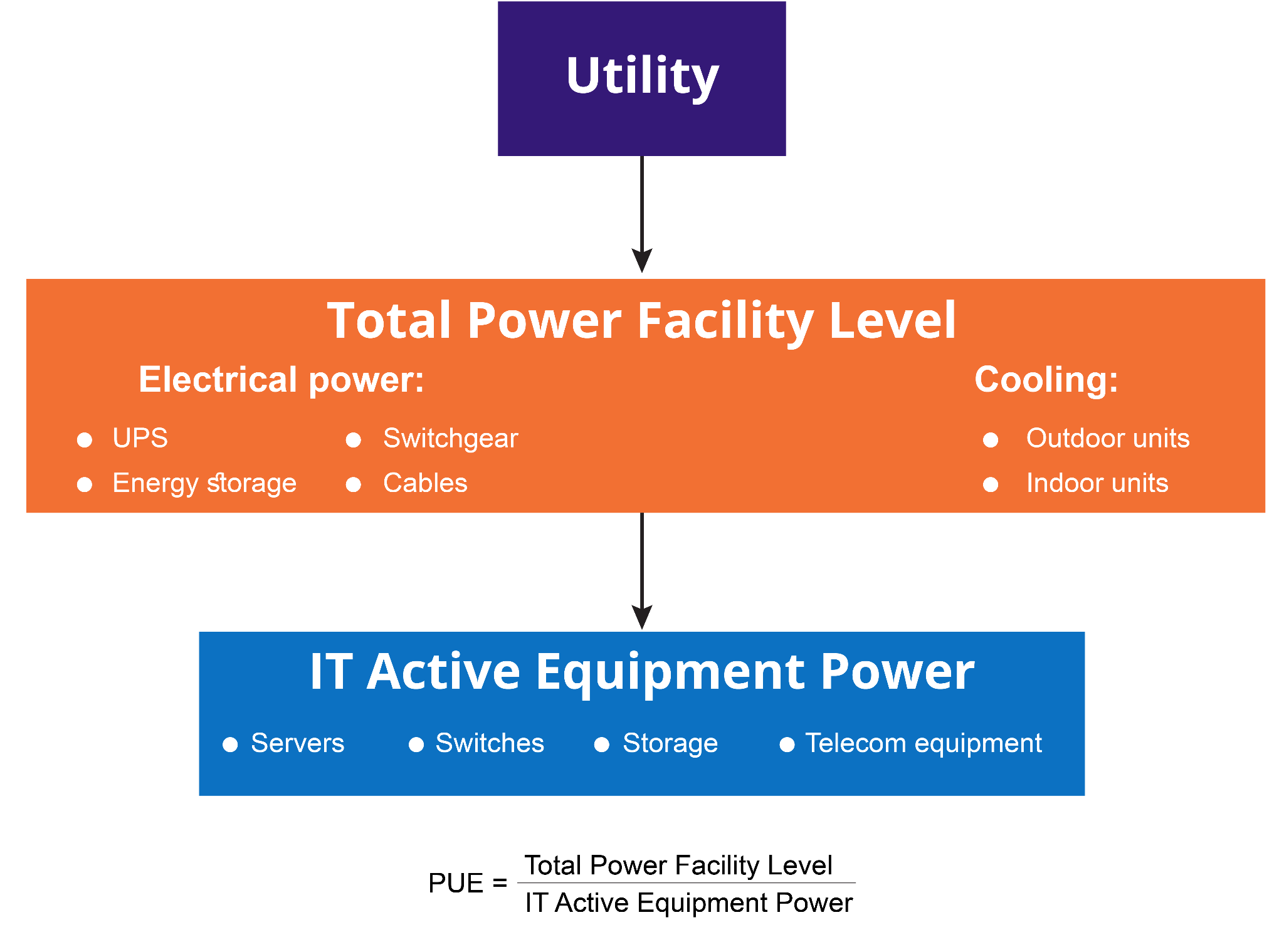

Power usage effectiveness (PUE) is an indicator of data center efficiency, calculated as the ratio of the total energy consumed by the data center, measured at the entry point, to the energy consumed by the IT equipment. A PUE of 1.0 would mean that all energy goes to the IT equipment; everything above 1.0 represents overhead such as cooling, power conversion losses, and lighting. The global average PUE is roughly 1.55, according to Uptime Institute reports.

Power usage effectiveness (PUE) calculation

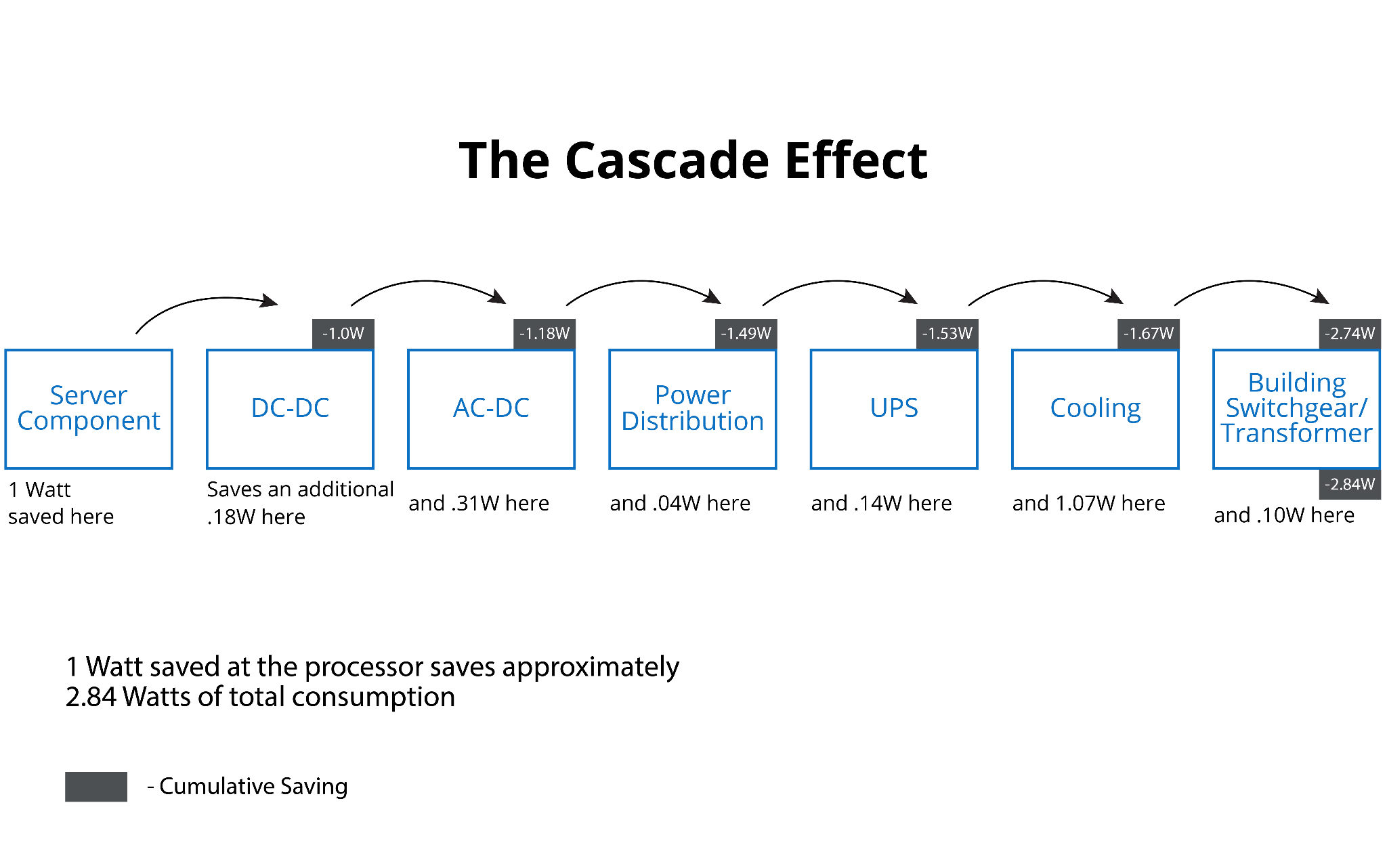

Choosing an appropriate rack power density and balancing load across all racks, rows, and halls is paramount to the efficiency of the supporting structure, powertrain, and cooling. Note that, at the average PUE of 1.55 mentioned above, every watt saved at the rack saves about 1.55 watts at the facility level. In specific cases, however, the savings can be higher: The example below shows a “cascade effect” in which the savings are much larger.

The cascade effect shows how power savings can “snowball.” (source)
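The PUE definition and the watt-saved rule of thumb above can be expressed as a short calculation. This is a minimal sketch that assumes non-IT overhead scales in proportion to IT load, which is only a first-order approximation (the cascade effect shows why real savings can be larger); the function names and meter readings are hypothetical.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def facility_savings_kw(it_savings_kw: float, pue_value: float) -> float:
    """First-order estimate of facility-level savings for a saving at the IT load.

    Assumes overhead (cooling, conversion losses, lighting) scales proportionally
    with IT load, so each IT watt saved removes roughly PUE watts at the meter.
    """
    return it_savings_kw * pue_value


# Hypothetical annual meter readings
annual_pue = pue(total_facility_kwh=13_578_000, it_equipment_kwh=8_760_000)
print(round(annual_pue, 2))                              # 1.55
print(round(facility_savings_kw(10.0, annual_pue), 1))  # 15.5
```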

IT load management

Effective IT load management is essential for ensuring smooth data center operation and high efficiency. Through IT load management, organizations can balance the utilization of servers, networks, storage, and other IT resources to prevent performance bottlenecks, minimize downtime, and maximize efficiency. This involves techniques such as load balancing, capacity planning, workload prioritization, and scaling resources based on demand to meet the needs of applications, services, and users while maintaining a stable and responsive IT environment.

Here are some specific best practices related to IT load management:

- Regularly perform system updates.

- Virtualize applications and consolidate them.

- Consolidate servers by migrating loads onto more efficient servers as appropriate.

- Maximize storage utilization through virtualization, and optimize networking functions by reducing broadcast propagation and employing advanced traffic balancing and adaptive routing.

- Consider appropriate power saving modes for every server type in the data center, based on specific needs.

- Use automatic discovery methods for all infrastructure layers.
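Server consolidation is essentially a bin-packing problem: fit the workloads onto as few servers as possible so the remaining ones can be powered down or repurposed. The sketch below uses the first-fit decreasing heuristic, one simple option among many; real schedulers also weigh memory, I/O, affinity, and redundancy. The function name and the normalized load values are hypothetical.

```python
from typing import List


def consolidate_ffd(vm_loads: List[float], server_capacity: float) -> List[List[float]]:
    """Pack workloads onto servers using the first-fit decreasing heuristic.

    vm_loads: per-workload demand in a common unit (e.g., normalized CPU share).
    server_capacity: usable capacity per server in the same unit, usually set
    below 1.0 to leave headroom for peaks.
    """
    servers: List[List[float]] = []
    remaining: List[float] = []
    for load in sorted(vm_loads, reverse=True):  # largest workloads first
        if load > server_capacity:
            raise ValueError(f"workload {load} exceeds single-server capacity")
        for i, free in enumerate(remaining):
            if load <= free:  # first server with enough room
                servers[i].append(load)
                remaining[i] -= load
                break
        else:  # no existing server fits, so bring another one into service
            servers.append([load])
            remaining.append(server_capacity - load)
    return servers


# Hypothetical workloads, with each server capped at 80% utilization
placement = consolidate_ffd([0.5, 0.2, 0.4, 0.3, 0.1, 0.6], server_capacity=0.8)
print(len(placement), "servers needed")
```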

IT rack management

IT racks are populated with stacked active IT equipment, such as servers; the space they require is based on their height, and the main unit of measurement is the “unit” (U), which has a height of 1.75” (about 4.5 cm). Devices such as servers and switches are typically 1-3 U in height, and, depending on their type and role, may be placed in different parts of the rack.

Rack power density has a significant impact on the overall layout and the appropriate cooling strategy, so data center hardware optimization starts with rack optimization. It is essential to plan carefully and distribute the servers across racks that form each row so that the cooling and electrical power operate optimally. This is important not only when the data center is created but also on an ongoing basis because a data center is not static: It features constant hardware updates and expansions.

An example data center rack showing several 1U, 2U, and 3U devices. (source)

IT rack management best practices include the following:

- Measure power consumption, ideally on the individual server level, utilizing smart metering.

- Assign devices to racks based on a thorough analysis of electrical and cooling considerations.

- Assess current requirements and plan for future requirements.
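Power-aware placement can be sketched as a greedy balancing routine: place the most power-hungry devices first, each on the least-loaded rack that still has enough power budget and rack units. This is a simplified illustration with hypothetical class and field names; a real assignment would also account for cooling capacity per zone, network topology, and redundancy.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Rack:
    name: str
    power_budget_kw: float
    space_u: int
    used_kw: float = 0.0
    used_u: int = 0
    devices: List[str] = field(default_factory=list)


@dataclass
class Device:
    name: str
    power_kw: float
    height_u: int


def assign_devices(devices: List[Device], racks: List[Rack]) -> List[Rack]:
    """Greedy balancing: largest loads first, each on the least-loaded rack (by power)
    that still has enough power budget and free rack units."""
    for dev in sorted(devices, key=lambda d: d.power_kw, reverse=True):
        candidates = [
            r for r in racks
            if r.used_kw + dev.power_kw <= r.power_budget_kw
            and r.used_u + dev.height_u <= r.space_u
        ]
        if not candidates:
            raise RuntimeError(f"no rack can host {dev.name}")
        target = min(candidates, key=lambda r: r.used_kw)
        target.used_kw += dev.power_kw
        target.used_u += dev.height_u
        target.devices.append(dev.name)
    return racks


# Hypothetical example: two 42U racks with 10 kW budgets each
racks = assign_devices(
    [Device("a", 4.0, 2), Device("b", 3.0, 1), Device("c", 3.0, 1), Device("d", 2.0, 1)],
    [Rack("R1", 10.0, 42), Rack("R2", 10.0, 42)],
)
for r in racks:
    print(r.name, r.used_kw, "kW", r.devices)
```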

Electrical powertrain

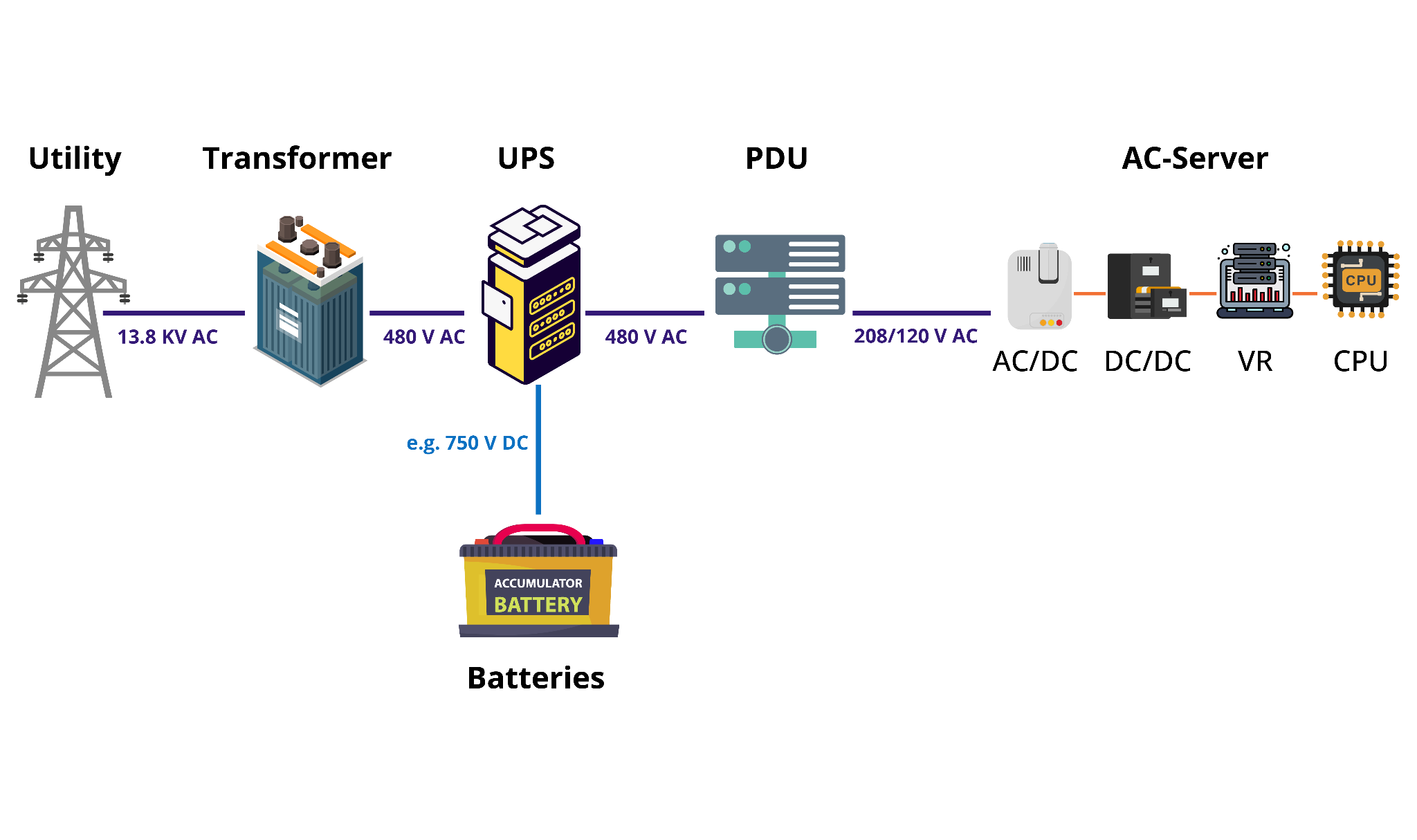

Long power distribution paths with many power conversion steps (especially at low voltage levels) can bring down overall efficiency levels. Choose appropriate voltage levels, depending on the utility PoC voltage, and consider stepping down to a low voltage as close to the load as possible without endangering safety. The correct choice of uninterruptible power supply (UPS) options based on required size, functionality, and redundancy will also impact overall efficiency at the facility level.

Electrical powertrain of a data center (source)
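Because conversion stages are in series, the overall efficiency of the path is the product of the stage efficiencies, so every extra stage lowers it. The sketch below compares a path with and without one extra transformer stage; the stage names and efficiency values are hypothetical placeholders, not measured figures.

```python
from math import prod
from typing import Dict


def chain_efficiency(stages: Dict[str, float]) -> float:
    """Overall efficiency of series conversion stages: the product of stage efficiencies."""
    for name, eff in stages.items():
        if not 0 < eff <= 1:
            raise ValueError(f"stage {name!r} efficiency must be in (0, 1]")
    return prod(stages.values())


# Hypothetical stage efficiencies for illustration only; real values depend on
# the equipment and on how heavily each stage is loaded.
long_path = {"MV/LV transformer": 0.985, "UPS (double conversion)": 0.94,
             "PDU transformer": 0.97, "server PSU": 0.92}
short_path = {"MV/LV transformer": 0.985, "UPS (double conversion)": 0.94,
              "server PSU": 0.92}

for label, path in (("long", long_path), ("short", short_path)):
    eff = chain_efficiency(path)
    # Input power is 1000 / eff kW for 1 MW delivered, so losses are the difference.
    print(f"{label}: {eff:.3f} overall, {1000 / eff - 1000:.0f} kW of losses per MW of IT load")
```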

Overall load staging and balancing

Data centers are typically deployed modularly, in stages, which gives the infrastructure owner a faster time to market and a quicker revenue stream. Although this methodology makes financial sense, it can cause problems with how IT load is distributed across the facility: Incremental deployments may lead to imbalances that can disrupt operations and cause significant drops in efficiency.

It is recommended to plan and develop scenarios for load expansion:

- Implement a routine to validate the current load status of individual load groups.

- Design the system so that maintenance can be carried out without interruptions. In case of imbalances downstream, the system must be able to rebalance itself without causing interruptions to the load.

- Introduce within the organization a structured method that enables the tracking of IT components and enhances planning flexibility.
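A routine for validating the load status of individual load groups (for example, a PDU or busway section) could check each group against its rating and for phase imbalance. The sketch below uses one common imbalance definition, the maximum deviation from the average phase current as a percentage of the average; the group names and the 80% utilization and 10% imbalance limits are hypothetical placeholders for the site's own design criteria.

```python
from typing import Dict, List, Tuple


def phase_imbalance_pct(phase_currents_a: Tuple[float, float, float]) -> float:
    """Maximum deviation from the average phase current, as a percentage of the average."""
    avg = sum(phase_currents_a) / 3
    if avg == 0:
        return 0.0
    return max(abs(i - avg) for i in phase_currents_a) / avg * 100


def validate_load_groups(groups: Dict[str, Tuple[float, float, float]],
                         rated_current_a: float,
                         max_utilization: float = 0.8,     # hypothetical limit
                         max_imbalance_pct: float = 10.0,  # hypothetical limit
                         ) -> List[str]:
    """Return warnings for load groups that are too heavily loaded or unbalanced."""
    warnings = []
    for name, currents in groups.items():
        utilization = max(currents) / rated_current_a
        if utilization > max_utilization:
            warnings.append(f"{name}: {utilization:.0%} of rated current")
        imbalance = phase_imbalance_pct(currents)
        if imbalance > max_imbalance_pct:
            warnings.append(f"{name}: {imbalance:.1f}% phase imbalance")
    return warnings


# Hypothetical per-phase currents (A) for two load groups rated at 160 A
for w in validate_load_groups({"PDU-A1": (100.0, 102.0, 98.0),
                               "PDU-A2": (150.0, 90.0, 120.0)}, rated_current_a=160.0):
    print(w)
```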

Voltage level considerations

During the design phase, the facility will interface with the commercial grid via a point of connection (PoC); the exact way this is done depends on the site location and the size of the data center. Since data centers are increasing in size, it is very common to connect a 100 MW data center to a high-voltage line on the transmission grid: typically 110 kV or even higher. Since IT loads (like servers or switches) nearly always use single-phase, low-voltage power (110 V or 220 V), the voltage needs to be transformed several times. Of course, local laws and safety regulations must be adhered to during the process.

Follow these efficiency-related steps when designing the voltage transformation and distribution path:

- Consider reducing the number of transformer stages to avoid transformation losses.

- Use higher voltage levels for longer parts of the transmission path to reduce efficiency losses within cables (which increase at lower voltages).
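The reasoning behind the second point is that, for the same power, current is inversely proportional to voltage, while resistive cable losses grow with the square of the current. The sketch below compares two voltage levels for the same hypothetical balanced three-phase feeder; it considers only resistive (I²R) losses and assumes the same conductor resistance at both voltages, which real medium-voltage and low-voltage cables will not share.

```python
import math


def three_phase_cable_loss_kw(load_kw: float, line_voltage_v: float,
                              resistance_per_conductor_ohm: float,
                              power_factor: float = 0.95) -> float:
    """Resistive loss in a balanced three-phase feeder: P_loss = 3 * I^2 * R."""
    current_a = load_kw * 1000 / (math.sqrt(3) * line_voltage_v * power_factor)
    return 3 * current_a ** 2 * resistance_per_conductor_ohm / 1000


# Hypothetical feeder: 500 kW over conductors with 0.01 ohm resistance each
for voltage in (400, 10_000):
    loss = three_phase_cable_loss_kw(500, voltage, 0.01)
    print(f"{voltage} V: {loss:.2f} kW lost in the cable")
```

Raising the voltage by a factor of 25 (400 V to 10 kV) cuts the resistive loss by a factor of 625 under these assumptions.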

Choosing uninterruptible power supply (UPS) systems

UPS systems are essential in data centers, not only to ensure continuity of service during outages but also to protect the load from voltage surges and sags. UPS devices can generally be divided into two groups: static UPSes, which use power electronics, and dynamic (or rotary) UPSes, which rely on mechanical inertia. The most common type in data centers today is the static UPS based on a double-conversion topology, in which power flows first through a rectifier and the output is then produced by an inverter. These systems also use static bypass switches, which can transfer the load to a bypass path during faults or overloads and, in modern UPSes, enable higher-efficiency operating modes. UPSes are typically arranged in configurations that meet uptime requirements, so they often incorporate redundancy.

UPSes can operate in different modes: voltage-frequency-independent (VFI) mode, voltage-independent (VI) mode, and voltage-frequency-dependent (VFD) mode. The main differences between these modes are their efficiency and the degree to which the load is exposed to anomalies coming from the commercial grid.

| Mode | Typical efficiency | Characteristic |
|---|---|---|
| VFI | 93% | Load protected from voltage and frequency deviations |
| VI | 96% | Load protected from voltage deviations but not from frequency deviations |
| VFD | 99% | Load exposed to voltage and frequency deviations |

Although most manufacturers offer all three modes in their UPSes, it is very common for operators to run them in VFI mode only. This is often due either to contracts imposed by end clients in colocation spaces or to personnel being concerned about risk mitigation.
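To put the efficiency differences in the table into energy terms, the UPS losses at a given output are load × (1/efficiency − 1). The sketch below applies the typical efficiencies from the table to a hypothetical constant 1 MW load over a year; it ignores the fact that efficiency varies with load and that the cooling system must also remove the heat from these losses.

```python
HOURS_PER_YEAR = 8760


def annual_ups_loss_kwh(load_kw: float, efficiency: float) -> float:
    """Energy lost in the UPS over a year at a constant load.

    Input power is load / efficiency, so losses are load * (1 / efficiency - 1).
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return load_kw * (1 / efficiency - 1) * HOURS_PER_YEAR


# Typical efficiencies from the table, applied to a hypothetical constant 1 MW load
for mode, eff in (("VFI", 0.93), ("VI", 0.96), ("VFD", 0.99)):
    print(f"{mode}: {annual_ups_loss_kwh(1000, eff):,.0f} kWh/year")
```

Under these assumptions, VFI mode loses roughly 659 MWh per year versus roughly 88 MWh in VFD mode.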

Here are some best practices related to UPS systems:

- Size each UPS so that it will be at approximately 65-90% of maximum load, which keeps its operation in the optimal range of the efficiency curve.

- Consider using more efficient modes of operation, depending on grid power quality, load criticality, and end-client contracts (in the case of colocation).

- Conduct regular maintenance, including replacing components according to manufacturer specifications.
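Redundancy interacts with the 65-90% sizing target: When all installed modules share the load equally, adding a redundant module lowers the loading of every module (in a 2N system, each side normally carries at most half of the load). The sketch below assumes equal load sharing; the function names and system figures are hypothetical.

```python
from typing import Tuple


def module_load_fraction(it_load_kw: float, module_rating_kw: float,
                         installed_modules: int) -> float:
    """Per-module load fraction when all installed modules share the load equally."""
    return it_load_kw / (module_rating_kw * installed_modules)


def check_ups_sizing(it_load_kw: float, module_rating_kw: float,
                     n_modules: int, redundant_modules: int,
                     band: Tuple[float, float] = (0.65, 0.90)) -> str:
    """Classify per-module loading of an N+X UPS system against a target band."""
    fraction = module_load_fraction(it_load_kw, module_rating_kw,
                                    n_modules + redundant_modules)
    low, high = band
    if fraction < low:
        return f"under-loaded at {fraction:.0%}"
    if fraction > high:
        return f"over-loaded at {fraction:.0%}"
    return f"within target band at {fraction:.0%}"


# Hypothetical system: 800 kW IT load on 250 kW modules (N = 4)
print(check_ups_sizing(800, 250, n_modules=4, redundant_modules=0))  # within target band at 80%
print(check_ups_sizing(800, 250, n_modules=4, redundant_modules=1))  # under-loaded at 64%
```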

Choosing energy storage technology

UPS systems typically include batteries to provide initial continuity in the event of power failures, and most data centers have diesel generators for long-term outages. Energy storage systems are often the right choice for bridging the gap between these backup solutions: They often work in conjunction with UPSes to extend runtime before the generator must be fired up.

Energy storage comes in many different shapes and sizes, and the energy may be stored using a variety of technologies (chemical, mechanical, etc.). The most common variant used in data centers today is the chemical battery type, a maintenance-free technology that comes in two forms: valve-regulated lead acid (VRLA), the incumbent technology, and lithium-ion (Li-ion), which is gaining traction in the market.

Traditionally, energy storage systems have provided relatively short runtimes due to the expectation that a diesel generator would take over in the event of a prolonged failure of grid power. However, due to the increasing emphasis placed on sustainability and reducing greenhouse gas emissions, many companies are trying to minimize the use of fossil-fuel-powered generators. They are also increasingly interested in storing “green” power bought from the commercial grid via power purchase agreements (PPAs) for later use. As a result, the recent trend is toward higher-capacity energy storage systems; some even have enough capacity to run a facility for many hours, meaning that generators are only needed in extreme scenarios.

Follow these practices when it comes to energy storage:

- Decide on the right battery technology to meet the requirements of footprint, depth of discharge, autonomy, and power to provide the highest possible round-trip efficiency.

- Determine the appropriate capacity for the data center depending on its size, UPS systems in use, and desired runtime before it’s necessary to switch to a generator.

- Implement active monitoring to get information on the health status of the battery or other storage device and avoid hot spots that will reduce efficiency.
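A first estimate of the required storage capacity divides the energy the load needs (power × runtime) by the usable depth of discharge and by the efficiency between the battery and the load. This is a simplified sketch with hypothetical inputs; it ignores aging, temperature derating, and end-of-life margins, which a real sizing calculation must include.

```python
def required_battery_kwh(load_kw: float, runtime_h: float,
                         depth_of_discharge: float,
                         discharge_efficiency: float) -> float:
    """Nominal battery energy needed to carry a load for a given runtime.

    Only the usable fraction (depth of discharge) of nominal capacity counts,
    and conversion losses between battery and load increase the energy drawn.
    """
    if not (0 < depth_of_discharge <= 1 and 0 < discharge_efficiency <= 1):
        raise ValueError("depth of discharge and efficiency must be in (0, 1]")
    return load_kw * runtime_h / (depth_of_discharge * discharge_efficiency)


# Hypothetical example: 500 kW for 15 minutes, 90% usable depth of discharge,
# 95% efficiency from battery to load
print(f"{required_battery_kwh(500, 0.25, 0.9, 0.95):.1f} kWh")  # 146.2 kWh
```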

Efficient lighting

Lighting can be responsible for up to 3% of total data center power consumption. Because this share is relatively low, it’s easy to overlook lighting while focusing on other infrastructure elements, but in absolute terms the power allocated to lighting can still be significant: 3% of a 20 MW facility is 600 kW.

Follow these steps:

- Plan lighting placement by using advanced software that enables the simulation of illumination and power consumption to provide the most bang for the buck.

- Align lighting with the aisles between rows to maximize illumination.

- Use automation so that lights turn off or operate in dimmed mode when no personnel are present.

- Use LED lighting to reduce both power use and heat production (further saving cost by reducing cooling needs).
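The occupancy-based automation in the third step can be reduced to a simple rule: full light shortly after motion is detected, dimmed light after a quiet period, and off after a longer one. The sketch below is a hypothetical controller function with placeholder timeouts; in practice this logic lives in the lighting control or building management system, and emergency and egress lighting, which local codes typically require, is outside its scope.

```python
def light_level(seconds_since_motion: float,
                dim_after_s: float = 300,    # hypothetical: dim after 5 minutes
                off_after_s: float = 900,    # hypothetical: off after 15 minutes
                dimmed_level: float = 0.2) -> float:
    """Return the lighting level (0.0 to 1.0) for a zone based on occupancy."""
    if seconds_since_motion < dim_after_s:
        return 1.0          # recently occupied: full brightness
    if seconds_since_motion < off_after_s:
        return dimmed_level  # quiet for a while: dim
    return 0.0              # unoccupied: off


for t in (10, 600, 1000):
    print(t, "s since motion ->", light_level(t))
```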

Cooling systems

Cooling is typically the largest consumer of non-IT energy in a data center, so choosing the right cooling systems and implementing them appropriately is critically important. Cooling system selection must account for factors such as ambient temperature and humidity at the data center location, water supply availability, rack power density, and the total power of individual IT rows and halls, to name just a few. Important considerations include using hot and cold aisles, avoiding thermal hot spots, and using proper cable management to ensure good airflow while reducing pressure losses.

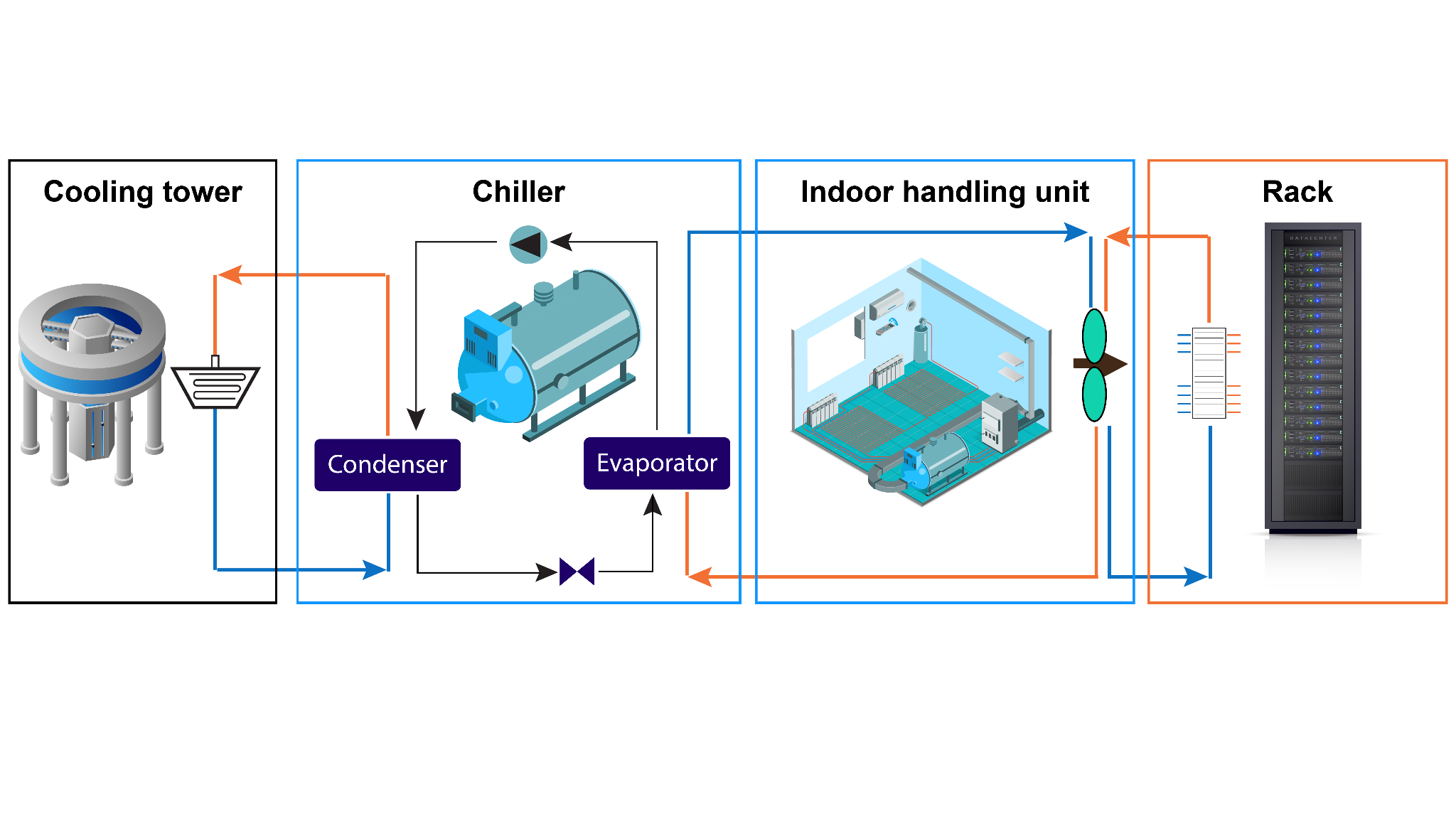

Choosing cooling technology

Everything starts with the design. It’s paramount to choose the appropriate technology depending on microlocation conditions and to properly design the overall layout depending on rack arrangements and equipment power densities.

There are many different technologies to consider. The load itself can be cooled by air or by liquid. Once the heat is taken from the load, it is transferred to the outside via a medium based either on water or on refrigerant. The right choice also depends on which external unit is used, such as a condenser, dry cooler, water chiller, or cooling tower.
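To see why rack power density drives the choice between air and liquid cooling, the sensible-heat relation gives the airflow needed to remove a heat load: volumetric flow = power / (air density × specific heat × temperature rise). The sketch below uses approximate air properties and a hypothetical 12 K rise across the servers; the function name is illustrative.

```python
AIR_DENSITY_KG_M3 = 1.2  # approximate, near sea level at about 20 °C
AIR_CP_J_KG_K = 1005     # approximate specific heat of air at constant pressure


def required_airflow_m3_h(heat_load_kw: float, delta_t_k: float) -> float:
    """Airflow needed to remove a heat load: V = P / (rho * cp * dT), in m3/h."""
    m3_per_s = heat_load_kw * 1000 / (AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * delta_t_k)
    return m3_per_s * 3600


# Hypothetical racks with a 12 K air temperature rise across the servers
for rack_kw in (5, 10, 30):
    print(f"{rack_kw} kW rack: {required_airflow_m3_h(rack_kw, 12):,.0f} m3/h")
```

Airflow scales linearly with rack power: A 10 kW rack needs roughly 2,500 m³/h at this temperature rise, and a 30 kW rack three times as much, which is where moving that much air becomes impractical and liquid cooling starts to make sense.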

Consider the following when optimizing in this area:

- Be mindful of microlocation conditions when designing cooling systems. While averages can provide useful general guidelines, always adjust to the specifics of the climate where the data center is situated.

- Consider using liquid cooling techniques for higher rack power densities (20+ kW per rack).

- Design the system so that the impact of redundant systems on cooling needs is taken into account.

- Consider using free-cooling-based architectures (but also be mindful of water use).

- Follow a regular maintenance schedule and enforce traceability.

- Employ active monitoring and automation for more agility in constant cooling optimization.
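Being mindful of microlocation conditions can start with a simple count of free-cooling hours from a year of hourly temperature data for the site: the hours in which outdoor air is cold enough to reach the supply setpoint without mechanical cooling. This sketch uses dry-bulb temperature only (evaporative and water-side systems also depend on humidity and wet-bulb temperature), and the setpoint and approach values are hypothetical.

```python
from typing import Iterable


def free_cooling_hours(hourly_ambient_c: Iterable[float],
                       supply_setpoint_c: float,
                       approach_k: float) -> int:
    """Count hours in which ambient air is at or below (setpoint - approach),
    i.e., cold enough to meet the supply setpoint without mechanical cooling."""
    threshold = supply_setpoint_c - approach_k
    return sum(1 for t in hourly_ambient_c if t <= threshold)


# Hypothetical hourly readings; a real analysis would use 8,760 values for the site
sample = [10, 15, 20, 25, 30]
print(free_cooling_hours(sample, supply_setpoint_c=24, approach_k=4), "of", len(sample), "hours")
```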

Hot/cold aisles and containment

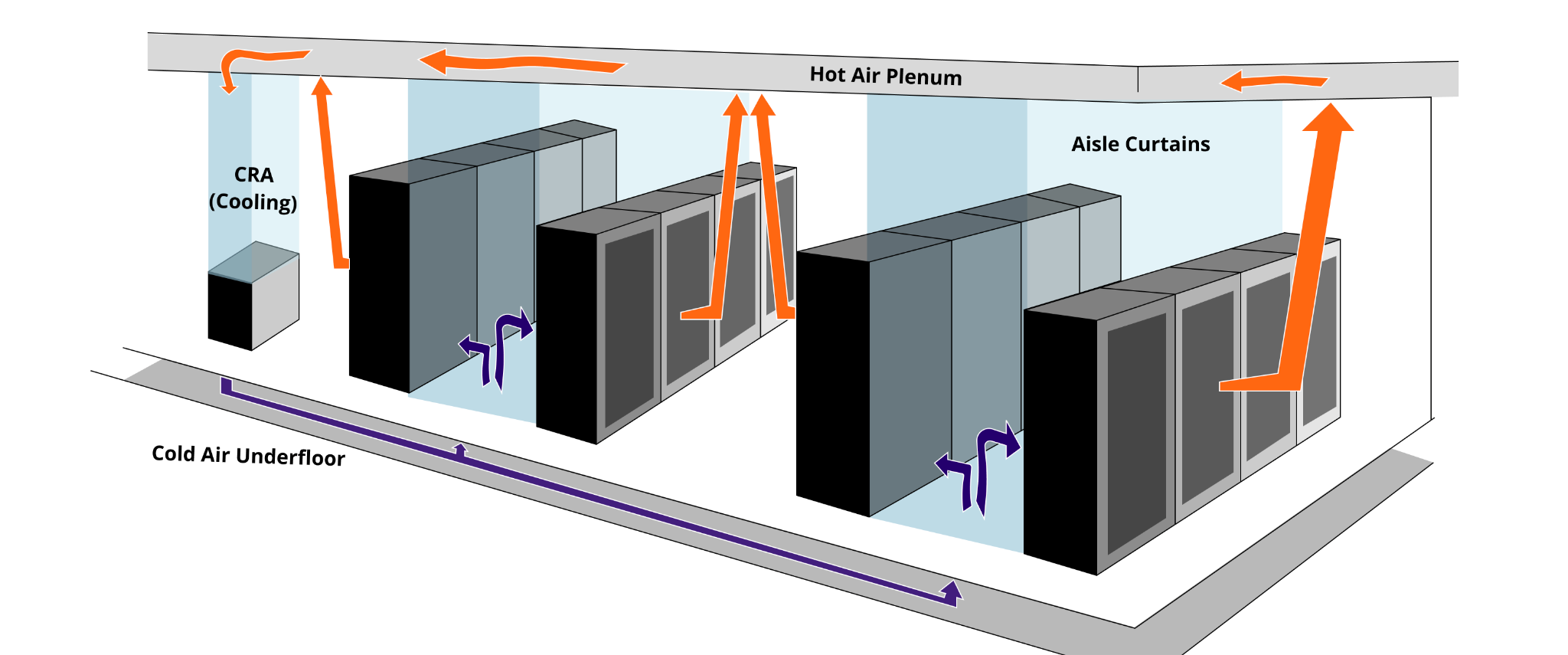

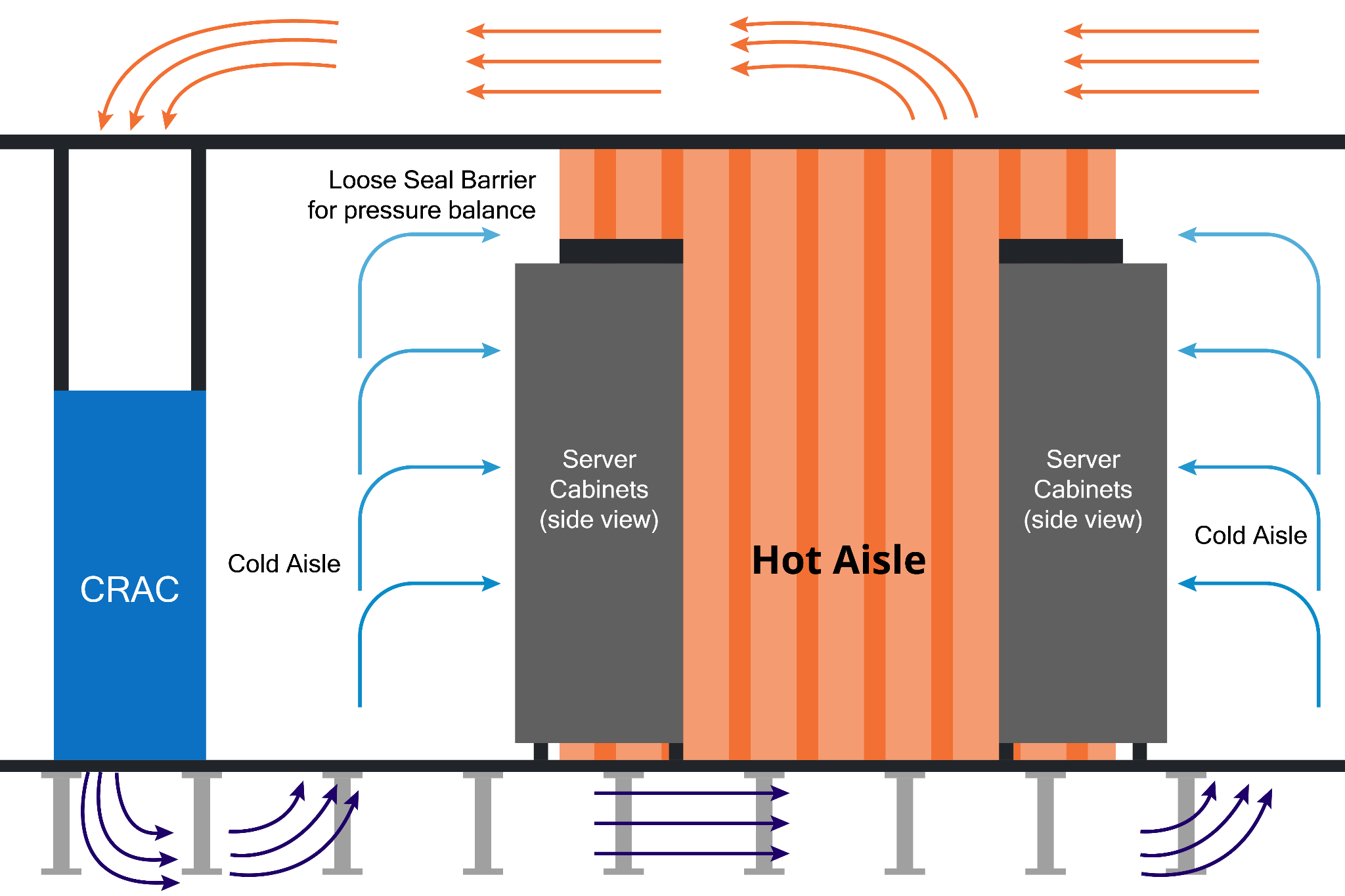

Servers and other data center hardware devices typically take in cool air from one side (often the front of the rack) and expel hot air from the other (usually the back). If the rows in the data center are all arranged in the same orientation, this creates an inefficient design because the hot air exhausted from one row is drawn into the intakes of the racks in the row behind it.

A better solution is to arrange the server racks so that adjacent rows have their fronts and backs facing each other. Aisles where the fronts of the equipment face each other will be “cold aisles” where cold air is supplied for intake, and aisles where the backs face each other will be “hot aisles” where hot air rises to subsequently be cooled and recirculated.

In a hot/cold aisle design with a raised floor, cold air circulates under the floor and rises through perforated floor tiles in the cold aisles. Hot air rises from the equipment through perforated ceiling tiles and returns to the cooling units through the ceiling plenum. The diagram below shows this arrangement.

Data center hot aisle / cold aisle design

A hot/cold aisle design can significantly improve efficiency, but it is suboptimal because there is no physical separation of the hot and cold aisles except for the racks themselves. The racks are not completely solid and do not go all the way from floor to ceiling, which allows mixing of the hot and cold air, especially above and below the hardware units.

A further enhancement of the hot/cold aisle design is to add containment, which uses physical barriers to keep hot and cold air apart. In cold aisle containment, barriers segregate the cold aisles from the rest of the data center; in hot aisle containment, the hot aisles are enclosed instead. Both techniques are effective, but they involve tradeoffs.

Cold aisle containment is generally easier to implement, especially in existing data centers, because it is easier to control air coming from the floor (the cold air) than air coming from many individual devices. However, with cold aisle containment, most of the data center becomes quite hot (because the cold air is segregated). It becomes necessary to ensure that all equipment is operating at acceptable temperatures, and a hot data center can also be uncomfortable for human operators.

Hot aisle containment has roughly the opposite benefits and drawbacks. Segregating the hot air exhaust keeps most of the data center cooler than in cold aisle containment. However, it can be more complicated and expensive to properly capture and direct the heat exhaust from many pieces of equipment in a contained flow path.

Hot aisle containment (source)

Follow these recommendations to optimize hot/cold aisle setup and containment:

- Implement hot/cold aisle design.

- Choose hot or cold aisle containment based on an assessment of overall data center requirements.

- Position the cooling units at the end of the aisles to streamline the flow of cold air into the racks.

- Make sure all cover plates on the racks forming the aisle are in place to avoid pressure drops; ensure that all other openings are sealed.

- Place perforated floor tiles only in the cold aisles, and place perforated ceiling (plenum) tiles in the hot aisles.

- Do not place perforated tiles within 1.5 m of the cooling unit.

- Use sensors and controllers to coordinate cooling, heating, and humidity control.

- Be mindful of cable management: Remove unused cables, and route cables under the floor of the hot aisle when overhead cable trays or busways are not used.
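Sensor data from the aisles can show whether containment is working. The sketch below flags racks whose inlet air is too warm or noticeably warmer than the supply air; a large rise usually means hot exhaust is recirculating into the cold aisle, for example through missing cover plates or unsealed openings. The 27 °C default matches the upper end of the ASHRAE recommended inlet range for most equipment classes, while the 3 K rise limit, rack names, and readings are hypothetical.

```python
from typing import Dict, List


def check_rack_inlets(inlet_temps_c: Dict[str, float],
                      supply_temp_c: float,
                      max_inlet_c: float = 27.0,
                      max_rise_k: float = 3.0,  # hypothetical tolerance
                      ) -> List[str]:
    """Flag racks whose inlet air is too warm or noticeably warmer than the supply air.

    A large rise between supply and rack inlet suggests hot exhaust air is
    mixing into the cold aisle.
    """
    alerts = []
    for rack, temp in sorted(inlet_temps_c.items()):
        if temp > max_inlet_c:
            alerts.append(f"{rack}: inlet {temp:.1f} °C above {max_inlet_c:.1f} °C limit")
        elif temp - supply_temp_c > max_rise_k:
            alerts.append(f"{rack}: inlet {temp - supply_temp_c:.1f} K above supply air")
    return alerts


# Hypothetical inlet readings with 21 °C supply air
for alert in check_rack_inlets({"R1": 22.0, "R2": 26.5, "R3": 28.0}, supply_temp_c=21.0):
    print(alert)
```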

Use of waste heat

Depending on the technology utilized, consider reusing waste heat to enhance efficiency. Here are some examples of how this can be done:

- Use waste heat to keep the backup generator room and the generator itself warm to avoid cold starts.

- Use waste heat in combination with an absorption chiller (if free cooling is not a preferred method of cooling). Absorption chillers are driven by heat rather than by a mechanical compressor, relying on absorption and desorption cycles to provide cooling. Using absorption chillers in combination with cogeneration power plants, such as gas turbines, can increase overall power-cooling system efficiency.

- Consider selling the waste heat to local businesses or homes to gain revenue; this will not increase the facility’s efficiency but will support sustainability.
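PUE does not change when heat is reused, so reuse is tracked with separate metrics: The Green Grid defines energy reuse effectiveness (ERE), and ISO/IEC 30134-6 defines the energy reuse factor (ERF). A minimal sketch of both ratios follows, with hypothetical annual figures; the exact measurement boundaries should be taken from those documents.

```python
def energy_reuse_effectiveness(total_facility_kwh: float, reused_kwh: float,
                               it_equipment_kwh: float) -> float:
    """ERE = (total facility energy - reused energy) / IT equipment energy."""
    return (total_facility_kwh - reused_kwh) / it_equipment_kwh


def energy_reuse_factor(reused_kwh: float, total_facility_kwh: float) -> float:
    """ERF = reused energy / total facility energy (0 means no reuse)."""
    return reused_kwh / total_facility_kwh


# Hypothetical annual figures in MWh: PUE is 1.55 here, with 2,000 MWh of heat reused
total, it, reused = 15_500.0, 10_000.0, 2_000.0
print(f"PUE {total / it:.2f}, ERE {energy_reuse_effectiveness(total, reused, it):.2f}, "
      f"ERF {energy_reuse_factor(reused, total):.3f}")
```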

Automation and monitoring

Any modern data center requires constant monitoring, data logging, and automated task scheduling. Consistently obtaining the right information and logging it properly helps the operations staff run the facility at optimal efficiency points. A data center infrastructure management (DCIM) system is imperative for collecting all of this information and structuring it for use by on-site personnel.

Active monitoring

Active monitoring of all equipment is becoming standard operating procedure in modern data centers. It involves continuous, real-time observation of performance and events at the individual component level. Examples of the information gathered as part of monitoring may include server CPU usage, room temperature, network traffic, power quality from the utility, voltage levels at the UPS, battery temperature, firewall status, and much more.

Some components, like servers and network switches, already have the ability to measure some of these parameters. However, power and cooling equipment generally will require additional sensors to retrieve the necessary information.

The best practice is to collect all of the relevant information and use a good data center infrastructure management (DCIM) system to analyze it and make smart decisions. This enables real-time data center optimization processes.

Follow these approaches:

- Implement smart metering on all equipment.

- Consider monitoring power flow at the individual socket level.

- Integrate monitoring systems with DCIM.
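As an example of the analysis a monitoring pipeline can run on metered data, the sketch below flags readings that deviate sharply from their recent baseline using a rolling z-score. This is one simple technique, not necessarily what any given DCIM product uses; the window size, the threshold k, and the PDU readings are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence


def flag_anomalies(readings: Sequence[float], window: int = 5,
                   k: float = 3.0) -> List[int]:
    """Return indices of readings that deviate from the preceding `window`
    readings by more than k sample standard deviations (rolling z-score)."""
    if window < 2:
        raise ValueError("window must contain at least two readings")
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        deviation = abs(readings[i] - mu)
        # A perfectly flat baseline has zero spread, so any change counts as anomalous.
        if (sigma == 0 and deviation > 0) or (sigma > 0 and deviation > k * sigma):
            flagged.append(i)
    return flagged


# Hypothetical PDU power readings in kW; the spike at index 6 should be flagged
pdu_kw = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 15.0, 10.0]
print(flag_anomalies(pdu_kw))  # [6]
```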

Regular maintenance

There are two types of maintenance: corrective and preventive. Corrective maintenance is performed only after an unexpected failure and typically requires very fast response times from both internal teams and external companies. Preventive maintenance involves routine inspection and correction of issues and potential problems before they lead to downtime.

Preventive maintenance is traditionally carried out at least twice a year, depending on the type of equipment. With the rise of machine learning (ML), however, traditional preventive maintenance is increasingly being replaced by predictive maintenance, in which ML is applied to gathered data to determine optimal maintenance intervals.

Take these steps for maximum efficiency:

- Plan and log all maintenance activities.

- Keep critical components and inventory in stock on the data center campus to enable fast response by personnel in case of failure of components. This can enable much faster problem correction by both in-house and external technicians.

- Perform external site energy auditing once a year.

- Consider the possible use of predictive maintenance, or a combination of routine scheduled and predictive maintenance, as appropriate.
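Predictive maintenance ranges from simple trend extrapolation to full ML models. As the simplest illustration (a linear trend, not ML), the sketch below fits a least-squares line to a hypothetical degradation metric, such as battery internal resistance, and estimates the day on which it will cross a replacement threshold. The function name, readings, and threshold are hypothetical.

```python
from typing import Optional, Sequence


def day_threshold_reached(days: Sequence[float], values: Sequence[float],
                          threshold: float) -> Optional[float]:
    """Fit a least-squares line to a degradation metric and estimate the day on
    which it reaches the threshold. Returns None if the trend is not rising."""
    n = len(days)
    if n < 2 or n != len(values):
        raise ValueError("need at least two paired observations")
    mean_x = sum(days) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, values))
    if sxx == 0:
        raise ValueError("observations must span more than one day")
    slope = sxy / sxx
    if slope <= 0:
        return None  # no upward degradation trend to extrapolate
    intercept = mean_y - slope * mean_x
    return (threshold - intercept) / slope


# Hypothetical quarterly readings of internal resistance (milliohms)
print(day_threshold_reached([0, 30, 60, 90], [3.0, 3.1, 3.2, 3.3], threshold=3.6))
```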

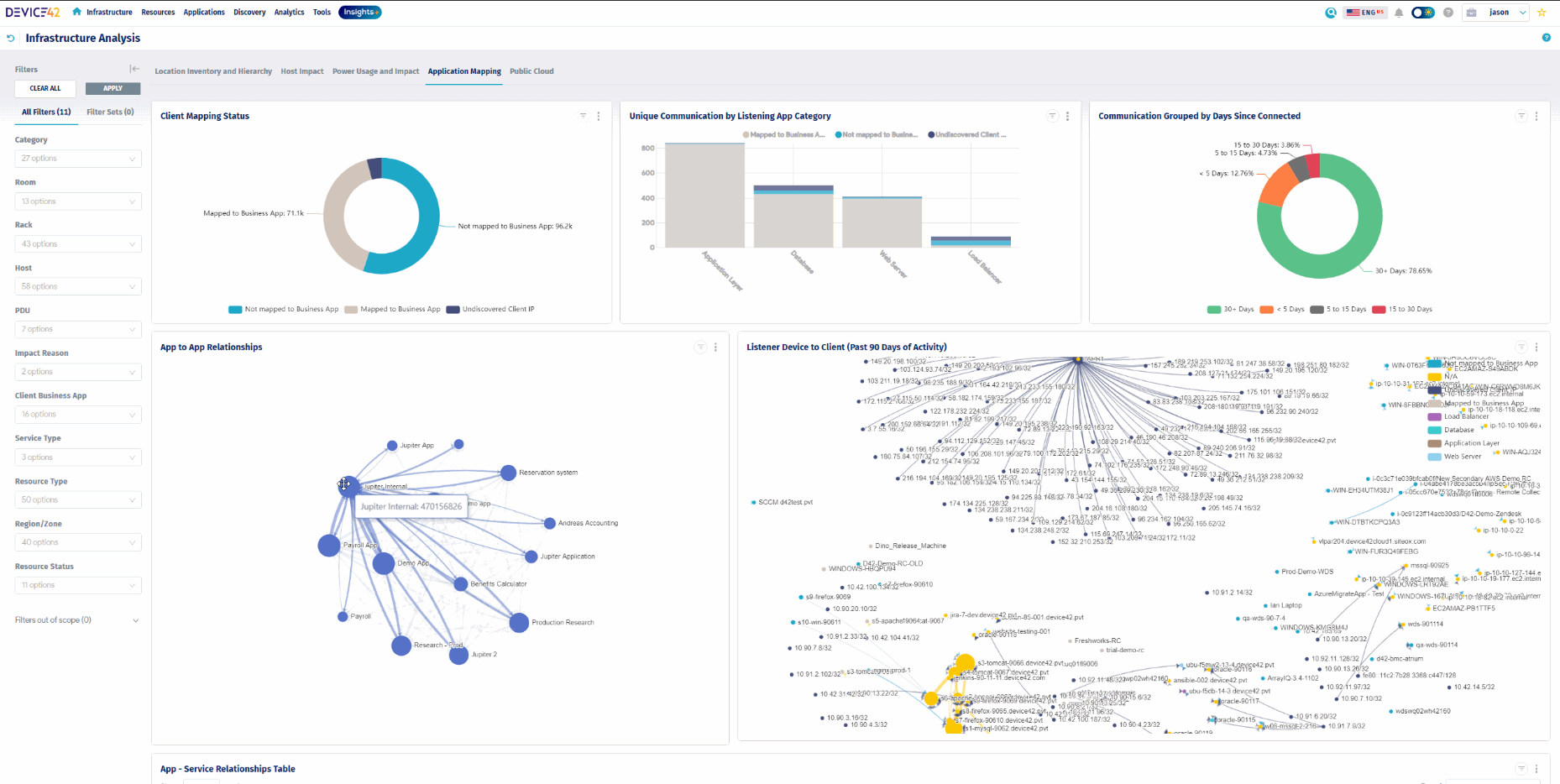

Data center infrastructure management (DCIM)

DCIM software has rapidly moved from the “would be nice” category to “must have” because modern data centers are too large and complex to manage without sophisticated tools. DCIM enables successful data center operation, combining IT management, facility management, and automation.

DCIM tools provide a single interface through which all aspects of the data center infrastructure can be controlled, adjusted, and optimized. They allow for monitoring, measuring, and managing power use, cooling levels, space allocation, and asset utilization.

An example of a DCIM dashboard from Device42. (source)

Some of the best practices for applying DCIM include the following:

- Before implementing, be sure to scope out and define the requirements of the system. These tools are very complex and some can have a significant number of different modules, so it is prudent to decide which ones you need in order to manage cost and ensure that the system is user-friendly.

- Consider solutions based on your requirements for scalability, flexibility, ease of use, and integration.

- Ensure that all automation parameters are set up properly.

- Make sure all data is up to date.

- Implement appropriate procedures and train personnel to assure consistency and accuracy in data management across the entire organization.

- Monitor, analyze, and report data regularly.
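Keeping data up to date can be partly automated by checking when each asset record was last updated. The sketch below assumes a hypothetical export of asset IDs and last-update timestamps from the DCIM system (it does not use any specific product's API) and flags records older than a hypothetical 90-day limit.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def stale_assets(last_updated: Dict[str, datetime], now: datetime,
                 max_age: timedelta = timedelta(days=90)) -> List[str]:
    """Return asset IDs whose records have not been updated within max_age."""
    return sorted(asset for asset, ts in last_updated.items() if now - ts > max_age)


# Hypothetical asset records exported from a DCIM system
records = {"srv-01": datetime(2024, 5, 20), "pdu-07": datetime(2023, 12, 1)}
print(stale_assets(records, now=datetime(2024, 6, 1)))
```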

Relevant standards

Some of the most important standards, guidelines, and best practices for improving data center efficiency are as follows:

- ASHRAE Standard 90.4

- Energy Star for Data Centers

- The Green Grid

- ISO/IEC 30134 series

- TIA-942

- Uptime Institute Standards

- BICSI-002

- European Code of Conduct for Data Centers

- TSI-EN50600

Conclusion

Data center operation is a multidisciplinary task that involves many different stakeholders, such as IT engineers, electrical and mechanical engineers, and other professionals. In the sections above, we laid out four important parts of the infrastructure: IT load, electrical powertrain, cooling systems, and automation and monitoring.

Since everything starts with the IT equipment, it is important to improve the efficiency of IT loads, which will lead to cascading savings across the entire infrastructure. The electrical powertrain is a discipline that requires balancing efficiency and uptime, necessitating careful design. Cooling systems are responsible for much of the power use in a data center, so efficiency is particularly important here; optimization to reduce the use of power can be achieved through the smart selection of cooling technologies and the use of containment techniques. Active monitoring and automation provide relevant information about the whole data center and its components to ensure an accurate understanding of system state and enable continual improvement.

Finally, data center infrastructure management (DCIM) ties everything together. The main goal of DCIM use is to streamline all of the information gathered from the many different components of the data center, so it can be analyzed to enable decision-making to improve efficiency as an ongoing process.