A Free Guide to Data Center Management

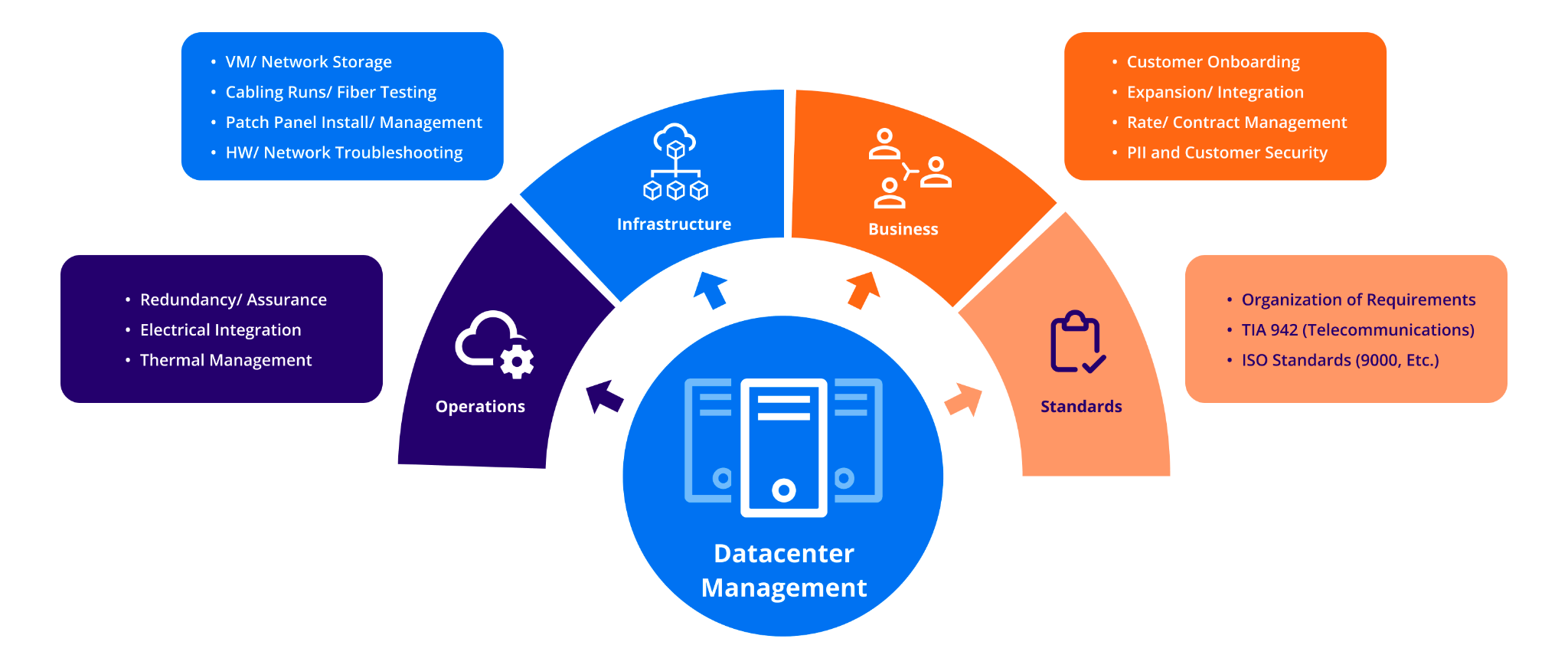

Data center infrastructure management teams (DCIM teams) are responsible for the electrical, thermal, and network management of a data center. Depending on the use case for the data center, a management team may require a variety of skill sets and broad technical expertise to provide the necessary maintenance support.

In some cases, the DCIM team will also act as the arbiter of operational, environmental, and security standards, enforced through host/tenant agreements that define the layout of specific racks or floor space in a data center. In other cases, a DCIM team will take on a business management role, seeking to acquire more tenants and providing services tailored to ensure customer satisfaction.

Executive summary of data center management

No two data centers are exactly the same, and the roles and responsibilities involved in managing them are nearly as varied. That said, managing a data center fundamentally relies on both the individual members of the management team and a clearly defined statement of work. Almost all data centers require a team that is skilled and experienced in managing electrical, thermal, and network systems. Through the lens of these three subsystems, this article identifies three specific arrangements and discusses the roles and responsibilities of a DCIM team in each.

| Topic | Summary |
|---|---|
| What is data center management? | Data center management is the organization of roles and responsibilities for a DCIM team to maintain the operations of a facility. |
| DCIM team requirements | DCIM team requirements largely consist of the needs of the electrical, thermal, and networking infrastructure subsystems of the data center. |
| Electrical infrastructure | Power systems, constituent cables/outlets, and redundancy systems |
| Network infrastructure | Local networks, including routers and switches, data storage, timing systems, and firewall security |
| Thermal infrastructure | Cooling, heating, and overall environmental management of a facility |
| Arrangements | The three arrangements we will discuss are individual owner/operator, colocation (COLO), and nested/federated data centers, each of which involves unique DCIM team tasks. |

What is data center management?

A data center is functionally a physical location where data can be stored, processed, transmitted, or hosted. Data center management ensures that all the tasks involved in operating such a facility can be performed effectively and mitigates operational risk by setting strict policies and procedures for operations and maintenance (O&M).

The way a data center is managed depends on its specific use case. Each use case imposes specific needs on a DCIM team and often directly influences the skill set the team requires. Three example arrangements, distinguished by ownership structure, are an individual owner/operator, multiple colocated users/customers (COLO), and nested or federated systems within a larger facility. In general, these organizational structures can be understood in terms of scale, customer/tenant agreements, and the overarching policies or standards applied to users.

In each of these cases, the rules, requirements, and expectations of a DCIM team are unique and varied, but there are several requirements that are consistent throughout:

- Maintaining or installing thermal, electrical, and networking systems

- Maintaining system operations via operational redundancy

- Providing various levels of tiered troubleshooting support

Maintenance and installation of thermal, electrical, and networking systems each impose their own requirements on a DCIM team. The required redundancy and levels of tiered support are usually byproducts of both the data center's use case and the specific hardware/software components of its systems. In other words, the data center's infrastructure and what it is used for dictate the level of redundancy and troubleshooting support the DCIM team must provide.

Major data center infrastructure subsystems

As mentioned above, the three main types of infrastructure in a data center are electrical, thermal, and network. Each arrangement involves a different expectation of support for each of these subsystems, ranging from the actual architectural design to high-level maintenance. That said, an overview of the requirements and constraints associated with these systems illustrates the need for a proficient DCIM team and shows why a clearly defined scope is important when the team is first formed.

Electrical infrastructure

Support of the electrical infrastructure often necessitates a working knowledge of the electrical system, from the grid down to individual circuits. Strict policies for power layout and overall system loading are fundamental to all data center configurations.

Depending on the arrangement, data center management teams are responsible for establishing a redundancy plan that may incorporate in-rack equipment, alternate feeds/circuits, and/or an uninterruptible power supply (UPS). Lastly, a DCIM team will often be required to monitor existing power/electrical systems for troubleshooting and maintenance.

Electrical utility relationships

When a DCIM team is brought in determines how much it must interact with, or rely on, external utility grid stakeholders. This could mean interfacing with agents or developers working for a power utility or, more generally, managing the power bill/account.

At the inception of a data center, a management team may help plan the power budget and the necessary grid-side requirements. Meeting the total load of the system may require external transformers, on-pole capacitors, or even specifically sized power lines feeding the building. The DCIM team may then be involved in specifying transformers and power controllers within the data center, intended to separate voltage by phase or otherwise provide additional safeguards within the facility.
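As a rough illustration of the power-budget arithmetic, the Python sketch below sums hypothetical per-rack IT loads and converts the total to a transformer rating in kVA. The `RackLoad` class, the power factor, and the headroom margin are all assumptions for illustration; a real budget would also include cooling and other non-IT loads and follow utility and local code requirements.

```python
from dataclasses import dataclass


@dataclass
class RackLoad:
    name: str
    it_load_kw: float  # expected steady-state IT load for the rack (kW)


def required_transformer_kva(racks, power_factor=0.95, headroom=0.25):
    """Rough apparent-power (kVA) rating needed to feed a set of racks.

    Hypothetical defaults, not design values:
      power_factor: real power (kW) divided by apparent power (kVA)
      headroom: fractional margin reserved above the summed load
    Cooling, lighting, and other non-IT loads are deliberately ignored.
    """
    if not 0 < power_factor <= 1:
        raise ValueError("power_factor must be in (0, 1]")
    if headroom < 0:
        raise ValueError("headroom must be non-negative")
    total_kw = sum(rack.it_load_kw for rack in racks)
    return total_kw / power_factor * (1 + headroom)  # kVA = kW / PF, plus margin


# Ten hypothetical 8 kW racks at a power factor of 0.8 with 25% headroom.
racks = [RackLoad(f"R{i:02d}", 8.0) for i in range(10)]
print(round(required_transformer_kva(racks, power_factor=0.8), 1))  # 125.0
```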

Computer power control (CPC) devices can often serve this dual purpose by being a central hub for powering banks of racks or entire rooms/floors of a data center. CPCs can also be used as an effective monitoring tool because they can provide status to a central monitoring hub for DCIM team review.

Power layout and design

Utility grid capabilities and the overall power budget of the data center ultimately dictate the overall power layout and system loading policies. Layout may consist of determining what underfloor circuits are required or available for each bank of racks. This would include outlet types, voltage/phase types, and also any specific limitations on the total power budget.

Depending on the use case, some key considerations would include answering these questions:

- How many total racks will be used in this data center?

- Will the overall layout be budgeted for growth, and what growth rate is expected?

- What is the nature of the components in the racks in terms of load and source requirements? Is there a minimum requirement for the number of circuits per box/rack?
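The growth question can be made concrete with a small projection. The sketch below assumes a constant compound annual growth rate and a fixed load per rack, both hypothetical simplifications, and reports the first year in which projected load would exceed the power budget.

```python
import math


def projected_racks(initial_racks, annual_growth, years):
    """Rack count after `years` of compound growth, rounded up to whole racks."""
    raw = initial_racks * (1 + annual_growth) ** years
    # Round away tiny floating-point error before taking the ceiling.
    return math.ceil(round(raw, 9))


def first_year_over_budget(initial_racks, annual_growth, kw_per_rack, budget_kw, max_years=50):
    """First year (0 = today) in which projected load exceeds the power budget, or None."""
    for year in range(max_years + 1):
        load_kw = projected_racks(initial_racks, annual_growth, year) * kw_per_rack
        if load_kw > budget_kw:
            return year
    return None


# Hypothetical: 40 racks today, 25% annual growth, 5 kW per rack, 400 kW budget.
print(first_year_over_budget(40, 0.25, 5.0, 400.0))  # 4
```

With these numbers the load is 395 kW in year 3 and 490 kW in year 4, so the budget would need revisiting before the fourth year of growth.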

Power layout and system loading policies feed directly into thermal considerations that will be discussed later in this article. They are also related to redundancy requirements for individual racks and the data center as a whole.

Data center and rack redundancy

Redundancy must be a primary consideration in any data center configuration, because the whole purpose of a data center is to provide reliable data storage/processing capabilities. Redundancy can be described at the level of individual racks, banks of racks, and the data center overall.

Individual racks may have redundant or dual-fed power distribution units (PDUs) or automatic transfer switches (ATSes), allowing for the continued operation of rack components despite the failure of one source. Certain PDUs are also suitable for the control of multiple racks, typically described as banks of racks, because in low-load situations, a large PDU can source a substantial number of components. PDUs also provide the ability to remotely monitor or activate/deactivate, so if there is a system fault, power can be rerouted or shunted until the issue is resolved. ATSes, in contrast, provide automatic switching from one source to another should a fault arise, but they are almost always intended for a single rack or set of devices.
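To see why dual feeds help, the sketch below computes availability for series and parallel paths. It assumes the two feed paths fail independently (shared upstream equipment breaks this assumption), and every availability figure in it is hypothetical rather than measured.

```python
def series_availability(*availabilities):
    """Availability of a chain in which every element must work (e.g. feed -> PDU)."""
    result = 1.0
    for a in availabilities:
        result *= a
    return result


def parallel_availability(*availabilities):
    """Availability when any one of several paths suffices (e.g. A and B feeds).

    Assumes path failures are statistically independent.
    """
    all_fail = 1.0
    for a in availabilities:
        all_fail *= 1.0 - a
    return 1.0 - all_fail


# Hypothetical: each feed path is a circuit (0.999) in series with a PDU (0.999).
path = series_availability(0.999, 0.999)
single_fed = path
dual_fed = parallel_availability(path, path)
# An ATS feeding single-corded equipment sits in series with the redundant paths.
dual_fed_via_ats = series_availability(dual_fed, 0.9999)
print(f"{single_fed:.6f} {dual_fed:.6f} {dual_fed_via_ats:.6f}")  # 0.998001 0.999996 0.999896
```

The last step shows the design trade-off: the ATS adds a component in series, so its own reliability caps the benefit of the redundant feeds.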

For a large data center, overall redundancy could take the form of multiple substations or external grid power sources. Because a single substation can feed an entire data center, this implies a switching plan that limits the impact of a substation failure by transferring the load to an alternate substation.

Uninterruptible power supplies (UPSes) allow continued operation during a large-scale outage or fault event. Sizing a UPS depends on the total expected load and the required bridging time before the fault is resolved. For example, if multiple substations are used, the bridging period would be the time required to switch the load from the failed substation to an alternate one, likely plus a buffer for the startup time of the most important equipment or rack elements. At the scale of a substation, a UPS would likely need to be in the megawatt range, whereas a UPS for an individual rack or bank would be much smaller.
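The bridging-time reasoning above translates into a simple energy estimate: usable stored energy is roughly load times bridging time, divided by inverter efficiency. The sketch assumes a hypothetical efficiency and ignores battery aging, temperature derating, and discharge-rate effects, which a real UPS specification would need to cover.

```python
def ups_sizing(load_kw, switch_time_s, startup_buffer_s, inverter_efficiency=0.92):
    """Return (minimum power rating in kW, minimum usable stored energy in kWh).

    The bridging period is the switching time plus a startup buffer.
    inverter_efficiency is a hypothetical figure, not a vendor value.
    """
    if not 0 < inverter_efficiency <= 1:
        raise ValueError("inverter_efficiency must be in (0, 1]")
    bridging_s = switch_time_s + startup_buffer_s
    energy_kwh = load_kw * bridging_s / 3600 / inverter_efficiency
    return load_kw, energy_kwh


# Hypothetical 2 MW load, 30 s transfer to an alternate substation, 90 s startup buffer.
power_kw, energy_kwh = ups_sizing(2000, 30, 90)
print(f"{power_kw} kW for at least {energy_kwh:.1f} kWh")
```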

Electrical infrastructure maintenance

When it comes to maintenance structure or plans, a tier structure is often used to identify the scale of work or intervention expected of a maintainer. The tiers escalate in significance as the tier level increases; they identify the roles and responsibilities of the maintainer and dictate the necessary skill level of the DCIM team agent.

- Tier 1: Simple Intervention. Tier-1 support does not require the disassembly or breakdown of any rack components. It does not require any specialized training or expertise, and the necessary consumables would neither be considered line-replaceable units (LRUs) nor be tracked in a bill of materials or parts list. This tier encompasses components like screws, lightbulbs, air filters, and fans.

- Tier 2: Less-Complex Intervention. Tier-2 support may be preventative or procedural and does not require highly specialized operators/maintainers. Unlike Tier 1, Tier 2 may require some safety training, such as voltage training or familiarization with working in confined spaces or at heights. A maintenance schedule would also be required for Tier-2 support.

- Tier 3: Complex Intervention. Tier-3 support requires specialized technicians who can diagnose and troubleshoot problems within a system. Tier 3 may also require modifying or configuring components that are directly related to the operations of a data center system, and it could include LRU swapping as well as testing/verifying that the installed equipment meets all user specifications.

- Tier 4: Complex, Highly Important Intervention. As with Tier 3, a specialized technician would be required for this type of maintenance. Whether preventative or corrective, Tier-4 support applies to some of the most important equipment in the building, such as the UPS required for system reliability or a server that is the primary storage device for a network.

- Tier 5: Vendor Intervention. Tier-5 level maintenance support would ultimately require the intervention of the product vendor that either delivered or designed the system equipment.
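One way to apply the tier definitions consistently is to route each maintenance task by a few yes/no attributes. The `MaintenanceTask` fields and the precedence order in `classify` below are assumptions that loosely encode the five tiers described above, not a standard scheme.

```python
from dataclasses import dataclass
from enum import IntEnum


class Tier(IntEnum):
    SIMPLE = 1        # no disassembly, no special training (screws, bulbs, filters, fans)
    LESS_COMPLEX = 2  # procedural/preventative; may need safety training and a schedule
    COMPLEX = 3       # specialist diagnosis, configuration, LRU swaps, verification
    CRITICAL = 4      # specialist work on the most important equipment
    VENDOR = 5        # requires the product vendor


@dataclass
class MaintenanceTask:
    # Hypothetical attributes used to route a task to a tier.
    description: str
    needs_vendor: bool = False
    critical_equipment: bool = False     # e.g. the UPS or a primary storage server
    needs_specialist: bool = False
    needs_disassembly: bool = False
    needs_safety_training: bool = False  # e.g. voltage, confined-space, or work-at-height


def classify(task: MaintenanceTask) -> Tier:
    """Return the highest tier whose (assumed) criteria the task meets."""
    if task.needs_vendor:
        return Tier.VENDOR
    if task.needs_specialist and task.critical_equipment:
        return Tier.CRITICAL
    if task.needs_specialist:
        return Tier.COMPLEX
    if task.needs_disassembly or task.needs_safety_training:
        return Tier.LESS_COMPLEX
    return Tier.SIMPLE


print(classify(MaintenanceTask("replace air filter")).name)  # SIMPLE
print(classify(MaintenanceTask("repair UPS", critical_equipment=True, needs_specialist=True)).name)  # CRITICAL
```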

Depending on the use case, different tiers of support would be expected from the data center management team. In a host/tenant arrangement, the host DCIM team may not be responsible for the electrical status of individual racks, especially if those racks are not owned/operated by the host organization. However, the underfloor cabling, utility grid connection, and broad electrical status of the site would still fall under the host’s responsibility.

In any case, evaluating the need for intervention and the respective tier level of support would rely on monitoring tools. These may include simple methods, such as having fire alarms and security systems that can monitor activity in a data center. They may also involve more complex tools related to electrical, thermal, or networking systems specifically.

In terms of electrical systems, these monitoring tools may include voltage/current monitors on individual components or racks. More complex electrical monitoring may allow for the centralized control and operations of system electrical distribution (as described with the PDUs earlier), where the DCIM team would be able to evaluate the current state of the equipment and electrical systems.
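A minimal sketch of such an electrical monitoring check follows. It assumes a 208 V nominal supply, a ±5% voltage tolerance, and the commonly used practice of keeping continuous load at or below 80% of the breaker rating; the `CircuitReading` structure is hypothetical, and a real deployment would pull readings from PDUs or CPCs rather than a hard-coded list.

```python
from dataclasses import dataclass


@dataclass
class CircuitReading:
    rack: str
    voltage_v: float
    current_a: float
    breaker_rating_a: float


def check_circuit(reading, nominal_v=208.0, voltage_tolerance=0.05, max_load_fraction=0.8):
    """Return alert strings for one rack circuit reading (empty list if healthy).

    nominal_v and voltage_tolerance are assumed site values.
    """
    alerts = []
    if abs(reading.voltage_v - nominal_v) > voltage_tolerance * nominal_v:
        alerts.append(f"{reading.rack}: {reading.voltage_v:.1f} V is outside "
                      f"±{voltage_tolerance:.0%} of {nominal_v:.0f} V")
    if reading.current_a > max_load_fraction * reading.breaker_rating_a:
        alerts.append(f"{reading.rack}: {reading.current_a:.1f} A exceeds "
                      f"{max_load_fraction:.0%} of its {reading.breaker_rating_a:.0f} A breaker")
    return alerts


readings = [
    CircuitReading("A01", 207.5, 12.0, 30.0),
    CircuitReading("A02", 195.0, 26.0, 30.0),
]
for r in readings:
    for alert in check_circuit(r):
        print(alert)
```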

Thermal infrastructure

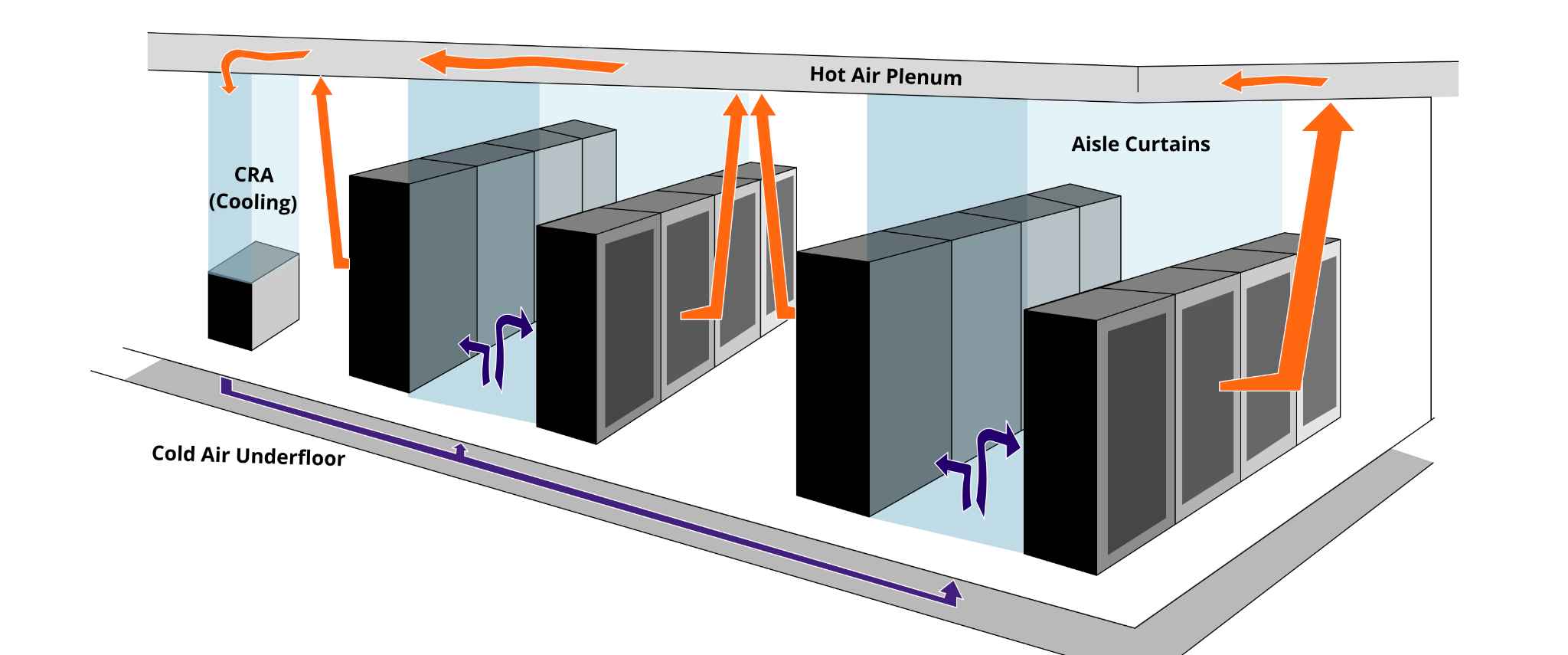

Support of the thermal infrastructure is connected to the specific layout of the data center. The most typical layout of a data center utilizes a raised floor/plenum arrangement. In this setup, the DCIM team would likely be required to design and/or manage the airflow and temperature characteristics of each rack or bank of racks.

With or without a raised floor, a data center will most typically use a hot-aisle / cold-aisle layout, as described below. A data center management team would be responsible for maintaining this layout and all associated requirements. HVAC installation and maintenance would also be a primary requirement. Lastly, monitoring and the structuring of tiered support would be major considerations for thermal infrastructure.

Raised floors / plenum

In most data centers, a raised floor is used to pass cool air under racks and up through vents on the tile floor. This is directly related to the hot-aisle / cold-aisle configuration that will be described next, but the installation and maintenance of a raised floor would require both the necessary tiles and physical hardware and an overall understanding of the available air pressure in the system.

In a raised-floor system, large cooling units recirculate the air warmed by the rack equipment: they cool it and force it back under the floor, where it is drawn up through the vents again. The pressure created by the cooling system must be balanced against the design of the vent egress points, specifically the number of vents, the diameter of any openings or conduit portals, and the total volume of the underfloor region compared with the open-air volume of the data center.
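The pressure balance can be approximated with the orifice equation, Q = C_d · A · √(2ΔP/ρ), applied to the combined open area of the tiles and cutouts. The sketch below solves for the steady-state underfloor pressure at which the openings pass the full cooling supply. The discharge coefficient, air density, tile open area, and supply figure are assumed values, and the model ignores leakage, underfloor obstructions, and the volume effects mentioned above.

```python
import math

AIR_DENSITY = 1.2  # kg/m^3, approximate for room-temperature air near sea level


def circular_area_m2(diameter_m):
    """Open area of a round cutout or conduit portal."""
    return math.pi * (diameter_m / 2) ** 2


def equilibrium_pressure_pa(supply_m3s, opening_areas_m2, discharge_coeff=0.61, rho=AIR_DENSITY):
    """Underfloor static pressure at which all openings together pass the cooling supply.

    Inverts Q = Cd * A * sqrt(2 * dP / rho) for the combined open area.
    """
    effective_area = discharge_coeff * sum(opening_areas_m2)
    if effective_area <= 0:
        raise ValueError("need at least one opening with positive area")
    return 0.5 * rho * (supply_m3s / effective_area) ** 2


def opening_flow_m3s(pressure_pa, area_m2, discharge_coeff=0.61, rho=AIR_DENSITY):
    """Flow through a single opening at a given underfloor pressure."""
    return discharge_coeff * area_m2 * math.sqrt(2 * pressure_pa / rho)


# Hypothetical: 10 m^3/s of supply air, 40 tiles with 0.09 m^2 open area each,
# plus four cable cutouts 0.1 m in diameter.
openings = [0.09] * 40 + [circular_area_m2(0.1)] * 4
p = equilibrium_pressure_pa(10.0, openings)
print(f"{p:.1f} Pa, {opening_flow_m3s(p, 0.09):.3f} m^3/s per tile")
```

Because pressure scales with the inverse square of total open area, doubling the open area cuts the equilibrium pressure to one quarter, which is why uncontrolled cutouts can reduce the airflow each tile delivers.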

The plenum, or upper ceiling area of the data center, serves two main functions. First, it houses the air handlers for the HVAC system, and it can factor into the pressure equations for the total flow rates of the underfloor system. Second, it houses the fire-protection equipment. For air handling, the plenum will likely be fitted with the filtration devices needed to keep the environment clear of harmful debris or pollutants, along with vents that receive the warm air rising off the hot aisles and direct it back through the cooling equipment for underfloor distribution.

Hot aisle / cold aisle

As stated previously, the hot aisle / cold aisle configuration is a typical layout for data center racks because almost all hardware components have internal cooling mechanisms to allow air to flow across high-load computational devices. Servers or other data processing equipment will therefore have a “cold” side where cool air is expected to be available to the rack and a “hot” side where the warm air can exit.

Regardless of the arrangement, the host DCIM team is responsible for establishing the designated aisles in the data center, ensuring that no individual rack disturbs the designated temperature differential between the two aisles and that the differential is maintained by effectively separating the two air channels. Plastic walls or curtains are the typical solution for physically separating the aisles, though a simpler approach of ensuring that all racks (and their equipment) are oriented consistently can also be used. Tenant DCIM teams will likely only be responsible for meeting the specified layout or policy, though in a nested data center, the tenant team will gauge thermal load on a larger scale.
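The aisle policy lends itself to a simple automated check on rack inlet and exhaust temperatures. In the sketch below, the minimum temperature rise and maximum inlet temperature are assumed policy values, and a low rise is only a hint of hot/cold air mixing, since a lightly loaded rack produces one too.

```python
from dataclasses import dataclass


@dataclass
class RackTemps:
    rack: str
    inlet_c: float    # measured at the cold-aisle face of the rack
    exhaust_c: float  # measured at the hot-aisle face of the rack


def aisle_problems(readings, min_delta_c=8.0, max_inlet_c=27.0):
    """Flag racks that may break the hot-aisle / cold-aisle policy.

    min_delta_c and max_inlet_c are assumed policy values for illustration.
    """
    problems = []
    for r in readings:
        delta = r.exhaust_c - r.inlet_c
        if delta < 0:
            problems.append((r.rack, "inlet hotter than exhaust; rack may be reversed"))
        elif delta < min_delta_c:
            problems.append((r.rack, f"delta-T only {delta:.1f} C; possible hot/cold air mixing"))
        if r.inlet_c > max_inlet_c:
            problems.append((r.rack, f"inlet {r.inlet_c:.1f} C exceeds {max_inlet_c:.1f} C"))
    return problems


readings = [RackTemps("B01", 22.0, 35.0), RackTemps("B02", 34.0, 23.0), RackTemps("B03", 24.0, 27.0)]
for rack, issue in aisle_problems(readings):
    print(rack, issue)
```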

Heating, ventilation, and air conditioning (HVAC) system

An HVAC system is a necessary feature of any data center. Its specification, installation, and maintenance are typically the responsibility of the host or individual owner/operator data center management team. Even when this responsibility formally falls outside the DCIM team, HVAC is so central to data center management that the DCIM team will usually either be fully responsible for the design or play a large role in determining specifications.

Specifications may include the airflow rate of the ventilation and air conditioning systems, which often depends on the total heat exhausted from each rack. Another example is the filtration requirements for air handlers, which must comply with whatever ISO standards the data center management team is following.
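The dependence of airflow on exhausted heat follows from a sensible-heat balance, Q = P / (ρ · c_p · ΔT). The sketch below uses approximate properties for dry air together with hypothetical rack loads and temperature rise; it is a first-order estimate, not a substitute for an HVAC design calculation.

```python
AIR_DENSITY = 1.2           # kg/m^3, approximate
AIR_SPECIFIC_HEAT = 1005.0  # J/(kg*K), approximate for dry air
M3S_TO_CFM = 2118.88        # cubic feet per minute in one m^3/s


def required_airflow_m3s(heat_w, delta_t_k, rho=AIR_DENSITY, cp=AIR_SPECIFIC_HEAT):
    """Airflow needed to carry away heat_w watts with a delta_t_k temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (rho * cp * delta_t_k)


rack_loads_w = [6_000, 10_000, 12_000]  # hypothetical rack heat loads
delta_t = 12.0                          # assumed inlet-to-exhaust rise, K
for load in rack_loads_w:
    q = required_airflow_m3s(load, delta_t)
    print(f"{load / 1000:.0f} kW rack: {q:.3f} m^3/s ({q * M3S_TO_CFM:.0f} CFM)")
```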

Thermal maintenance

As with electrical maintenance, thermal maintenance may use a number of sensors and monitoring tools to ensure that the constituent systems are operating correctly. In terms of tier support, maintenance staff and the DCIM team of the host data center will be responsible for Tiers 1-4 and will need to arrange Tier-5 support for commercial off-the-shelf (COTS) components. This means specialized technicians should either be available within the DCIM team to troubleshoot and repair the thermal issues discussed in this section, or the team should have a mechanism for calling in external support from product vendors.

Network infrastructure

Support of the network infrastructure of a data center depends on the required network types and the signal/data types used for data storage, processing, and transmission. Most, if not all, data centers require some local network for data transmission among rack components. This may take the form of physical Ethernet cables connecting all relevant devices through localized hubs or switches. It could also be implemented via wireless or virtual (for example, cloud-based) networks that allow data to be transmitted within the data center and beyond.

In any case, the maintenance or organizational responsibility of a DCIM team would center on specifying what is needed to operate these networks and then providing the tools/resources necessary to monitor and fix any issues that may occur.

Required networks for the data center

Networks within a data center may be defined, crudely, by the distinction between physical hardware (physical servers or storage) and virtual hardware (virtual servers or cloud storage). In both cases, there will be a combination of hardware and software that make up the communication grid and allow for operations.

Physical networks rely primarily on direct connections among boxes and racks, often centered on large switches or hubs that multiplex multiple digital data streams. Within Ethernet, each frame carries a header with source and destination addresses that switches use to deliver it to the right device, and higher-level protocols (for example, client/server exchanges) add their own header formats and data structures so that data can be transmitted and identified.
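To make header-based identification concrete, the sketch below uses Python's standard `struct` module to split an Ethernet II header into destination MAC, source MAC, and EtherType. The sample frame is fabricated for illustration, and the parser ignores details such as 802.1Q VLAN tags (which shift the EtherType by four bytes) and the frame check sequence.

```python
import struct

ETHERTYPE_NAMES = {0x0800: "IPv4", 0x0806: "ARP", 0x86DD: "IPv6", 0x8100: "802.1Q VLAN tag"}


def format_mac(raw: bytes) -> str:
    """Render six raw bytes as a colon-separated MAC address."""
    return ":".join(f"{b:02x}" for b in raw)


def parse_ethernet_header(frame: bytes) -> dict:
    """Split the 14-byte Ethernet II header into destination, source, and EtherType."""
    if len(frame) < 14:
        raise ValueError("frame is shorter than an Ethernet II header")
    # "!" = network (big-endian) byte order; 6s = 6 raw bytes; H = unsigned 16-bit int.
    dst, src, ethertype = struct.unpack("!6s6sH", frame[:14])
    return {
        "dst": format_mac(dst),
        "src": format_mac(src),
        "ethertype": ethertype,
        "payload_type": ETHERTYPE_NAMES.get(ethertype, "unknown"),
        "payload": frame[14:],
    }


frame = bytes.fromhex("ffffffffffff" "020000000001" "0806") + bytes(28)
header = parse_ethernet_header(frame)
print(header["dst"], header["src"], header["payload_type"])  # ff:ff:ff:ff:ff:ff 02:00:00:00:00:01 ARP
```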

With copper Ethernet cabling, time delay, queuing, and electromagnetic interference (EMI) from nearby power or switching equipment may be considerations for the DCIM team. Fiber optic connections support much higher data rates and are less susceptible to interference, but they require optical transceiver hardware that copper links do not.

The DCIM team in an individual owner/operator configuration or a host organization would be responsible for running all cables, either under the floor or in overhead cable trays. The DCIM team also tests all cables before and after installation and sets up the shared switching/hub equipment needed to handle all data transfers.
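Cable testing for fiber links is often framed as a loss budget: expected insertion loss (fiber attenuation plus connector and splice losses) compared against the optical power budget of the transceivers. The attenuation, connector loss, transceiver power levels, and minimum margin in the sketch below are assumed values for illustration; actual limits come from the cabling standard and transceiver datasheets in use.

```python
def link_loss_db(length_km, fiber_db_per_km, connector_pairs, connector_loss_db,
                 splices=0, splice_loss_db=0.0):
    """Expected insertion loss: fiber attenuation plus connector and splice losses."""
    return (length_km * fiber_db_per_km
            + connector_pairs * connector_loss_db
            + splices * splice_loss_db)


def link_margin_db(tx_power_dbm, rx_sensitivity_dbm, loss_db):
    """Power budget (transmit power minus receiver sensitivity) left after link loss."""
    return (tx_power_dbm - rx_sensitivity_dbm) - loss_db


def cable_passes(measured_loss_db, tx_power_dbm, rx_sensitivity_dbm, min_margin_db=3.0):
    """Post-installation check: the measured loss must leave at least min_margin_db (assumed)."""
    return link_margin_db(tx_power_dbm, rx_sensitivity_dbm, measured_loss_db) >= min_margin_db


# Hypothetical 0.5 km link at 3.5 dB/km with two connector pairs at 0.75 dB each.
loss = link_loss_db(0.5, 3.5, connector_pairs=2, connector_loss_db=0.75)
print(f"expected loss {loss:.2f} dB, margin {link_margin_db(-3.0, -11.0, loss):.2f} dB")
print(cable_passes(measured_loss_db=3.4, tx_power_dbm=-3.0, rx_sensitivity_dbm=-11.0))
```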

In terms of virtual or non-physical networks, the DCIM team can play a number of important roles. One example is balancing the virtual storage and processing capacity of a host machine. This may take the form of setting a limit on the virtual servers available per rack, but it could also include considerations like administrative access or identity, policy, and audit (IPA) rules. Additionally, allocating and tracking ports, firewalls, and shared protocols would be the responsibility of the network administrator in the DCIM team. The team would also manage file and data storage hierarchies, reconstitution/kickstart plans, and user accounts.
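Tracking ports is essentially bookkeeping that can be enforced in code. The hypothetical `PortRegistry` below records which service owns each (host, protocol, port) and rejects conflicting allocations; it is a sketch of the idea, not an integration with any real firewall or IPA tool.

```python
class PortRegistry:
    """Tracks which service owns each (host, protocol, port) so allocations never collide."""

    def __init__(self):
        self._owners = {}  # (host, protocol, port) -> service name

    def allocate(self, host, protocol, port, service):
        if not 1 <= port <= 65535:
            raise ValueError(f"invalid port {port}")
        key = (host, protocol.lower(), port)
        owner = self._owners.get(key)
        if owner is not None and owner != service:
            raise ValueError(f"{protocol}/{port} on {host} already belongs to {owner}")
        self._owners[key] = service

    def release(self, host, protocol, port):
        self._owners.pop((host, protocol.lower(), port), None)

    def ports_for(self, host):
        """Sorted (protocol, port, service) tuples, e.g. as input to a firewall rule review."""
        return sorted((proto, port, svc)
                      for (h, proto, port), svc in self._owners.items() if h == host)


registry = PortRegistry()
registry.allocate("vm-host-01", "tcp", 443, "web-frontend")
registry.allocate("vm-host-01", "udp", 123, "ntp")
try:
    registry.allocate("vm-host-01", "TCP", 443, "metrics")
except ValueError as err:
    print(err)  # TCP/443 on vm-host-01 already belongs to web-frontend
print(registry.ports_for("vm-host-01"))
```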

Required signals for data processing/transmission

In some situations, specific site timing or processing signals may be required for data processing/transmission. Some of the most commonly used references include several Inter-Range Instrumentation Group (IRIG) time code formats, pulse per second (PPS), GPS, and oscillator-derived clocks. Depending on the requirements of the data center, any of these signals may need to be generated or received to provide a site standard against which other data can be processed or derived. Because many system operations depend on the delivery and availability of these signals, they are a direct responsibility of the DCIM team.
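When an oscillator-derived clock loses its GPS or PPS reference, its time error grows with its frequency offset. The sketch below assumes a constant offset in parts per million (real oscillators also age and drift with temperature) to estimate the accumulated error and how long the clock can free-run within a given error budget.

```python
def holdover_error_s(frequency_offset_ppm, elapsed_s):
    """Time error accumulated by a free-running clock with a constant fractional frequency offset."""
    return abs(frequency_offset_ppm) * 1e-6 * elapsed_s


def max_holdover_s(frequency_offset_ppm, error_budget_s):
    """How long the clock can free-run before its error exceeds error_budget_s."""
    if frequency_offset_ppm == 0:
        return float("inf")
    return error_budget_s / (abs(frequency_offset_ppm) * 1e-6)


# Hypothetical oscillator 0.5 ppm off nominal after losing its GPS/PPS reference.
print(f"{holdover_error_s(0.5, 86_400) * 1e3:.1f} ms after one day")        # 43.2 ms after one day
print(f"{max_holdover_s(0.5, 1e-3):.0f} s before a 1 ms budget is exceeded")  # 2000 s
```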

Arrangements

A data center can be defined, most generally, by the ownership and management of the equipment within the space. Since most data centers consist of a series of racks, the easiest way to explain an arrangement is to explain who owns the racks, a row of racks, a room in a data center, or the entire data center itself.

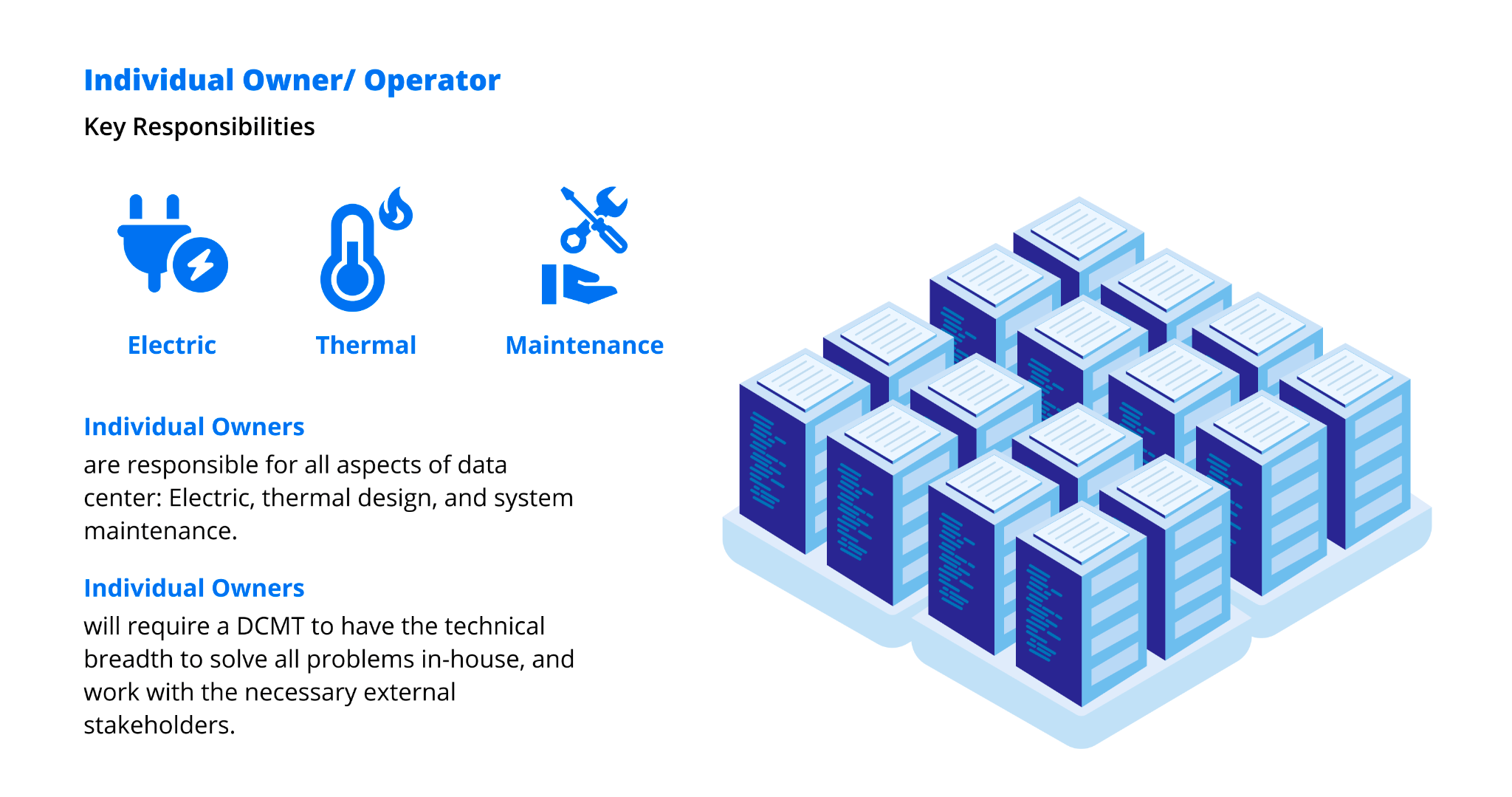

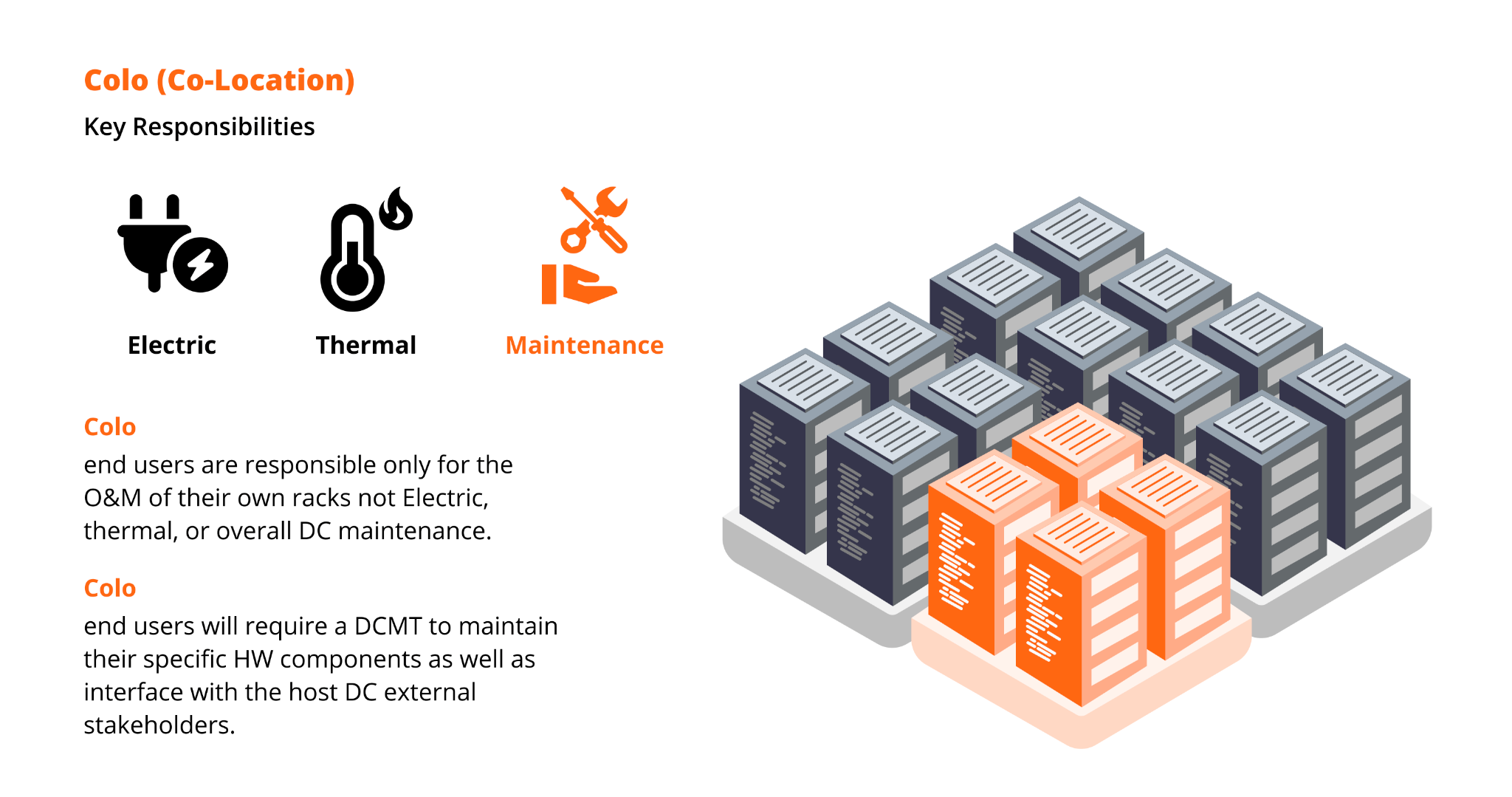

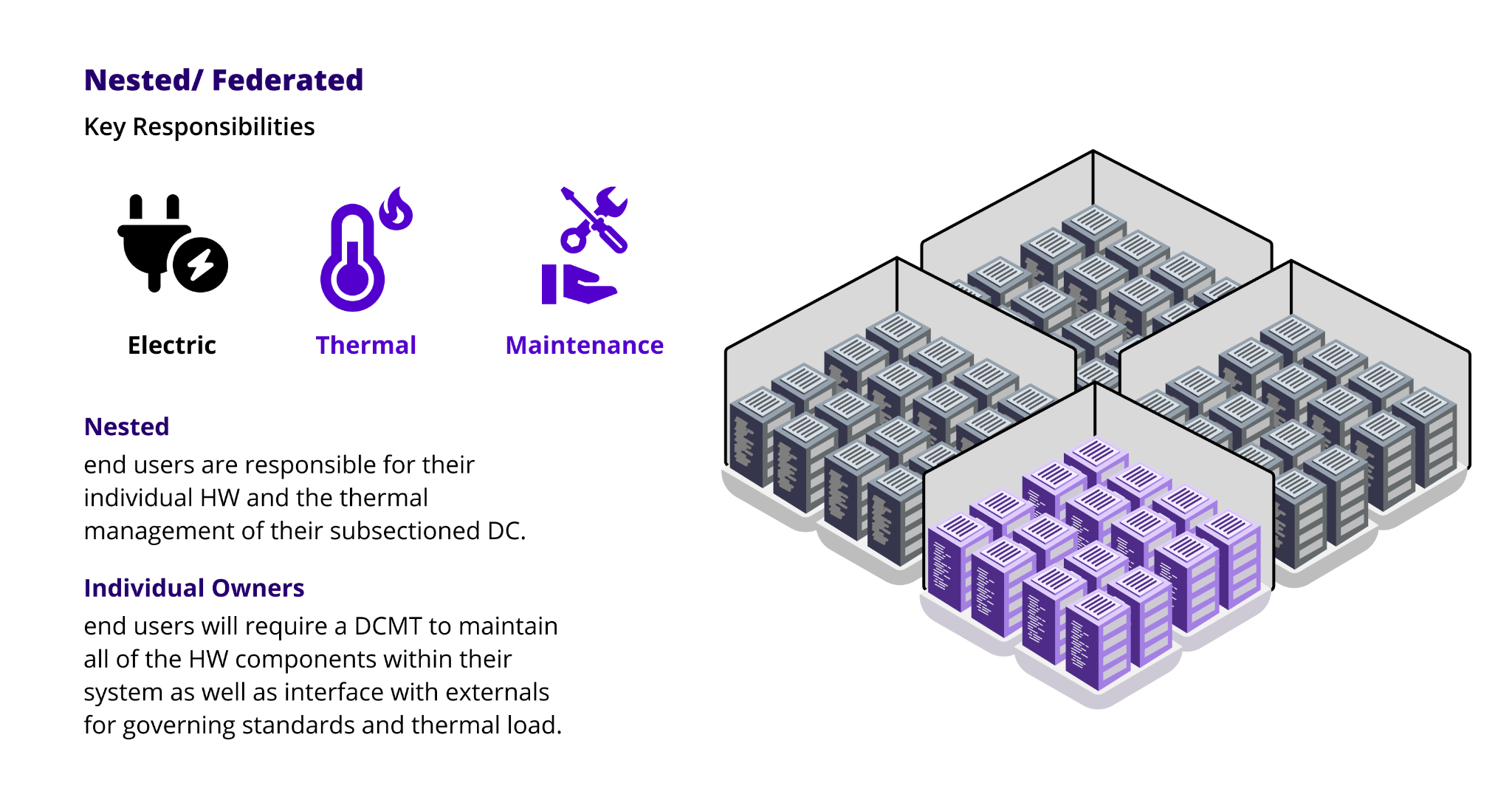

Individual owner/operator configurations have a single owner: the entire data center belongs to one entity, so all racks, walls/floors, and infrastructure are the responsibility of a single DCIM team. In a colocation (COLO) arrangement, there could be a single owner of the data center as well as different tenants/customers who have racks hosted in that data center. Each customer would likely have its own DCIM team or would otherwise rely on the host DCIM team for maintenance services. Finally, a nested data center has a structure similar to a COLO, except that the data center at large consists of a series of smaller rooms that operate as independent data centers, each with its own customers but linked by shared power and air handling. In this case, additional standards/rules would be required, organized by the larger host organization.

Individual owner/operator

Data centers are often owned by individual companies, organizations, or entities. In this case, the networks carry only the internal organization's traffic, and all hardware present is the responsibility of the DCIM team. Although some commercial off-the-shelf (COTS) components may come with vendor support contracts, the DCIM team in this configuration must balance the technical problems with the logistical hurdles of managing a large information system.

COLO (Colocation)

In a colocation (COLO) arrangement, there will always be an individual owner/operator involved in the management and maintenance of the infrastructure. There will also necessarily be tenants or customers who have their hardware hosted in the data center. This relationship, typically a host/tenant arrangement, is what leads to the bifurcation between the host DCIM team and tenant DCIM team roles. These two teams almost always have unique roles and responsibilities, but it is often the case that the host DCIM team may provide a set of services that reduces the tenant workload as part of the rental agreement.

The key responsibilities for a COLO can be split along the same host/tenant lines, with electrical and thermal infrastructure maintenance supported by the host DCIM team. Technical troubleshooting of individual racks or rack components would almost certainly be the responsibility of the tenant DCIM team.

Rental arrangements

In a COLO configuration, the host DCIM team is often responsible for establishing a solid framework for customer acquisition and management. In this sense, the DCIM team also operates as a business unit, balancing the technical needs and limitations of the host facility, the profitability of the rental arrangement, and customer requirements. Typically, this involves operating standards that define a level of care all customers or tenants are entitled to and are also expected to maintain. One example of this duty of care is enforcing rack power harmonics requirements so that customer/tenant hardware does not destabilize the power provided by the host data center. Another is requiring security-hardened devices that reduce the risk of network infiltration.
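A power harmonics requirement is usually expressed as a limit on total harmonic distortion (THD): the root-sum-square of the harmonic components divided by the fundamental. The sketch below computes THD from a measured spectrum; the 5% limit and the sample spectrum are hypothetical values, not figures from a particular standard or host policy.

```python
import math


def total_harmonic_distortion(magnitudes):
    """THD from RMS magnitudes ordered [fundamental, 2nd harmonic, 3rd harmonic, ...]."""
    fundamental, *harmonics = magnitudes
    if fundamental <= 0:
        raise ValueError("fundamental magnitude must be positive")
    return math.sqrt(sum(h * h for h in harmonics)) / fundamental


def meets_harmonics_policy(magnitudes, max_thd=0.05):
    """max_thd is a hypothetical tenant limit, not a value taken from any standard."""
    return total_harmonic_distortion(magnitudes) <= max_thd


# Hypothetical rack current spectrum in amperes: 20 A fundamental, strong 3rd and 5th harmonics.
spectrum = [20.0, 0.0, 1.2, 0.0, 1.6]
print(f"THD = {total_harmonic_distortion(spectrum):.1%}, compliant: {meets_harmonics_policy(spectrum)}")
```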

In most cases, the DCIM team for the host data center would take the role of warden of the host policy, but it would not be responsible for actually installing compliant hardware. That being said, certain reduced or extended arrangements could be made (based on the framework established by the host DCIM team) that may include additional tiers of maintenance support. An example of this kind of rental arrangement might mean a higher premium or price for additional levels of support: Tier-1 may be offered at a base price and Tier-2 or Tier-3 for a higher cost. Tier-4 and Tier-5 support would almost always fall on the tenant/customer, as these involve high-priority equipment and interaction with external vendors.

Common standards

Various commercial standards can be applied by the host DCIM team and would represent both a level of service/care expected by tenants as well as a tool to standardize the tenant experience. Examples may include International Organization for Standardization (ISO) standards; three of the more relevant published standards are highlighted below:

- ISO 9000 – Quality Management: Development and implementation of a quality management system (QMS)

- ISO 14000 – Environmental Management: Development and implementation of an environmental management system (EMS)

- ISO/IEC 27001 – Information Security: Implementation of security controls to mitigate risks/threats identified by the DCIM team

Nested/federated

As mentioned before, nested/federated data centers consist of a series of individual rooms or spaces that functionally operate as independent data centers. Like COLOs, the organization and operation of a nested/federated data center can be separated based on host responsibilities and tenant responsibilities.

The host organization will have a DCIM team that must be highly proficient in implementing, tracking, and monitoring site standards to ensure that nested tenants are compliant. Additionally, because nested data centers are often larger and more complex, the host DCIM team will have to work closely with electrical and network engineers to ensure redundancy and security. The host DCIM team therefore needs additional technical expertise in these fields, but it will likely not be responsible for the individual spaces or rooms the tenants occupy.

DCIM team delineation

While there are clear similarities between a COLO configuration and a nested/federated facility, scale is not the only difference between the two. In a nested data center, the tenant DCIM team is responsible for the individual rack maintenance and operation and, more importantly, also responsible for the entire space that they occupy. This would include the physical layout of the racks, the thermal load/organization, and even planning out future installations. The key responsibilities for the host DCIM team, in contrast, would only include systems that are shared by multiple tenants or constituent data centers. Most notably, this would include the electrical infrastructure, and it would be the responsibility of the host DCIM team to organize the utility grid power and provide large-scale UPS redundancy in the case of a fault.

That said, individual nested organizations (tenants) would likely provide their own backup systems referenced in the initial discussion on electrical infrastructure management, such as PDUs or ATS installs, in line with their own resiliency posture and requirements.
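The default host/tenant splits described across these arrangements can be captured in a simple lookup table. The categories and defaults in the sketch below simplify the three arrangements as described in this article; actual responsibilities are set by each host/tenant agreement, which may, for example, add paid Tier-2 or Tier-3 support from the host.

```python
from enum import Enum


class Team(Enum):
    OWNER = "owner/operator DCIM team"
    HOST = "host DCIM team"
    TENANT = "tenant DCIM team"


# Simplified default split; real agreements can shift individual items.
RESPONSIBILITY = {
    "individual owner/operator": {
        "utility power and UPS": Team.OWNER,
        "shared air handling/HVAC": Team.OWNER,
        "rack layout and thermal load": Team.OWNER,
        "rack maintenance and troubleshooting": Team.OWNER,
    },
    "COLO": {
        "utility power and UPS": Team.HOST,
        "shared air handling/HVAC": Team.HOST,
        "rack layout and thermal load": Team.HOST,  # host sets the aisles; tenants comply
        "rack maintenance and troubleshooting": Team.TENANT,
    },
    "nested/federated": {
        "utility power and UPS": Team.HOST,
        "shared air handling/HVAC": Team.HOST,
        "rack layout and thermal load": Team.TENANT,  # tenant runs its whole space
        "rack maintenance and troubleshooting": Team.TENANT,
    },
}


def responsible_team(arrangement: str, item: str) -> Team:
    """Look up the default owner of an item; raise KeyError with context if unknown."""
    try:
        return RESPONSIBILITY[arrangement][item]
    except KeyError:
        raise KeyError(f"no default owner for {item!r} in a {arrangement!r} arrangement") from None


for arrangement in RESPONSIBILITY:
    team = responsible_team(arrangement, "rack layout and thermal load")
    print(f"{arrangement}: rack layout -> {team.value}")
```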

Summary of key concepts

A data center can functionally be described as a facility for the operation and maintenance of data storage, processing, and transmission equipment. Data center management is ultimately a set of roles and responsibilities taken on by a data center management team that are needed to ensure the operation of a data center.

Three specific arrangements of data centers that pose unique challenges and responsibilities for DCIM teams are an individual owner/operator facility, a colocated (COLO) space, and a nested/federated arrangement. In all cases, an effective and adequately trained DCIM team is essential to the operation of a data center, and determining the scope of work, and thus the expectations of the DCIM team, is just as important. It is incumbent on the organization itself, whether the owners/operators of a data center or tenants within some larger space, to evaluate the scope of DCIM team work and choose an appropriate management plan and team.