CMDB Architecture Best Practices: Aligning Design with IT Objectives

When a critical application goes down, the IT team scrambles to find the root cause but often doesn’t know where to start. This scenario is all too familiar for organizations that treat a configuration management database (CMDB) as a mere inventory tool or static list of assets.

This approach misses the mark. A CMDB should be designed to be a dynamic map of your IT landscape, revealing how components work together, identifying potential risks, and supporting proactive decision-making.

Achieving this unified view isn’t straightforward—it depends on multiple design considerations. In this article, we discuss the key considerations of a CMDB architecture that promotes visibility and agility while supporting your organization’s larger objectives.

Summary of key CMDB architecture considerations

| Consideration | Description |
|---|---|
| Data model flexibility | Define the extent to which the CMDB schema can be customized to accommodate unique organizational requirements and complex relationships among configuration items (CIs). |
| Data integration capability | Consider the ease and efficiency of integrating the CMDB with external data sources, tools, and systems (including APIs and data connectors) and compatibility with industry-standard protocols. |
| Data consistency | Ensure the accuracy and reliability of data stored within the CMDB, including mechanisms for data validation and normalization and enforcement of data quality standards to ensure consistency. |
| Governance framework | Adopt governance policies, processes, and controls within the CMDB to manage access, permissions, change management, and compliance with regulatory and industry standards. |
| Disaster resilience | Consider the CMDB’s capabilities for disaster recovery and business continuity, including data backup, replication, failover mechanisms, and recovery time objectives (RTOs) to minimize data loss and downtime. |
| Data storage efficiency | Measure the efficiency of data storage within the CMDB, considering factors such as data compression, deduplication, and optimization techniques to minimize storage requirements. |

Comparing CMDB architectures

The optimal CMDB architecture depends primarily on your organization’s size, complexity, and specific requirements. The key is to adopt a flexible architecture that can adapt as your organization’s maturity level evolves.

Though there are various deployment models, it’s important to remember that these models aren’t mutually exclusive. An organization’s use case may demand multiple semi-autonomous CMDB instances to maintain a comprehensive view of its IT landscape.

Some of the most common CMDB deployment models include the following.

Centralized vs. decentralized databases

- Centralized: A single CMDB serves as the source of truth for the entire IT landscape. While the model promotes consistency and eases global reporting, it can create bottlenecks if change control processes aren’t well-defined, and it requires careful design to scale effectively.

- Decentralized (aka federated): Multiple, potentially specialized CMDBs exist, sometimes managed by different teams. This approach is typically leveraged for flexibility and local control, but it risks data inconsistencies and makes holistic visibility challenging.

On-premises vs. cloud-based

- On-premises: Traditionally deployed within your organization’s data center. Although it offers full control over the environment, deployment demands upfront investment and in-house maintenance capabilities.

- Cloud-based: Hosted by third-party providers like Device42 and often provided as software as a service (SaaS). This model offers rapid deployment, scalability, and reduced infrastructure management burden.

Hybrid

A blend of the above, combining the advantages of both worlds. For example, a central on-premises CMDB for core assets can be integrated with specialized cloud-based CMDBs for ephemeral workloads or SaaS environments.

Foundational capabilities of a robust CMDB architecture

Enterprise leaders frequently perceive the CMDB as a complex technical tool best handled by IT teams. However, the true value of a well-designed CMDB comes from its alignment with broader business goals. The following design principles help determine if your CMDB is capable of supporting strategic decision-making.

Data model flexibility

A well-designed CMDB is more than just a data vault. While one core aspect of a robust CMDB architecture is to ensure seamless interaction among the collection, storage, and access layers, the data model essentially defines the blueprint of CI information (hardware, software, etc.), attributes (model names, IP addresses), and how they relate to one another within the CMDB. These relationships can be hierarchical (parent-child), associative (many-to-many), or a combination.
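To make the blueprint idea concrete, here is a minimal sketch of a data model with CIs, free-form attributes, and typed relationships. The class names, relationship types, and sample CIs are all hypothetical and do not reflect any particular CMDB product's schema.

```python
from dataclasses import dataclass, field


@dataclass
class ConfigurationItem:
    """A CI with a small fixed core and an open-ended attribute bag."""
    ci_id: str
    ci_type: str  # e.g. "server", "application", "container"
    attributes: dict = field(default_factory=dict)  # custom, per-technology fields


@dataclass(frozen=True)
class Relationship:
    """A typed, directed link between two CIs."""
    source_id: str
    rel_type: str  # e.g. "parent-of", "depends-on"
    target_id: str


class CMDBModel:
    def __init__(self):
        self.items = {}
        self.relationships = set()

    def add_item(self, item):
        self.items[item.ci_id] = item

    def relate(self, source_id, rel_type, target_id):
        # Refuse links to CIs that are not in the model.
        if source_id not in self.items or target_id not in self.items:
            raise KeyError("both CIs must exist before they can be related")
        self.relationships.add(Relationship(source_id, rel_type, target_id))

    def related(self, source_id, rel_type):
        """Return the IDs that source_id points to via rel_type, sorted."""
        return sorted(r.target_id for r in self.relationships
                      if r.source_id == source_id and r.rel_type == rel_type)


model = CMDBModel()
model.add_item(ConfigurationItem("rack-1", "rack"))
model.add_item(ConfigurationItem("srv-1", "server", {"ip_address": "10.0.0.5"}))
model.add_item(ConfigurationItem("app-1", "application"))
model.add_item(ConfigurationItem("app-2", "application"))

model.relate("rack-1", "parent-of", "srv-1")  # hierarchical
model.relate("app-1", "depends-on", "srv-1")  # associative: many apps ...
model.relate("app-2", "depends-on", "srv-1")  # ... can share one server

# A new technology only needs new attribute keys, not a schema change.
model.add_item(ConfigurationItem("ctr-1", "container",
                                 {"image": "web:1.4", "ephemeral": True}))
```

Keeping relationships as separate typed records, rather than as fields on the CI, is one way to let new relationship types appear without touching existing CI definitions.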

Many seasoned architects confidently assert that the single most critical factor in building a robust CMDB is data model flexibility. That’s only partially true—the data model isn’t the only factor driving your CMDB’s agility—but it’s certainly one of the key factors.

If your IT estate hosts cloud-native applications and containerized microservices, a rigid data model forces the CMDB to represent them in a limited way. A modular, extensible model adapts to these technologies, allowing you to add custom attributes and relationships specific to each technology. Imagine automatically tracking the ephemeral nature of containers or orchestrating updates based on dependency changes.

Why is this flexibility so important?

- A future-proof data model is fundamentally extensible, allowing for new data points and relationships as novel technologies emerge. This means the ability to track compliance-related metadata or calculate the total cost of ownership by associating licensing and support costs with assets.

- A flexible model can align with the Common Information Model (CIM) or similar standards, promoting seamless integration with monitoring, ticketing, and change management tools. This standardization can potentially eliminate manual data mapping and speed up response times.

- Beyond managing technology sprawl, flexibility directly impacts day-to-day operations. Consider being able to dynamically assign risk scores to assets, driving prioritized remediation efforts, or automating reporting for software license audits to reduce labor and ensure compliance.

Data integration capability

Enterprises that hastily adopt cloud services often find themselves with a fragmented view of assets across a hybrid estate. A cloud-aware CMDB is crucial for understanding dependencies and managing costs by identifying shadow IT and wasted resources. Leaders should demand CMDBs that embrace the ephemeral nature of the cloud. This means real-time discovery, visualization of autoscaling resources, and the ability to understand resource costs alongside on-premises infrastructure.

However, understand that auto-discovery tools can vary significantly in their capabilities. When evaluating CMDB solutions, carefully examine the breadth and depth of their discovery features. A CMDB with strong integration capabilities should allow your auto-discovery tool to pull and correlate data from external sources (monitoring systems, vulnerability scanners, etc.), validating information and enriching your CMDB with key insights.

Consider solutions like Device42, which offer a wide range of prebuilt integrations and powerful RESTful APIs to streamline the automated discovery of on-premises assets, dependencies, and usage patterns.

Strong integration capabilities streamline operations and accelerate IT initiatives in many other ways, too, allowing you to do the following:

- Undertake resource allocation, cloud investments, or software retirements based on actual numbers from your CMDB.

- Proactively mitigate risks by integrating vulnerability feeds with your asset inventories, targeting patching and remediation efforts based on the real-world impact on your critical systems.

- Onboard new acquisitions or sunset end-of-life infrastructure quickly by reflecting those changes accurately throughout the CMDB and connected systems.
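As an illustration of the vulnerability-feed point above, the following sketch joins a feed to an asset inventory and ranks the findings. The records, field names, and the severity-times-criticality formula are assumptions made for the example, not a prescribed scoring method.

```python
# Hypothetical asset inventory, as it might be exported from a CMDB.
assets = [
    {"ci_id": "srv-1", "software": "openssl 1.1.1", "criticality": 3},
    {"ci_id": "srv-2", "software": "nginx 1.18", "criticality": 1},
    {"ci_id": "srv-3", "software": "openssl 1.1.1", "criticality": 1},
]

# Hypothetical vulnerability feed entries keyed by affected software.
vulnerabilities = [
    {"vuln_id": "VULN-A", "software": "openssl 1.1.1", "severity": 9.8},
    {"vuln_id": "VULN-B", "software": "nginx 1.18", "severity": 5.3},
]


def prioritize(assets, vulnerabilities):
    """Join the feed to the inventory and rank by severity x criticality."""
    by_software = {}
    for vuln in vulnerabilities:
        by_software.setdefault(vuln["software"], []).append(vuln)

    findings = []
    for asset in assets:
        for vuln in by_software.get(asset["software"], []):
            findings.append({
                "ci_id": asset["ci_id"],
                "vuln_id": vuln["vuln_id"],
                "priority": vuln["severity"] * asset["criticality"],
            })
    # Highest priority first, so remediation starts with critical systems.
    return sorted(findings, key=lambda f: f["priority"], reverse=True)


ranked = prioritize(assets, vulnerabilities)
```

Here the same vulnerability lands at different positions in the queue depending on how critical the affected asset is, which is the context a bare vulnerability feed cannot supply.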

Data consistency

Capturing basic CI relationships in your CMDB’s data model is important. However, the CMDB’s true power lies in leveraging advanced relationship types like “depends-on” and “impacts” for real-time impact analysis. (Imagine predicting which services a hardware failure would disrupt before the failure happens.)
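Impact analysis over “depends-on” relationships can be sketched as a graph traversal. The dependency map and CI names below are hypothetical, and a production CMDB would likely run this kind of query inside its own relationship engine.

```python
from collections import deque

# Hypothetical "depends-on" edges: each key depends on the CIs in its list.
depends_on = {
    "web-portal": ["app-server"],
    "reporting": ["database"],
    "app-server": ["database", "host-1"],
    "database": ["host-2"],
}


def impacted_by(failed_ci, depends_on):
    """Return every CI that directly or transitively depends on failed_ci."""
    # Invert the edges so we can walk from a component to its dependents.
    dependents = {}
    for ci, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(ci)

    # Breadth-first walk; the impacted set doubles as the visited set.
    impacted, queue = set(), deque([failed_ci])
    while queue:
        current = queue.popleft()
        for ci in dependents.get(current, []):
            if ci not in impacted:
                impacted.add(ci)
                queue.append(ci)
    return sorted(impacted)
```

Calling `impacted_by("host-2", depends_on)` walks upward from the failed host through the database to every service that relies on it, directly or indirectly.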

In reality, though, data is rarely “plug and play” between systems. Your CMDB should include tools for data normalization, cleansing, and bidirectional synchronization so that it does not end up holding silos of incompatible formats and terminology. A robust data integration capability should employ prebuilt connectors and scripting tools to transform incoming data into a unified format, ensuring that it is consistent and accurate before it enters the CMDB.
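A small sketch of what normalization into a unified format might look like follows. The source records, field aliases, and vendor aliases are invented for illustration; real mappings would be maintained as reference data.

```python
# Hypothetical raw records for the same server from two sources.
raw_records = [
    {"source": "discovery", "Hostname": "SRV-01.Example.com ", "vendor": "Dell Inc."},
    {"source": "spreadsheet", "host": "srv-01.example.com", "manufacturer": "DELL"},
]

# Assumed mappings from source-specific names to canonical ones.
FIELD_ALIASES = {"Hostname": "hostname", "host": "hostname",
                 "vendor": "vendor", "manufacturer": "vendor"}
VENDOR_ALIASES = {"dell inc.": "Dell", "dell": "Dell"}


def normalize(record):
    """Map source-specific field names and values onto one canonical form."""
    clean = {}
    for key, value in record.items():
        canonical = FIELD_ALIASES.get(key)
        if canonical is None:
            continue  # drop fields with no mapping (including "source")
        clean[canonical] = value.strip()
    clean["hostname"] = clean["hostname"].lower()
    clean["vendor"] = VENDOR_ALIASES.get(clean["vendor"].lower(), clean["vendor"])
    return clean


normalized = [normalize(r) for r in raw_records]
```

After normalization, both source records describe the server identically, so they can be matched and merged instead of creating two conflicting CIs.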

Imagine every stakeholder in your organization, regardless of access point, having access to the same accurate and up-to-date information. When stakeholders trust the information they see, their reliance on the CMDB as a strategic tool grows naturally. To protect data integrity, build mechanisms such as data validation, conflict resolution, and version control into the CMDB’s foundational blueprint.

Governance framework

Even the best technology is ineffective without proper governance and processes. Enterprises need clear ownership, data quality standards, and a culture where the CMDB is seen as the authoritative source of truth.

It is also common for IT infrastructure teams to face multiple audits, including financial, security, risk, ISO 27001, and more. These audits become especially painful when:

- You lack a precise inventory of your software assets.

- Critical data is undocumented or has been lost due to employee churn.

- Software installations and removals happen without proper tracking.

Your CMDB should be designed and supported with a well-defined governance framework to ensure data security, access control, and compliance with regulations. The framework should establish clear ownership, roles, and responsibilities for data management. It should also define data access controls, audit trails, and retention policies to safeguard sensitive information and comply with industry standards.
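The access-control and audit-trail elements of such a framework can be sketched in a few lines. The roles, permissions, and log fields here are assumptions for illustration; an actual deployment would rely on the CMDB's own access model and a durable audit store.

```python
from datetime import datetime, timezone

# Assumed role-to-permission mapping for illustration.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "ci_owner": {"read", "update"},
    "cmdb_admin": {"read", "update", "delete"},
}

# In-memory stand-in for a tamper-resistant audit store.
audit_trail = []


def authorize(user, role, action, ci_id):
    """Check a role's permission and record the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_trail.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "ci_id": ci_id,
        "allowed": allowed,
    })
    return allowed
```

Logging denied attempts as well as approved ones gives auditors evidence that the controls are being exercised, not just that they exist.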

A well-governed CMDB framework must address:

- Clear definitions and processes for data collection and updates across the organization. This ensures consistency and reduces ambiguity, ultimately promoting governance, risk mitigation, and efficient spend management.

- Structured data that clearly outlines the relationships between IT assets and business objectives. A well-governed CMDB empowers decision-makers with the context necessary to prioritize initiatives, assess the impact of changes, and justify resource allocation.

- The adoption of automation wherever possible to maximize productivity and staff satisfaction.

Disaster resilience

A CMDB serves as a critical resource during disaster recovery, but true resilience means considering the CMDB’s own survivability in a crisis. Although traditional backups can capture broad snapshots, it is important to validate whether those snapshots include the granular details of configurations, licenses, virtual machine settings, and complex interdependencies.

Consider designing your CMDB to maintain this critical data so that you can restore systems (not just raw data) with precision. This may mean architecting for geo-redundancy, where CMDB data is replicated across multiple locations to safeguard against regional disruptions, or providing read-only access to the CMDB so that limited queries remain possible for critical troubleshooting during an outage. Strict version control and backup strategies are equally essential, and storing critical CMDB records in an immutable format can prevent tampering or corruption amid the chaos of recovery.

Another less common but highly effective approach is to proactively model disaster scenarios to build core DR intelligence and develop targeted contingency plans. Define recovery time objectives (RTOs) and associate them with each critical asset tier within your CMDB. In particular, use CMDB data to map interdependencies and understand how failures cascade.
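One way to combine tiered RTOs with dependency data is to look for assets whose dependencies are allowed to recover more slowly than they are. The tiers, RTO values, and assets below are hypothetical, and the check covers direct dependencies only.

```python
# Assumed RTOs in hours per asset tier.
TIER_RTO_HOURS = {"tier-1": 4, "tier-2": 24, "tier-3": 72}

# Hypothetical assets with their tier and the CIs they depend on.
assets = {
    "payments-app": {"tier": "tier-1", "depends_on": ["payments-db", "auth-service"]},
    "payments-db": {"tier": "tier-1", "depends_on": []},
    "auth-service": {"tier": "tier-2", "depends_on": []},
}


def rto_conflicts(assets, tier_rto):
    """List (asset, dependency) pairs where the dependency recovers too slowly."""
    conflicts = []
    for name, asset in assets.items():
        own_rto = tier_rto[asset["tier"]]
        for dep in asset["depends_on"]:
            dep_rto = tier_rto[assets[dep]["tier"]]
            # An asset cannot meet its RTO if something it needs takes longer.
            if dep_rto > own_rto:
                conflicts.append((name, dep))
    return conflicts
```

In this sample, the tier-1 payments application depends on a tier-2 authentication service, so its four-hour objective is not achievable until that dependency is re-tiered or decoupled.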

A clear focus on understanding the true impact of disasters yields various tangible benefits, as reflected in the following case studies:

- Organizations that use Device42, on average, resolve outages 10× faster and obtain a 4.8× return on investment.

- One Device42 client transformed its disaster recovery: recovery times fell from seven days to two, and the DR team was streamlined to a roughly 60% smaller footprint (down from 65 to 25 employees).

Data storage efficiency

Efficient data storage should be a fundamental principle of CMDB architecture design. Achieving it depends on several key design principles.

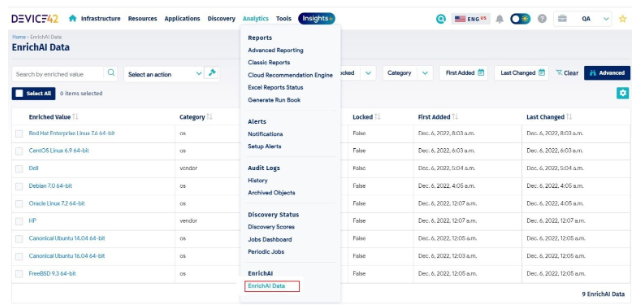

Database normalization minimizes redundancy and ensures the consistency of CI records. Device42, a next-generation CMDB, is designed to enrich discovered data through normalization and categorization out of the box. For example, instead of storing the vendor and model of a server repeatedly within each CI record, a normalized design would reference this information from a separate table, resulting in significant storage savings.

Normalized and enriched data using the Device42 EnrichAI service
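The vendor-and-model example above can be sketched with an in-memory SQLite database. The table and column names are hypothetical and are not Device42's schema; the point is only that the vendor and model are stored once and referenced by ID.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE hardware_model (
        model_id INTEGER PRIMARY KEY,
        vendor   TEXT NOT NULL,
        model    TEXT NOT NULL,
        UNIQUE (vendor, model)
    );
    CREATE TABLE ci (
        ci_id    INTEGER PRIMARY KEY,
        hostname TEXT NOT NULL,
        model_id INTEGER NOT NULL REFERENCES hardware_model (model_id)
    );
""")

# Store the vendor/model pair once ...
conn.execute("INSERT INTO hardware_model (vendor, model) VALUES (?, ?)",
             ("Dell", "PowerEdge R740"))
model_id = conn.execute("SELECT model_id FROM hardware_model").fetchone()[0]

# ... and let every server CI reference it by ID.
conn.executemany("INSERT INTO ci (hostname, model_id) VALUES (?, ?)",
                 [(f"srv-{n:02d}", model_id) for n in range(1, 4)])

# A join reassembles the full record when it is needed.
rows = conn.execute("""
    SELECT ci.hostname, hardware_model.vendor, hardware_model.model
    FROM ci JOIN hardware_model USING (model_id)
    ORDER BY ci.hostname
""").fetchall()
```

Besides saving space, this layout means a corrected vendor name is updated in one row rather than in every CI record that mentions it.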

Selective use of compression algorithms can further reduce storage footprints, provided that the impact on frequently accessed CI data is carefully evaluated. A thorough analysis of data access patterns should ideally reveal where compression yields a net benefit. That said, be sure to consider the potential performance impact of decompression so that it aligns with operational needs. For instance, the overhead of decompressing CI data in time-sensitive scenarios like change management or incident resolution can introduce significant delays.
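A rough way to evaluate that trade-off is to measure the compression ratio and the decompression time on representative data. The sketch below uses Python's standard `zlib` module on made-up CI records; the record shape and the compression level are assumptions, and real measurements should use your own data and access patterns.

```python
import json
import time
import zlib

# Hypothetical CI records; real payloads would come from the CMDB itself.
records = [{"ci_id": f"srv-{n}", "vendor": "ExampleVendor", "os": "Linux",
            "status": "active"} for n in range(1000)]
raw = json.dumps(records).encode("utf-8")


def evaluate_compression(raw, level=6):
    """Return (compression ratio, seconds needed to decompress once)."""
    compressed = zlib.compress(raw, level)
    start = time.perf_counter()
    restored = zlib.decompress(compressed)
    elapsed = time.perf_counter() - start
    # Guard against a lossy round trip before trusting the numbers.
    if restored != raw:
        raise ValueError("round trip changed the data")
    return len(raw) / len(compressed), elapsed


ratio, decompress_seconds = evaluate_compression(raw)
```

Comparing `ratio` against `decompress_seconds` for each class of CI data gives a first indication of where compression pays off and where it would slow down time-sensitive lookups.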

Remember: Efficient data storage improves query performance and overall CMDB agility and directly contributes to OpEx reduction.

Because not all CI data is accessed with equal frequency, a tiered storage model can reduce cost. Actively used CI information stays on high-performance (and more expensive) storage tiers, while less frequently accessed records, such as historical CI records or configuration information for retired assets, reside on more economical storage. This design principle balances cost and performance requirements.
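A tiering policy of this kind can be expressed as a simple rule over each record's status and last access date. The 90-day window, the tier names, and the sample records are assumptions chosen for the example.

```python
from datetime import date, timedelta

# Assumed policy: anything untouched for 90 days, or retired, goes to cold storage.
HOT_WINDOW = timedelta(days=90)


def storage_tier(record, today):
    """Pick a storage tier from a CI record's status and last access date."""
    if record["status"] == "retired":
        return "cold"
    if today - record["last_accessed"] > HOT_WINDOW:
        return "cold"
    return "hot"


today = date(2024, 6, 30)
records = [
    {"ci_id": "srv-1", "status": "active", "last_accessed": date(2024, 6, 25)},
    {"ci_id": "srv-2", "status": "active", "last_accessed": date(2023, 12, 1)},
    {"ci_id": "srv-3", "status": "retired", "last_accessed": date(2024, 6, 29)},
]
placement = {r["ci_id"]: storage_tier(r, today) for r in records}
```

Only the recently used, active record stays on the fast tier; the stale and retired records move to cheaper storage regardless of how recently the retired one was viewed.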

Conclusion

Many organizations struggle to realize the full potential of their CMDB investments: Gartner research indicates that only 25% see significant value. This low success rate often leads to a negative perception of CMDBs, but the fault lies more with implementation and usage than the technology itself.

While upfront setup costs are a natural concern, a poorly designed data model can lead to hidden inefficiencies that drive up long-term expenses. Critically, a CMDB must be built with flexibility in mind.

The right way to manage IT is with complete, 100% accurate visibility, and Device42 understands that a robust CMDB design is essential to achieve this. Our platform ensures optimized data structures for rapid data retrieval, seamless integration with automation tools to streamline workflows, and the ability to extract actionable insights that align IT with overall business objectives.

But don’t take our word for it. Learn how:

- SoftBank Corp leveraged Device42 for automated discovery, inventory, and data integration to reduce costs by 25% over five years, increase automation to 95%, and significantly lower support requests.

- Imperial College London utilized Device42’s real-time monitoring and alerting capabilities for proactive issue identification and resolution, resulting in improved uptime, faster incident response times, and enhanced staff confidence in system reliability.